March 11, 2006

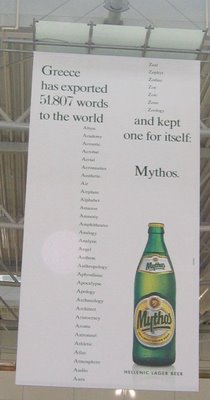

51,807

People love to count words. I mean, exactly.

I'm sympathetic, and have some numbers of my own to contribute. Last night and this morning, in the process of working towards some corpus-based lexicography in Bengali, I wgot 12,328 .htm files from a journalistic site, containing 2,771,625 Bengali word tokens representing 91,407 distinct Bengali wordform types, with the commonest 16,848 wordforms giving 95% coverage of the tokens in the collection.

When I think back to what things were like even 15 or 20 years ago, this amazes me. The whole business required the following steps, taking between one and two minutes of my time in total:

(1) Find a promising source of Bengali text, by searching Google with a Unicode string for a common Bengali word. I used আমাদের, selected from the BBC News Bengali page (it's not the BBC site whose statistics I report -- the Beeb only keeps a few thousand words of text there, as far as I was able to determine). Time: 30 seconds.

(2) Use wget to download all the html pages from one (promising-looking journalistic) site. This was a one-line command on my desktop linux box. Time to issue the command: 10 seconds. (The download took several hours, but I was asleep at the time.)

(3) Find all the Bengali words in the downloaded material. This required me to write a short shell script

for n in `find . -name "*htm*" -print` do getbengali <$n done | hist >BengaliHarvest1.hist

which calls a short perl program to extract Bengali word tokens from an arbitrary file:

#!/usr/bin/perl

use utf8;

binmode(STDOUT, ":utf8");

binmode(STDIN, ":utf8");

my $n; my $state = 0;

# 0 = start

# 1 = within Bengali

# 2 = within non-initial non-Bengali

while (<>) {

while ($_ =~ s/^(.)//) {

$n = ord($1);

if($n >= 0x981 && $n <= 0x9FA){ # Bengali unicode range

$state = 1; print("$1");

}

elsif($state == 1){ # start of non-Bengali

$state = 2; print("\n");

}

}

}

I'll confess that it took me a while to learn about binmode() and ord() -- my first few tries at writing the perl program to pull out Bengali words failed mysteriously, and I actually had to read skim the documentation on how to deal with utf8 in perl. However, I went through that learning process a week ago, so I already had the "getbengali" program in hand -- as well as a simple program to compute lexical histograms, written many years ago -- and therefore all I had to do was write the little shell script. Time this morning needed: 30 seconds. (Well, that was my time -- the script ran while I was preparing and eating a bowl of oatmeal.)

The total amount of my time needed to obtain a 2.7M-word corpus of Bengali and compute the frequencies of wordforms in it: about a minute and a half. I invested another minute or so in creating and browsing a concordance. I imagine that somewhere, Hugo of St. Cher is smiling and shaking his head. The 500 Dominican monks who worked for him may be signaling with a different body part.

[For those of you who are not text encoding geeks, or perhaps not even all that interested in computers, here's a fun story. (The rest of you may enjoy it too.)

The encoding known today as UTF-8 was invented by Ken Thompson. It was born during the evening hours of 1992-09-02 in a New Jersey diner, where he designed it in the presence of Rob Pike on a placemat (see Rob Pike’s UTF-8 history). It replaced an earlier attempt to design a FSS/UTF (file system safe UCS transformation format) that was circulated in an X/Open working document in August 1992 by Gary Miller (IBM), Greger Leijonhufvud and John Entenmann (SMI) as a replacement for the division-heavy UTF-1 encoding from the first edition of ISO 10646-1. By the end of the first week of September 1992, Pike and Thompson had turned AT&T Bell Lab’s Plan 9 into the world’s first operating system to use UTF-8.

All too often, we neglect the continued important role of scraps of paper in technological history.]

[I should also add that I don't know any Bengali. How I came to be involved in a bit of Bengali corpus-based lexicography is a another story.]

[And I hope it goes without saying that counting words so precisely is actually very silly, at least with reference to questions like "how many words did English borrow from Greek?", or "how many words are there in English?" ...]

[Update: Ben Zimmer points to this quote from My Big Fat Greek Wedding:

Give me a word, any word, and I show you that the root of that word is Greek.

]

Posted by Mark Liberman at March 11, 2006 08:37 AM