November 30, 2003

Twanged

In the light of Mark's posting about the remark attributed to Guy Bailey about the origin of Southern r-lessness, I should withdraw my captious comments. No one knows better than I how the Times' editors (like others) occasionally make cuts that can leave a misleading impression, particularly when they're working under deadline -- and unfortunately, as I can also testify, it's always the writer who gets the mail.

Twang scholar on "the constraints of journalism"

I figured it was something like that.

It was great to see Ralph Blumenthal's piece on "Scholars of Twang" featured so prominently in the New York Times yesterday. It's not often that we see an engaging story about an interesting linguistic project on the front page of a national newspaper! But there were a couple of puzzling things in the interview with Guy Bailey that formed the core of the article. One was an account of the origins of U.S. r-lessness in terms of plantation owners sending their sons to England for schooling. Another was Guy's response when asked where fixin' to came from: "who knows?"

Pretty much any linguistically well-informed person would be puzzled about these aspects of the story, as Geoff Nunberg and I were, because there is a well-known story about the American distribution of r-lessness that is more complicated but also more interesting, and there is an obvious sort of answer about fixin' to, in terms of the specific history of fix and the general tendency of verbs of intention or preparation to get semantically bleached into mere tense or aspect. And I'd have bet money that Professor Bailey knows all of this much better than I do.

So I wrote to Guy to ask him what happened. His response with respect to r-lessness (posted with permission):

It was good to hear from you. The article was nice, but the stuff on the origins of r-lessness reflects the constraints of journalism. When asked about the origins of r-lessness in the U.S., I offered two or three different theories (including colonial education in England, an old theory by the way) and indicated that in the South, r-lessness was probably heavily influenced by the speech of slaves. Ralph (I assume, although editors may have shortened the article) chose to write about only one of them. Unfortunately, it's not the one I favor, at least for the South. On the whole though, Ralph did a good job.

And with respect to fixin' to:

The comment on fixin to was also part of a much longer explanation. I began by saying "who knows?" and then outlining "one possibility" -- a long, involved step by step process that Jan worked out a decade or so ago (but which she hasn't published) using OED and other dictionary citations. I have to admit that her derivation probably wouldn't make good news copy, although it is a process that parallels the similar grammaticalization of gonna.

In other words, as I thought, a combination of journalistic focus and editorial compression led to Guy being quoted in a way that doesn't accurately reflect what he knows and what he thinks.

This happens all the time, and not just to linguists. I've hardly ever read a piece of popular journalism, on a topic where I have independent knowledge, that didn't have at least one instance of this sort of thing. Journalists do misunderstand sometimes, and they want a good story, and they need a short one.

Does it matter? Well, it can be personally annoying -- and sometimes professionally embarrassing -- to be made to seem to say things that one didn't say and didn't mean. Also, the content of the mistake is sometimes significant. In this case, as Geoff Nunberg observed, a social change is attributed to social influence from above (rich kids schooled in England), instead of social influence from below (the effect of the speech of slaves). On balance, though, I feel that the result (an entertaining story about linguistics on the front page of the New York Times) is well worth the cost (a couple of misrepresentations of Guy Bailey's views on linguistic history). Of course, that's easy for me to say, I'm not the one being (mis)quoted. But more of us should be willing to take the risk.

I'll let Professor Bailey (who is also provost of the University of Texas at San Antonio) have the last word:

One thing we as linguists probably need to do is to figure out how to make technical linguistic descriptions easily available to a public which has a more general education. Interestingly enough, as an administrator, I always try to give reporters sound bites that reflect the message UTSA wants communicated; as a linguist, I never do.

November 29, 2003

How's your copperosity sagaciating?

Geoff Nunberg objects to the New York Times' quotation of Guy Bailey to the effect that r-lessness spread in Texas from the children of plantation owners who went to England for schooling and picked up the fashion there. I don't know whether that's what Guy really said -- it wouldn't be the first time that the NYT got a quotation or attribution garbled. And certainly both Nunberg and Bailey know a lot more about this than I do.

But in the course of putting together a lecture for an undergraduate course, I happen to have stumbled over a fascinating bit of trivia about r-lessness in 19th-century America, involving Uncle Remus, James Joyce, and the British recognition of the Republic of Texas. So here goes.

Loss of syllable-final /r/ was a change in progress in England in colonial times, variably distributed by geography and social class. As a result, the complex geographical and social patterns of r-lessness in the U.S. could logically have three sources: settlement patterns, patterns of continued contact with England, and local sociolinguistic dynamics.

The traditional account (as e.g. in (Richard) Bailey 1996 and Lass 1992) was that loss of postvocalic /r/ in England was a 17th and 18th century phenomenon. Thus r-lessness would have been widespread (but not universal) during the period when English speakers emigrated to North America, and thus settlement patterns are a likely source of influence.

However, recent research suggests that "... most of England was still rhotic ... at the level of urban and lower-middle-class speech in the middle of the nineteenth century, and that extensive spreading of the loss of rhoticity is something that has occurred subsequently..." (Peter Trudgill, "A Window on the Past: 'Colonial Lag' and New Zealand Evidence for the Phonology of Nineteenth-Century English", American Speech 74(3), 1999).

If this is true, then U.S. settlement patterns are less relevant, and patterns of contact with England are more relevant. Prof. Bailey may have some evidence about this, I don't know.

However, I do want to cite one interesting piece of evidence in favor of an earlier adoption of r-lessness in the American south in general and Texas in particular.

On this web page, one Mike Schwitzgebel cites his Ohio grandfather's use of the word "copperosity". He tracks this via the OED to corporosity, "Bulkiness of body. Also used in a humorous title or greeting", with a citation to James Joyce Ulysses 418 "Your corporosity sagaciating O K?". This in turn is apparently a reference to Joel Chandler Harris' The Tar Baby and other Tales of Uncle Remus, where "copperosity" and "segashuate" represent the African-American vernacular pronunciations of these words.

Schwitzgebel tracks the Harris/Joyce greeting further to Nicholas Doran P. Maillard's 1842 History of the Republic of Texas. Maillard was a British lawyer who lived in Richmond, Texas, for about nine months during the year 1840. His book was a virulent anti-Texas screed, published in the hope of influencing British public opinion against diplomatic recognition of the Republic of Texas. Maillard describes the infant republic as "stained with the crime of Negro slavery and Indian massacre", and "filled with habitual liars, drunkards, blasphemers, and slanderers; sanguinary gamesters and cold-blooded assassins; with idleness and sluggish indolence (two vices for which the Texans are already proverbial); with pride, engendered by ignorance and supported by fraud." Maillard also cites "How does your copperosity sagaciate this morning?" as a typical Texas greeting.

Make of it what you will. Myself, I've got a bunch of people coming this afternoon for a traditional Thanksgiving dinner on a non-traditional day, and I need to go get the neo-turkey into the post-thanksgiving oven.

[Update: now that the turkey is stuffed and in the oven, and other preparations are well underway, I need to add that I don't subscribe to Maillard's description as an accurate characterization of Texans, whether in 1840 or 2003, and especially not of my wife.]

November 28, 2003

Deep in the Hawt of Texath

A piece in today's NY Times on the etiology of the Texas twang was sounding pretty reasonable for the genre, until I came on the following:

The opposite syndrome, known as r-lessness, which renders "four" as "foah" in Texas and elsewhere, is easier to trace, Dr. Bailey said. In the early days of the republic, plantation owners sent their children to England for schooling. "They came back without the `r,' " he said. "The parents were saying, listen to this, this is something we have to have, so we'll all become r-less," he said. The craze went down the East Coast from Boston to Virginia (skipping Philadelphia, for some reason) and migrating selectively around the country.

That would be Guy Bailey, a linguistics professor at the University of Texas at San Antonio, who sounds here as if he's ignorant of the foundational research by Kurath and McDavid and others that established the connection between American dialect features and patterns of early settlement -- or maybe he's just unwilling to let go of a good tale.

The idea that American r-lessness arose because rich families sent their children to England for schooling is pure nonsense, of course -- there's no evidence that this was a widespread practice in either New England or the South, or for that matter for any r-lessness craze in American history. And in any case, that sort of story isn't required to explain r-lessness, given both the history of settlement and the role of autochthonous changes, as elaborated by Labov and many others.

But people seem to enjoy these anecdotes about the origins of linguistic features, like the classic story of the lisping King of Spain. That testifies to the popular tendency to think of language as a superficial social practice that changes in response to the sway of fashion, the same assumption that makes it easy to believe that systematic syntactic and phonological changes arise out of mere carelessness or affectation. The Times reporter couldn't have known any better, of course. But what was Bailey thinking of?

Talking seals and singing dogs

I don't normally read the Guardian, so I missed this Nov. 4 story about how Tecumseh Fitch is spending a sabbatical at St. Andrew's University trying to teach seals to talk. I'm 100% in favor of this effort -- more talking seals would be a step in the right direction, in my unsolicited opinion.

Despite my positive emotional response to talking animals, I've never taught an animal to speak, not even a parrot or a mynah. It's definitely one of those things about my life I would regret, if the question came up. I once taught a dog to sing, though.

Well, I'm exaggerating. A disinterested observer might conclude that it was the dog who taught me to sing. Here's the true story.

In the summer of 2000, I was dog-sitting for Rich and Sally Thomason at their cabin in rural Montana. Once a week, I had to drive an hour to the nearest supermarket to do the shopping, and of course Kwala would come with me.

When I played music in the car, I discovered that at certain points, Kwala would howl along. Her favorites were the soulful climaxes of country-western ballads and the tutti passages of Mozart orchestral works. She seemed to me to be entraining the timing of her howls to the rhythm of the music, and even sometimes matching pitches, but we humans tend to hear parallel sequences of complex sounds as being more correlated than they are, so I wasn't sure.

I found that Kwala would sing much more reliably if I sang too, even in works that did not interest her in themselves. Scientific motives aside, I enjoyed the experience. I have to confess that I am not much of a singer, and so I was happy to find that Kwala appreciated my efforts. She liked to sit behind me and rest her head on my shoulder, next to the open window, while we sang together along with the radio or a CD.

We got some very strange looks from a Suburban full of elderly fishermen, one hot July afternoon, when we pulled into the parking lot of the Bigfork IGA belting out "non più andrai".

I convinced myself that Kwala was definitely coordinating her vocalizations with mine, though I made no attempt to document this scientifically. Think of how many other mute inglorious canine Pavarottis may be out there!

For those who are more visually oriented, here are some pictures of Kwala that I put up on the web from Montana to reassure her distant owners that all was well. And courtesy of Prof. Hendler at Mindswap, here is today's application of the Universal Marketing Graphic (UMG), in this case illustrating the prospects for the development of talking seals:

While there has only been one talking seal so far, and he's dead, Tecumseh Fitch is on the case, and there are millions of seals out there to teach...

[Link to the Guardian story via mirabilis.ca].

November 27, 2003

Like, I care whether semantics are or is?

Mark Liberman quotes some remarks from a costume designer named William Ivey Long that include the clause "the semantics are confusing" and suggests in connection therewith that "Geoff Pullum will not be pleased to see that Mr. Long interpreted semantics as a plural count noun."

I think I can speak to this, what with me being Geoff Pullum and all, and I am here to tell you that you would be absolutely astonished to know just how little I care about whether some costume designer treated the morphologically pluralized lexeme semantics as a morphosyntactically plural count noun. I mean, it's not just a question of neither being pleased about Mr. Long's choice of verb form nor not pleased; we are talking about a deep and unbounded apathy here, a cosmically profound level of apathy down to which few people's refusal to give a monkey's fart ever descends. The depth of how much I deeply do not care about this would be impossible to overstate, though I will try. Why, just the other day I retired for a while to a fairly small room of my house and sat quietly reading there for, oh, a long time, without any thought of what suffixes dress choosers in New York were putting on verbs that had nouns like semantics as subject in a finite clause. I spend whole days sometimes not thinking about the verb agreement selection Mr. Long made -- indeed, not thinking about the inflectional decisions made by any parade costume designer anywhere. Let me try to explain further just how slender are the chances of my coming to care about this...

Oh, what's the point. People will always think I care about crap like subject-verb agreement. Let's face it, I'm a grammarian. No one is ever going to think I am anything but a boring old pedant. Not ever. No one realizes that I am actually a super fun wild and crazy guy, great in bed, sexy, witty, lively at parties, popular with children and animals. Even if people were to be shown a picture with parrots in the wild peacefully sitting on me they still wouldn't believe it. Sniff.

LA emancipates electronic components

Unless the Onion has captured CNN's website, this must be serious. I looked around for a similar initiative about male and female plug types -- talk about stereotyped interactions! -- but couldn't find one.

Allegation of "forced fermatic practices"

Verity Stob at The Register has a scoop about "US software and litigation giant Softwron Inc".

Defying a "blanket gagging injunction," The Register cites a rumor in the Usenet newsgroup sci.math.research to the effect that a patented Softwron number "and two other 'large' integers together ganged up on an unwilling smaller (but technically oversize) integer and forced it to indulge in Fermatic practices with them."

The article quotes Rock McDosh, founder and CEO of Softwron, as follows:

"We categorically state that no number protected by Softwron patent has been involved in any rumoured inappropriate behaviour; and in any case we do not accept that such behaviour is inappropriate, if it could be stated what it was. Nonetheless, if going forward it were generally known what it was, our number would still not be involved in whatever it is. Which it isn’t."

According to a quoted expert, "This kind of incident is highly embarrassing for Softwron right now, but I don’t think it will ever go to court. What you have to remember is that the US Government never ratified Fermat’s Law, which it views as being anti free trade."

At the end of the article, there are links to four other Stob stories on the patenting of numbers.

In this context I'd like to draw the reader's attention to Eben Moglen's article Anarchism Triumphant, which is a serious (though entertaining) meditation, from a lawyer's perspective, on the general problem of intellectual property rights in a world that "consists increasingly of nothing but large numbers (also known as bitstreams)".

Professor Moglen's article contains this memorable passage:

No one can tell, simply by looking at a number that is 100 million digits long, whether that number is subject to patent, copyright, or trade secret protection, or indeed whether it is "owned" by anyone at all. So the legal system we have ... is compelled to treat indistinguishable things in unlike ways.

Now, in my role as a legal historian concerned with the secular (that is, very long term) development of legal thought, I claim that legal regimes based on sharp but unpredictable distinctions among similar objects are radically unstable. They fall apart over time because every instance of the rules' application is an invitation to at least one side to claim that instead of fitting in ideal category A the particular object in dispute should be deemed to fit instead in category B, where the rules will be more favorable to the party making the claim. This game - about whether a typewriter should be deemed a musical instrument for purposes of railway rate regulation, or whether a steam shovel is a motor vehicle - is the frequent stuff of legal ingenuity. But when the conventionally-approved legal categories require judges to distinguish among the identical, the game is infinitely lengthy, infinitely costly, and almost infinitely offensive to the unbiased bystander.

I'm not sure that Prof. Moglen is right about this -- large numbers seem at least as distinguishable to me as large collections of elementary particles are -- but you should read the whole thing.

Six nouns deep or more

It did cross my mind (I confess it) that Mark Liberman and Bill Poser might be making up their exotic-looking noun-noun-noun-noun-noun-noun compounds (Volume Feeding Management Success Formula Award and East-ward Communist-Party Lifestyle Consultation Center and so on); but yesterday I found that I was being required to write a letter of formal response to the Narrative[1] Evaluation[2] Student[3] Grievance[4] Hearing[5] Committee[6] on my campus. This was because of a couple of students who objected to the F grades I gave them after a winter[1] quarter[2] undergraduate[3] computer[4] science[5] course[6] assignment[7] plagiarism[8] incident[9]. They really are all around us, these compounds that are six nouns deep or more.

Incidentally, if you want to know how to work out how many different bracketings there are for a string of N nouns, the answer is given by the function f such that f(1) = 1 and, for each N > 1, f(N) is the sum, over every list of two or more positive integers that add up to N, of the product of the f(i) values for the integers i in that list.

For 2 this comes to 1, because the only list of positive integers that has more than one item and adds up to 2 is <1, 1>, and f(1) times f(1) = 1. For 3, the value of f comes out to 3, because there are 3 different lists of more than one positive integer that add up to 3: <1, 1, 1>, <1, 2>, and <2, 1>; taking the products of the f(i) for the integers i in each list gives f(1) times f(1) times f(1) = 1, f(1) times f(2) = 1, and f(2) times f(1) = 1, and summing the products gives 1 + 1 + 1 = 3. This corresponds to the fact that there are three bracketings for lifestyle consultation center: [lifestyle consultation center], [[lifestyle consultation] center], and [lifestyle [consultation center]].

To work out f(N) for N = 6, and thus the bracketings for volume feeding management success formula award or Narrative Evaluation Student Grievance Hearing Committee, just make a table of all the values of f for numbers from 1 up to 5; then make a list of all the lists of numbers that sum to 6; then take the value of f for each number in each list and write down those lists; then take the product of the numbers in each list and record those; and then sum all the products. This may take a while. In fact, for Americans it will entirely solve the problem of what to do with the long dull afternoons of the current four-day Thanksgiving holiday. Have a good one.
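For anyone who would rather not spend the afternoon on it, the recipe can be sketched in a few lines of Python. This is only an illustration of the definition as stated above, not a claim about the most efficient method; the helper name compositions is made up for the purpose.

```python
from functools import lru_cache
from math import prod


def compositions(n):
    """Yield every ordered tuple of positive integers that sums to n."""
    if n == 0:
        yield ()
        return
    for first in range(1, n + 1):
        for rest in compositions(n - first):
            yield (first,) + rest


@lru_cache(maxsize=None)
def f(n):
    """Number of bracketings of a string of n nouns, per the definition above."""
    if n == 1:
        return 1
    # Sum, over every list of two or more parts adding up to n,
    # of the product of f(i) for each part i in the list.
    return sum(
        prod(f(i) for i in parts)
        for parts in compositions(n)
        if len(parts) > 1
    )


print([f(n) for n in range(1, 7)])  # [1, 1, 3, 11, 45, 197]
```

On this definition a six-noun compound has 197 possible bracketings, because flat groupings of three or more nouns are counted alongside the binary ones.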

Same-sex Mrs. Santa: "the semantics are confusing"

Yesterday, the actor Harvey Fierstein announced in a New York Times Op-Ed piece that he would be riding in the Macy's Thanksgiving Parade dressed as Mrs. Santa Claus. The theme of the piece was same-sex marriage, and he wrote that "[i]f I really was Santa's life partner, you can believe that he would ask and I would tell about who has been naughty or nice on this issue." He closed by inviting readers to "remember to wave to me on my float. I'll be the man in the big red dress."

This apparently caused some controversy. After all, as Fierstein stressed in his opening, "Macy's Santa is the real deal." So I'm sure he expected to create some buzz by announcing that "tomorrow, to the delight of millions of little children (not to mention the Massachusetts Supreme Judicial Court), the Santa in New York's great parade will be half of a same-sex couple."

According to an article in this morning's paper, Macy's (the store that sponsors the parade) quickly intervened to announce that "Santa Claus would be on the final sleigh float, accompanied by Mrs. Claus, a woman. Mr. Fierstein would be on a separate float." Macy's statement also 'emphasized that Mr. Fierstein would be dressed not as Mrs. Claus but as "his beloved character Mrs. Edna Turnblad of the Broadway hit musical `Hairspray.' " '

But then, the NYT says, "the actor's costume designer said that Mrs. Edna Turnblad, as portrayed by Mr. Fierstein, would be dressed as Mrs. Claus."

The costume designer, William Ivey Long, did however specify that the interpretation should only go two layers deep, not three. In the words of the Times article "those viewing Mr. Fierstein's costume would be expected to suspend their disbelief and see only Mrs. Turnblad dressed as Mrs. Claus, not Mr. Fierstein dressed as Mrs. Turnblad dressed as Mrs. Claus."

Mr. Long achieved this remarkable precision of interpretation by means of "a Balenciaga swing coat worn over a floor-length pencil skirt with a stamped red velvet jacket with fake fur collar and cuffs topped with a white fake fur French beret," adding that "those are just words. The effect is, of course, insane."

Macy's then issued a second statement, agreeing that Fierstein would be appearing "in Edna's interpretation of Mrs. Claus ... As for Mrs. Claus herself, she will be appearing with Santa on Santa's sleigh ..."

As Mr. Long is quoted as saying, "the semantics are confusing."

Long is clearly using semantics in the ordinary language sense of "what things mean," and I've got no problem with that (not that it would matter if I did). I was taught that semantics is about meaning as something that sentences have, whereas pragmatics is about meaning as something that people do. However, the field seems to be increasingly divided about where to draw the line, and even whether there is a line worth drawing; and meanwhile the world at large has long since decided that the fancy word for "(analysis of) meaning" is "semantics". So be it.

But I did wonder about the metaphor underlying Mr. Long's comment. I guess that it's "clothes are words" or "outfits are sentences" or something like that. And in this case, everyone is pretty clearly focusing on "wearer meaning" rather than "outfit meaning" -- along with an interesting political mix-in, somehow cancelling the most basic level of interpretation.

Anyhow, the point that interests me is that such metaphors usually work in the direction of understanding something more abstract in terms of something more concrete, but this is the opposite. At least, it's the opposite if you think that signifiers are more abstract than clothes. I guess that means it's a theory, not a metaphor. Though maybe it's neither one, but just a piece of terminology that Mr. Long once learned in a class on the semiotics of culture ...

Another thing that seems upside down here is the partial explicit cancellation of an expected meaning. In the familiar cases, it's always the superimposed layers of interpretation that are explicitly cancelled: "I have some aces; in fact I have all of them." But here, what is explicitly cancelled is what seems most basic: we're told to see Edna as Mrs. Claus, not Harvey as Edna as Mrs. Claus. Clearly confusing, even if not clearly semantics.

There is probably a whole literature about the Gricean implicatures of clothing, cancelled or otherwise. No doubt I could find it via google, but I'll wait for some reader to tell me. I've read Anne Hollander's Sex and Suits, but its semiotic analysis is merely implicit, and Grice is not in the index.

[Note: Geoff Pullum will not be pleased to see that Mr. Long interpreted semantics as a plural count noun. At least I think Geoff won't be: maybe he'll charitably construe Mr. Long's comment as involving one of the usage patterns in which mass nouns can be pluralized: "the semantics of Harvey Fierstein's Mrs. Santa outfit" like "the wines of France". As Stephen Maturin would have put it, "let us not be pedantic, for all love."]

[Another note: contemplating this whole story, I have to ask "is this a great country or what?" And now, back to Thanksgiving preparations!]

November 26, 2003

The AI gnomes of Zurich

With respect to an earlier Language Log piece on the Great Ontology Debate, Yarden Katz has drawn my attention to an anti-Shirky posting by Drew McDermott on the www-rdf-rules mailing list. McDermott ends with a zinger:

It's annoying that Shirky indulges in the usual practice of blaming AI for every attempt by someone to tackle a very hard problem. The image, I suppose, is of AI gnomes huddled in Zurich plotting the next attempt to --- what? inflict hype on the world? AI tantalizes people all by itself; no gnomes are required. Researchers in the field try as hard as they can to work on narrow problems, with technical definitions. Reading papers by AI people can be a pretty boring experience. Nonetheless, journalists, military funding agencies, and recently the World-Wide Web Consortium, are routinely gripped by visions of what computers should be able to do with just a tiny advance beyond today's technology, and off we go again. Perhaps Mr. Shirky has a proposal for stopping such visions from sweeping through the population.

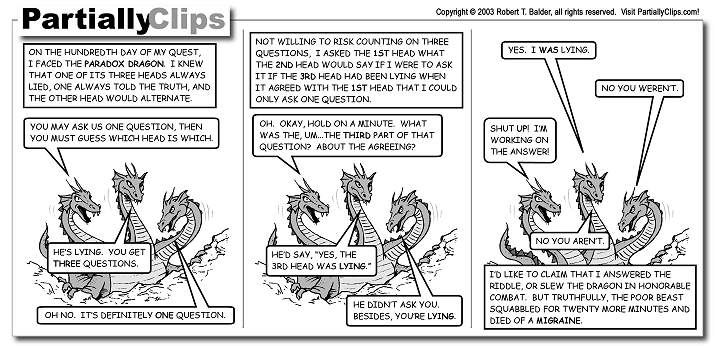

I like the image. It suggests an on-going feature, in which three low-level employees at GnomeNet GmbH gossip about their bosses' latest Fiendish Plot forthcoming product (this month: Mindswap!). A cartoon format would be best -- I can't draw, but then there's the Partially Clips approach...

As for the content, I don't think anyone (in this discussion) will admit to being opposed to vision. The thing is, some visions turn out to be the telephone or the automobile or the internet, while others turn out to be the Picturephone, the Personal Zeppelin™ or perhaps the Philosophical Language of John Wilkins. This is an argument for pluralism but against credulousness.

[Note: the Mindswap website led me to these slides for the keynote address On Beyond Ontology at last month's ISWC conference, which I commend to the reader. I was especially happy to see that the Universal Marketing Graphic (UMG) is still in use (slide #3, "Approaching a Knee in the Curve"). I first saw a version of this graph used for speech technology market projections back around 1977. In those days, my colleagues used to label the horizontal axis in calendar years and the vertical axis in billions of dollars. Then someone pointed out that it was annoying to have to re-do the graphic every year, and suggested that the horizontal axis should be relabeled something like "...", "last year", "this year", "next year", "...". Prof. Hendler (or perhaps the GnomeNet Marketing Department?) has generalized this further by removing the labels from both axes, so that anyone can now use the graph to illustrate an optimistic forecast about any aspect of the future of anything!]

Lazy mouths vs. lazy minds

Captain John Dunn, of the Shreveport LA police department, is quoted by CNN as attributing the failure of the speech recognition technology in their new PBX to "Southern drawl and what I call lazy mouth".

I hope that I don't need to explain that on the face of it, this is nonsense. Message to Captain Dunn: the fault is in your system's technology, not in your citizens' mouths.

The general prejudice against southern varieties of English includes stereotypes about stupidity and backwardness that come out strongly when the context is technological. The most egregious example of this that I've seen was Michael Lewis's reporting for Slate from the Microsoft anti-trust trial.

Lewis seems not to have had much to say about the actual content of the trial. Instead, he devoted most of his dispatches to making extended fun of the participants' appearances and accents. Microsoft's lead attorney, John Warden, got the lead-off spot:

"Warden is a natural heavy, a great Hogarthian ball of pink flesh with jowls that ripple over his white, starched shirt. I don't think I could have placed his overripe drawl without the help of a potted biography (which says he grew up in Evansville, Ind.), except to say that it is Southern. It is also loud; Warden prefers to lean into the microphone and imitate the Voice of God. In any case, it didn't take him long to prove that technology doesn't sound nearly as impressive when it is discussed in a booming hick drawl. As he boomed on about "Web sahts" and "Netscayup" and "the Innernet" and "mode ums" he made the whole of the modern world sound a little bit ridiculous."

A bit later, David Colburn of AOL was given the treatment:

"He has stooped shoulders; short, dark hair; a runaway 5 o'clock shadow; and the economy of motion of a highly skilled hit man. His deadpan North Jersey dialect simply reinforces the general picture that if he is not dangerous himself, he knows people who are."

It turned out that Colburn is actually from Milwaukee, but factual accuracy about individuals is not precisely the point of this kind of stereotyping, is it? In fact, it's precisely not the point.

So this leads me to wonder what a police captain in Bayonne NJ would say about why the speech recognition technology in their new PBX doesn't work: "Hey, it's our deadpan North Jersey dialect -- the system just freezes up and connects everybody to some club in Lodi."

[CNN story via cannylinguist]

November 25, 2003

Ever heard a Chomsky sentence?

Which auxiliary in a declarative clause is the one that must precede the subject in the corresponding closed interrogative? In example (1) it's the first auxiliary, as can be seen from the grammatical interrogative in (2). (The auxiliaries are underlined and subscripted for reference, and "__" appears where the auxiliary would have been if it weren't before the subject.)

(1) This is1 the unit you will2 be delivering to me.

(2) Is1 this __ the unit you will2 be delivering to me?

If you choose the other auxiliary, the result is badly ungrammatical:

(3) *Will2 this is1 the unit you __ be delivering to me?

But "the first auxiliary" isn't the right answer. And a very important point about the learning of grammar hangs on this. Let me explain.

In the declarative clause (4), it is not the first auxiliary that is placed before the subject to make the interrogative.

(4) The unit you will1 be delivering to me is2 similar to this one.

Using the first auxiliary would get you the disastrously ungrammatical (5).

(5) *Will1 the unit you __ be delivering to me is2 similar to this one?

Instead, it's the second auxiliary that you should choose. Putting that before the subject gets you the right result, namely (6).

(6) Is2 the unit you will1 be delivering to me __ similar to this one?

So the "first-auxiliary" rule is definitely wrong.

But how do we know this? How did we learn it? The correct rule is, as it happens, that it is whichever auxiliary is the one belonging to the main clause that must go before the subject. But how does a young learner ever find that out? What could convince a child who hit on the first-auxiliary rule that it is a mistake, only working accidentally in cases like (2) where the first auxiliary is the main clause auxiliary?
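The difference between the two candidate rules can be made concrete with a toy model. What follows is only a sketch under loud assumptions: the nested-list bracketing is supplied by hand, the names `FINITE_AUXILIARIES`, `front_first_auxiliary` and `front_main_clause_auxiliary` are hypothetical, and nothing here says how a child would arrive at the bracketing in the first place.

```python
# Hypothetical toy model: a clause is a list of words, and any phrase that
# contains an embedded clause is a nested list. The bracketing is hand-built.
FINITE_AUXILIARIES = {"is", "are", "will"}  # tiny illustrative list (assumption)


def flatten(tree):
    """Return the words of a nested clause in left-to-right order."""
    words = []
    for item in tree:
        if isinstance(item, list):
            words.extend(flatten(item))
        else:
            words.append(item)
    return words


def front_first_auxiliary(tree):
    """Front whichever auxiliary comes first in the word string."""
    words = flatten(tree)
    i = next(k for k, w in enumerate(words) if w in FINITE_AUXILIARIES)
    return " ".join([words[i]] + words[:i] + words[i + 1:])


def front_main_clause_auxiliary(tree):
    """Front the auxiliary that sits at the top level, i.e. in the main clause."""
    i = next(k for k, item in enumerate(tree)
             if isinstance(item, str) and item in FINITE_AUXILIARIES)
    return " ".join([tree[i]] + flatten(tree[:i] + tree[i + 1:]))


# Examples (1) and (4) from the text, bracketed by hand.
DECLARATIVE_1 = ["this", "is",
                 ["the", "unit", ["you", "will", "be", "delivering", "to", "me"]]]
DECLARATIVE_4 = [["the", "unit", ["you", "will", "be", "delivering", "to", "me"]],
                 "is", "similar", "to", "this", "one"]

if __name__ == "__main__":
    for tree in (DECLARATIVE_1, DECLARATIVE_4):
        print("first-auxiliary rule:      ", front_first_auxiliary(tree))
        print("main-clause-auxiliary rule:", front_main_clause_auxiliary(tree))
```

On (1) the two functions agree, which is the accidental success described above; on (4) the first-auxiliary function produces the word string of (5), while the main-clause function produces that of (6).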

Well, we could learn that the first-auxiliary rule was wrong if we heard an example like (6). So for anyone who thinks we learn from the example of our parents and peers, it becomes an important question whether we ever do hear such examples.

Noam Chomsky does not believe that we learn most of our language from examples that we hear. He thinks much of the structure of human language is built into us at birth in some way ("innate"). And Chomsky has asserted firmly in numerous publications that we couldn't learn that the first-auxiliary rule was wrong. In one statement of the case he asserted that "A person could go through much or all of his life without ever having been exposed to relevant evidence" of the sort that (6) represents (paper and discussions recorded in Language and Learning: The Debate Between Jean Piaget and Noam Chomsky, ed. by Massimo Piattelli-Palmarini, Harvard University Press, Cambridge MA, 1980, p.40). However, he gave not one whit of empirical evidence supporting this confident assertion.

For convenience, let me refer to sentences with the property that (6) exhibits as Chomsky-sentences. Barbara Scholz and I have pointed out that it is not hard to find Chomsky-sentences in any text that one searches with any care, from newspaper prose to Oscar Wilde plays to Mork and Mindy scripts (see our paper `Empirical assessment of stimulus poverty arguments', The Linguistic Review 19 [2002], 9-50). But do they occur in spontaneous speech? Geoffrey Sampson, who believes people do learn purely from the evidence of hearing other people speak, suggests (in "Exploring the richness of the stimulus", The Linguistic Review 19 [2002], 73-104) that they don't -- and he thinks it is also the case that people never learn to produce Chomsky-sentences in speech. That is, he proposes that the rule about making interrogatives by placing the auxiliary before the subject is to some extent a rule of written English rather than spoken. (He even encountered, just once, a woman who attempted a Chomsky-sentence in spontaneous conversation, and she got it completely wrong: she was attempting to say Is what I'm doing worthwhile?, but what came out of her mouth was *Am what I doing is worthwhile?, completely ungrammatical.)

Whether Chomsky-sentences occur in spoken English is a real bone of contention, therefore. That is why I jumped as if stung by a bee when I was listening to the BBC World Service on December 7, 2001, at about 4:30 p.m. GMT and I heard a business reporter doing an unscripted interview with a Swissair executive say:

(7) How radical are2 the changes you're1 having to make __?

That's a Chomsky-sentence. In declarative analogs like The changes you're1 having to make are2 so radical, the auxiliary of the main clause, are, is the one that has to be put up front before the subject (right after the fronted interrogative phrase how radical which begins the whole sentence). So much for the claim that you could live your whole life without hearing a Chomsky-sentence.

So are they common? Well, on February 2, 2002, I was listening to the BBC again, and I heard an interviewer doing an unscripted interview by satellite phone with a yacht race contestant, and the interviewer said:

(8) How sophisticated is2 the computer equipment you've1 got on board __?

That's another Chomsky-sentence in spontaneous speech. It raises the issue of whether perhaps both Chomsky and Sampson are wrong. Both my examples are from the BBC, and both are how questions; but how many more Chomsky-sentences are going past our ears all the time? And how many would it take to settle the question of whether it was possible for children to learn which auxiliary to front simply from examples of what they had heard? I have no idea. But the sentences in question don't have to be long and cumbersome like the ones above. The shortest Chomsky-sentence I've been able to construct is only four syllables:

(9) Is2 what's1 left __ mine?

Ever heard someone say that on seeing that there's just two slices of pizza left in the box? I have a feeling I may actually have said it myself on occasion. But I don't know.

It's actually scientifically important whether Chomsky sentences turn up in everyday speech, and if they do, how common they are. Keep your ears open, and make notes. I would love to see any accurately transcribed examples that you hear, written down with date and details of the speaker, and preferably witnessed independently by a third person who was there. You could send the examples to me by email. My login name is pullum and ucsc.edu is the domain.

(Forgive me for not including a mail-to link, but it would immediately be seized upon by foraging spambots who would send me unwanted messages about Viagra and toner cartridges.) This post was edited on Wed 26 Nov 2003 at about 09:45 PST. Among other things, the word "not" was invisible for about twelve hours in the sentence "Noam Chomsky does not believe that we learn most of our language from examples that we hear" -- because of a formatting error, not a belief error. That's a rather serious alteration in sense. Apologies. --GKP

At least he didn't answer

OK, I can't blame this on the paracingulate cortex.

No, maybe I can. This is a story about someone's cell phone going off inside his (closed) coffin at a remembrance service. It's said that "[s]ome of the relatives were so shocked they ran into the street."

Why? If the ringing phone had belonged to one of the live attendees, the others would have been annoyed, but not horrified to the point of running out of the building. But in this case, they found themselves starting to read the mind of a dead man, which is very creepy.

[Source: transblawg]

[Update 12/1/2003: this morning's BBC World Service news program, at the end of a discussion of the new British law against using mobile phones while driving, cited a similar story as a bit of colorful trivia -- the most inappropriate place ever heard of for a mobile phone call, or something like that. However, the reporter introduced the event as happening "in Israel, actually." I couldn't find any indication (via google news) of such an event being reported from Israel. Was this (a) a simple mistake?, (b) an independent story that hasn't made it into google's index for some reason?, (c) the leading edge of an urban legend, placed in Israel because for the BBC, that is the default location for anything unpleasant?]

Understanding Complex Nominals

When I lived in Osaka I used to walk by a place whose sign identified it as the:

Higashi-ku Kyoosantoo Seikatsu Soodan Sentaa

East-ward Communist-Party Lifestyle Consultation Center

I puzzled for months as to what this might be. I was pretty sure that it was a center run by the Communist party in the East Ward for consultation about seikatsu, which means something like "way of life, lifestyle, livelihood". It didn't seem likely, for instance, that it was a center for consultation about the seikatsu of the East Ward Communist Party. But what sort of consultation about seikatsu might this be? Did the Communist party propose to help me decide whether to take up tennis? I eventually asked a friend who told me that it dealt with problems such as unemployment, marital discord, and alcoholism.

Incidentally, of the ten morphemes in this phrase, only one, "east", is native to Japanese. /sentaa/ is borrowed from English. All of the rest are loans from Chinese.

Querkopf Von Klubstick, Grammarian

Here is a little something that I found in the Complete Poetical Works of Samuel Taylor Coleridge.

I find it interesting that even heavy doses of laudanum and neo-Platonism couldn't reconcile Coleridge to (the stylistic extremes of) continental philosophy. It would be amusing to channel his appreciation of Jacques Derrida, for example.

In reading Coleridge's biography, I learned that he was apparently the inventor of the word selfless, though the OED's first citation is not until 1825, ten years after the date of this poem.

The following burlesque on the Fichtean Egoismus may, perhaps, be amusing to the few who have studied the system, and to those who are unacquainted with it, may convey as tolerable a likeness of Fichte's idealism as can be expected from an avowed caricature. [S. T. C.]

The Categorical Imperative, or the annunciation of the New Teutonic God, EGOENKAIPAN: a dithyrambic Ode, by Querkopf Von Klubstick, Grammarian, and Subrector in Gymnasio. ...

Eu! Dei vices gerens, ipse Divus,

(Speak English, Friend!) the God Imperativus,

Here on this market-cross aloud I cry:

'I, I, I! I itself I!

The form and the substance, the what and the why,

The when and the where, and the low and the high,

The inside and outside, the earth and the sky,

I, you, and he, and he, you and I,

All souls and all bodies are I itself I!

All I itself I!

(Fools! a truce with this starting!)

All my I! all my I!

He's a heretic dog who but adds Betty Martin!'

Thus cried the God with high imperial tone:

In robe of stiffest state, that scoff'd at beauty,

A pronoun-verb imperative he shone---

Then substantive and plural-singular grown,

He thus spake on:---'Behold in I alone

(For Ethics boast a syntax of their own)

Or if in ye, yet as I doth depute ye,

In O! I, you, the vocative of duty!

I of the world's whole Lexicon the root!

Of the whole universe of touch, sound, sight,

The genitive and ablative to boot:

The accusative of wrong, the nom'native of right,

And in all cases the case absolute!

Self-construed, I all other moods decline:

Imperative, from nothing we derive us;

Yet as a super-postulate of mine,

Unconstrued antecedence I assign,

To X Y Z, the God Infinitivus!'

1815

Parsers that count

A month ago, I cited the difficulty of parsing complex nominals like the one found on a plaque in a New Jersey steakhouse: "Volume Feeding Management Success Formula Award". We're talking about sequences of nouns (with adjectives mixed in as well), and the problem is that these strings mostly lack the structural constraints that parsers traditionally rely on.

When you (as a person or a parser) see a sequence like "A lapse in surveillance led to the looting" (from this morning's New York Times, more or less), you don't necessarily need to figure out what it means or even pay much attention to what the words are: "A NOUN in NOUN VERBED to the NOUN" has a, like, predictable structure in English, however you fill in the details. But "NOUN NOUN NOUN NOUN NOUN NOUN" is like a smooth, seamless block -- you (or the parser) can carve anything you please out of that.

One traditional solution is to look at the meaning. Why is it "[stone [traffic barrier]]" rather than "[[stone traffic] barrier]"? Well, it's because traffic barriers made of stone make easy sense in contemporary life, while barriers for stone traffic evoke some kind of science-fiction scenario. The practitioners of classical AI figured out how to do this kind of analysis for what some called "limited domains", and others called "toy problems". But this whole approach has stalled, because it's hard.

There's another way, though.

Here's a set of simple illustrative examples, taken from work in a local project on information extraction from biomedical text. (These examples come from Medline). Each of the four possible 3-element complex nominal sequences (with two nouns or adjectives preceding a noun) is exemplified in each of the two possible structures (one with the two leftward words grouped, the other with the two rightward words grouped).

Structure | Example                         | count(A B) | count(B C)
[NN]N     | sickle cell anemia              |      10561 |       2422
N[NN]     | rat bile duct                   |        203 |      22366
[NA]N     | information theoretic criterion |        112 |          5
N[AN]     | monkey temporal lobe            |         16 |      10154
[AN]N     | giant cell tumour               |       7272 |       1345
A[NN]     | cellular drug transport         |        262 |        746
[AA]N     | small intestinal activity       |       8723 |        120
A[AN]     | inadequate topical cooling      |          4 |        195

And the numbers? The numbers are just counts of how often each adjacent pair of words occurs in (our local version of) the Medline corpus (which has about a billion words of text overall). Thus the sequence "sickle cell" occurs 10,561 times, while the sequence "cell anemia" occurs 2,422 times.

Most of the time, in a 3-element complex nominal "A B C", you can parse the phrase correctly just by answering the question: which is commoner in a billion words of text, "A B" or "B C"?

In a crude test of 64 such sequences from Medline (8 of each type in the table above), this method worked about 88% of the time.
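The counting heuristic just described can be sketched in a few lines of Python. This is an illustration, not the project's actual code: the counts are simply copied from the table above, the function name `bracket` is hypothetical, and sending ties to the right-branching analysis is my own assumption.

```python
# Adjacent-pair counts copied from the table above (local Medline counts).
# Each entry: (A, B, C, count of "A B", count of "B C", branching given in the table).
EXAMPLES = [
    ("sickle", "cell", "anemia", 10561, 2422, "left"),
    ("rat", "bile", "duct", 203, 22366, "right"),
    ("information", "theoretic", "criterion", 112, 5, "left"),
    ("monkey", "temporal", "lobe", 16, 10154, "right"),
    ("giant", "cell", "tumour", 7272, 1345, "left"),
    ("cellular", "drug", "transport", 262, 746, "right"),
    ("small", "intestinal", "activity", 8723, 120, "left"),
    ("inadequate", "topical", "cooling", 4, 195, "right"),
]


def bracket(a, b, c, count_ab, count_bc):
    """Group the commoner adjacent pair first.

    Ties go to the right-branching analysis (an assumption, not from the text).
    """
    if count_ab > count_bc:
        return "left", f"[[{a} {b}] {c}]"
    return "right", f"[{a} [{b} {c}]]"


def run(examples=EXAMPLES):
    """Return (bracketed string, whether it matches the table) for each example."""
    results = []
    for a, b, c, count_ab, count_bc, expected in examples:
        branching, tree = bracket(a, b, c, count_ab, count_bc)
        results.append((tree, branching == expected))
    return results


if __name__ == "__main__":
    for tree, matches in run():
        print(tree, "ok" if matches else "MISMATCH")
```

The eight rows of the table are hand-picked illustrations, so agreement on them says nothing about overall accuracy; the figure for the 64-sequence test is the one reported above.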

Actually, this is an underestimate of the performance of such approaches. In the first place, the different sequence types are not at all equally frequent, nor are the parsing outcomes equally likely for a given sequence type. Thus in the Penn Treebank WSJ corpus (a thousand times smaller than Medline, and much less infested with complex nominals, but still...) there are 10,049 3-element complex nominals, which are about 70% right-branching ([A [B C]]) vs. 30% left-branching ([[A B] C]). More information about the part-of-speech sequence or the particular words involved gives additional leverage. And other counts (such as the frequency of the individual words, of the pattern "A * C", etc.) also may help. There are also more sophisticated statistics besides raw bigram frequency (though in this case the standard ones, such as ChiSq, mutual information, etc., work slightly worse than raw counts do).

Yogi Berra said that "sometimes you can observe a lot just by watching". The point here is that sometimes you can analyze a lot just by counting. And while understanding is hard, counting is easy.

November 24, 2003

Conversational game theory: the cartoon version

Deadlock: a funny exploration of why the logic of communication is hard, illustrating the thought processes of an interpersonally-sensitive Asperger's sufferer adult male human.

Like other actions, communicative choices have consequences. As shown in this strip, it's really hard to work out what choice leads to the best outcome a few moves down the road, especially when the other participants may not even be playing the same game.

This reminds me of a visit to SRI in the mid-70's, where I saw a demo of a principled conversational system. As I recall, my host typed in his conversational opening, and then we went to lunch while their KL10 churned away, trying to prove a theorem about what the optimal response to "hello" might be. I think we got back well before the machine had calculated its next move. This experience left me with a completely illogical feeling that the machine, clueless and ungrounded as it was, still somehow really meant what it said, purely by virtue of the effort that it appeared to put into choosing its responses. But I also acquired another (more rational?) conviction: as a metaphor (or a system design) for on-line control of conversation, it would be better to pick a stochastic finite automaton instead of a theorem-prover. It might not do the right thing, but it would do something. A still more plausible conclusion, however, might be that no one had (has?) yet invented a formalism that does a good job of modeling human communication.

[via johnny logic].

blog wins 2003 ADS "word most likely to succeed" award

Margaret Marks at Transblawg points out a site where Swiss people can vote for "Wort des Jahres (word of the year) and Unwort des Jahres (antiword of the year)". The site archive has results back to 1977 (for Germany), when #2 (of six Wort des Jahres winners) was "Terrorismus, Terrorist". Starting in 2002, the program appears to have spread to Austria and Liechtenstein, and now to Switzerland. Dr. Marks explains Liechtenstein's intriguingly petty #3 winner for 2002, Senfverbot "mustard ban". Mark Twain would have appreciated the 1999 Deutschland tenth-place winner Rindfleischetikettierungsüberwachungs-aufgabenübertragungsgesetz.

The American Dialect Society has a "Words of the Year" contest. Unfortunately it seems only to go back to 1990, so we can't compare lexicographic terrorism awareness across the Atlantic in 1977.

But like the Academy Awards, the ADS contest has categories, of which the most interesting to me is "most likely to succeed". Winners in this category since 1990 have been notebook PC, rollerblade, snail mail, quotative "like", [not awarded?], world wide web, drive-by, DVD, e- , dot-com, muggle, 9-11, 9-11 [winner two years in a row?!], and [in 2003] blog. Take that, John Dvorak!

The ADS vote was tallied back in January, so it is not exactly a news flash, but I missed it at the time :-). A search for "American Dialect Society word of the year" at technorati.com produces only an error page telling me that the search result "does not appear to have any style information associated with it." Indeed, alas...

[Update 12/1/2003: Grant Barrett, the webmaster for the American Dialect Society, has brought to my attention the fact that I misread their webpage: 9-11 won just once, in the January 2002 vote for "word most likely to succeed" from the year 2001. The list given above becomes correct, I think, if the second occurrence of 9-11 is deleted.]

November 23, 2003

Memo to self

Before activating time machine, memorize recipe for gunpowder and re-read A Connecticut Yankee in King Arthur's Court.

It has wrinkled feet

English speakers, or at least English-speaking linguists, are thoroughly used to the idea of loanwords. English has many thousands of words borrowed from French and Latin, and sizable numbers from other languages; and many or most other languages also do a lot of borrowing. So it's a surprise to find that some languages have few loanwords.

A `no-borrowing' strategy is shared by many Native American languages, at least as far as borrowings from the colonial languages English and French are concerned. Montana Salish is typical of languages of the US Northwest in this respect: it has virtually no English loanwords and only a handful from French (most of which it probably got from other Native languages, not directly from French).

So what do Montana Salish speakers do when they acquire something new from the dominant Anglo culture? What they do is invent words for new things, using materials that are already present in their own language. My favorite example is the word for `automobile', which is p'ip'uyshn -- literally, `it has wrinkled feet', a word that was obviously inspired by the appearance of tire tracks and/or of the tires themselves. And this word is not peculiar to Montana Salish. The same basic formation is found in two Salishan languages that are closely related to Montana Salish: in Coeur d'Alene the word literally means `thing with wrinkled paws' (according to Dale Sloat, via Julia Falk), and Moses-Columbia has k-p'ip'uyxn for `automobile', beside an English loanword, 7atmupil (Dictionary of the Moses-Columbia language, compiled by M. Dale Kinkade, 1981).

There's a puzzle here: did speakers of these three languages independently come up with the same metaphor to designate `automobile', or was the word invented in one language and then borrowed (with appropriate phonetic changes according to a borrowing routine) into one or both of the others? I wish I had an answer to this question, but I don't.

Trees spring eternal

Trees can be trouble. Over the past month, this blog has seen issues with hypothesized tree structures in semantics (ontology), pragmatics (discourse structure) and syntax. We haven't discussed questions about tree-asserting hypotheses in morphology, phonology and phonetics, but believe me, they're out there.

It seems to be natural for human analytic efforts to produce tree-structured ideas, typically as a result of recursive subdivision of phenomena, whether subdivision of a string of tokens or of a set of entity types. For some naturally-occurring time series (linguistic and otherwise) and for natural kinds of plants and animals, this really works -- tree theories can be an efficient and effective way to organize rational investigation, whether or not they are scientifically valid. This record of success, I think, has reinforced the "things are trees" idea over many millennia of hominid inquiry into nature. A believer in evolutionary psychology might even suppose that our brains have learned to think that things are trees, genetically as well as memetically.

Of course, scientists often find that things are not trees, or at least not exactly. However, non-tree-structured hypotheses are not intrinsically any more likely to be correct. There's a fascinating case in the history of biology, which I learned about some years ago from one of the best books that I ever found in a remainders bin.

Linnaean taxonomy, which classifies all living things into a hierarchy, was developed in the 18th century, but its explanation in terms of Darwin's "descent with modification" did not emerge until more than a century later. In fact, as early 19th-century biologists delved further into the structures and lifecycles of invertebrates from around the world, several of them thought that they saw empirical evidence for non-tree-like patterns of relationship among such creatures. One of these was Thomas Henry Huxley, later famous as a promoter of Darwin's theories.

Darwin went off "botanizing" on the Beagle from 1831-1836 and came back with the evolutionary tree -- descent with modification -- as a new semantics for the Linnaean syntax. But he didn't publish his ideas until 1859. Meanwhile, Huxley went off botanizing on the Rattlesnake from 1846-1850 and returned with a theory of circles of affinity inter-related by parallel cross-links of analogy, as exemplified in this diagram

reproduced from Mary Winsor's fascinating book Starfish, Jellyfish and the Order of Life.

I get the impression that what appealed to Huxley about this "circular theory" (which was inspired by the earlier "Quinary theory" of William Sharp MacLeay) was precisely that it was so different from the common-sense hierarchy of natural kinds, and therefore looked like a real discovery. As a linguist, I'm familiar with the perspective that values "tension between common sense and science."

But Huxley also wrote that

The Circular System appears to me to stand in the same relationship to the true theory of animal form as Keplers Laws to the fundamental doctrine of astronomy--The generalization of the Circular system are for the most part, true, but they are empirical, not ultimate laws---

That animal forms may be naturally arranged in circles is true -- & that the planets move in ellipses is true -- but the laws of centripetal and centrifugal forces give that explanation of the latter law which is wanting for the former. The laws of the similarity and variation of development of Animal form are yet required to explain the circular theory -- they are the true centripetal and centrifugal forces in Zoology.

(Newton's account of Kepler's Laws depends on the single force of gravity, not paired centripetal and centrifugal "fictitious forces", doesn't it? .. but anyhow,) Huxley has the idea that a hypothetical pattern in nature should not simply be accepted (on aesthetic grounds, or as a glimpse of the mind of God), but rather should be given a causal explanation in terms of the dynamics of some simple process. And when he saw that "descent with modification" (with a bunch of other assumptions!) could provide exactly such an explanation for a tree-structured taxonomy of biological species, Huxley immediately abandoned circles for trees.

In linguistics, we can find some similarly fundamental causal arguments for tree structures in terms of the dynamics of basic processes: recursive concatenation in composition; stack discipline in processing; descent with modification in history. But just as in the case of natural kinds in biology, the argument from these basic processes to the structure of real-world phenomena requires lots of extra assumptions. And may be wrong.

November 22, 2003

Language fu, the cartoon version

Well, just one more. I like the subtle code-switching.

Dynamic and epistemic logic: the cartoon version

With serious but fun stuff here, for the brave and/or curious.

OK, I'll stop now.

"Whole Language", the cartoon version

Fair enough. Also funny.

More on Whole Language, if you care (and you should!). Warning: not funny.

like is, like, not really like if you will

Geoff Pullum argues that val-speak "like" is like old-fogey "if you will." His case is cogent as well as entertaining.

But based on the examples and analysis in Muffy Siegel's lovely paper "Like: The Discourse Particle and Semantics" (J. of Semantics 19(1), Feb. 2002), I want to suggest that Geoff is, like, not completely right.

Muffy supports and extends the definition of (this use of) like due to Schourup (1985): "like is used to express a possible unspecified minor nonequivalence of what is said and what is meant". And I agree with Geoff that there are several widely-used formal-register expressions with more or less the same function: "if you will", "as it were", "in some sense", etc.

So far so good. However, Muffy's article also supports two differences between "like" and "if you will".

First, some of her examples (taken from taped interviews with Philadelphia-area high school students) suggest a quantitative difference:

She isn't, like, really crazy or anything, but her and her, like, five buddies did, like, paint their hair a really fake-looking, like, purple color.

They're, like, representatives of their whole, like, clan, but they don't take it, like, really seriously, especially, like, during planting season.

In these two examples, 8 discourse-particle likes get stuck in among a mere 38 non-like words -- roughly one like every 5 words. It's hard to translate this into fogey-speak:

?They're representatives, if you will, of their whole clan, if you will, but they don't take it really seriously, if you will, especially during planting season, if you will.

Whatever the whining old fogeys may say, I think it's this tic-tock frequency that bothers them. I once had a colleague who used the word literally similarly often: "Now, literally, look at the first equation, where, literally, the odd terms of the expansion will, literally, cancel out..." It (was one of several things about this guy that) drove me nuts. If all middle-aged telecommunications engineers started talking that way, I'd get in line behind William Safire to slam them for it. (By contrast, the overuse of like by young Americans seems quaint and charming to me, probably because I like the speakers better.)
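The tally quoted above for the two interview excerpts is easy to check mechanically. Here is a rough sketch, assuming that words are whatever is separated by whitespace and that every token "like" in these two sentences is the discourse particle (true here, but not in general); the function name `tally` is hypothetical.

```python
import string

# The two excerpts quoted above from Siegel's paper.
SENTENCES = [
    "She isn't, like, really crazy or anything, but her and her, like, "
    "five buddies did, like, paint their hair a really fake-looking, like, "
    "purple color.",
    "They're, like, representatives of their whole, like, clan, but they "
    "don't take it, like, really seriously, especially, like, during "
    "planting season.",
]


def tally(sentences):
    """Return (number of 'like' tokens, number of all other tokens)."""
    likes = 0
    others = 0
    for sentence in sentences:
        for token in sentence.split():
            # Strip surrounding punctuation; internal apostrophes and hyphens stay.
            word = token.strip(string.punctuation).lower()
            if word == "like":
                likes += 1
            else:
                others += 1
    return likes, others


if __name__ == "__main__":
    likes, others = tally(SENTENCES)
    print(likes, others, others / likes)
```

A real study would need a way to separate the particle from the verb and the preposition, which this whitespace count does not attempt.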

There's a second difference between like and if you will to be found in Muffy's paper. She documents a number of semantic effects of like, such as weakening strong determiners so as to make them compatible with existential there:

(38) a. *There's every book under the bed.

b. There's, like, every book under the bed. (Observed: Speaker paraphrased this as 'There are a great many books under the bed, or the ratio of books under the bed to books in the rest of the house is relatively high.')

(39) a. *There's the school bully on the bus.

b. There's, like, the school bully on the bus. (Observed: Speaker paraphrased this as 'There is someone so rough and domineering that she very likely could, with some accuracy, be called the school bully; that person is on the bus.')

Try this in fogey-speak: "there's every book under the bed, if you will". Like, I don't think so.

No, like is definitely a more powerful (and useful) expression than if you will. Perhaps that's why some people use it, like, too much?

[Note: Muffy Siegel's paper doesn't discuss these specific alleged differences (between "like" and other hedges), which were inspired by her analysis but are not her fault.]

[Update 11/23/2003: Maggie Balistreri's Evasion-English Dictionary provides some amusing and relevant entries for like, though lexicographers might quibble about the sense divisions as well as the assignment of examples to senses. Well, anyhow, if I were a lexicographer, I would :-)... And here she is being interviewed on NPR, expressing the perspective that Geoff Pullum complained (like, validly) about.]

Bad Writing and Lord Lytton

Mark Liberman's post on bad writing made me think of the Bulwer-Lytton Fiction Contest. Edward Bulwer-Lytton seems always to come to attention these days as the epitome of bad writers, the author of the infamous passage:

It was a dark and stormy night; the rain fell in torrents - except at occasional intervals, when it was checked by a violent gust of wind which swept up the streets (for it is in London that our scene lies), rattling along the housetops, and fiercely agitating the scanty flame of the lamps that struggled against the darkness. (Paul Clifford, 1830)

Personally, I don't think that this passage is so bad - I like it. It seems to me that people have lost the ability to appreciate complex style. But in any case, as a student of the native languages of British Columbia, I'd like to point out that Lord Lytton played a more important role in history. In 1858 and 1859 he served as Colonial Secretary in Lord Derby's government, in which capacity he gave instructions to Sir James Douglas, governor of the colonies of Vancouver Island and British Columbia, regarding the conduct of relations with the indigenous peoples of the two colonies. (The correspondence between Lord Lytton and Sir James may be found in British Columbia Papers Connected with the Indian Land Question 1850-1875 (Victoria: The Government Printer, 1875).)

These instructions have figured in several ways in recent litigation over aboriginal rights in British Columbia. On the one hand, the Supreme Court of Canada in Calder v. Attorney-General of British Columbia (7 C.N.L.C. 91 (SCC)) cited his letters as evidence of delegation of power by the Crown to the colonial government (a position criticized by Bruce Clark in his important but controversial book Native Liberty, Crown Sovereignty at p. 64). On the other hand, his instructions give rather clear evidence that the Crown recognized aboriginal title and took the position that it could only be extinguished with the formal consent of the Indians. This evidence is of some importance since there has been long-standing controversy as to whether the Royal Proclamation of 1763 applied to British Columbia.

The colonization of British Columbia led rapidly to the loss of the indigenous languages. Three languages are already extinct; almost all of the remaining 33 are dying.

It's like, so unfair

Why are the old fogeys and usage whiners of the world so upset about the epistemic-hedging use of like, as in She's, like, so cool? The old fogeys use equivalent devices themselves, all the time. An extremely common one is "if you will". Semantically it does exactly what like does. Let me explain.

Look at these synonymous pairs:

The evidence I think will show that of the total amount of money raised from private sources, and from profits or increases in markup, if you will, on the sale of U.S. weapons to Iran, that a relatively small percentage of that money went to the Contras.

The evidence I think will show that of the total amount of money raised from private sources, and from profits or increases in, like, markup on the sale of U.S. weapons to Iran, that a relatively small percentage of that money went to the Contras.

The baboon that's best at coping with stress is the one that seeks emotional backing from other baboons (support groups, if you will), the researchers found.

The baboon that's best at coping with stress is the one that seeks emotional backing from other baboons (like, support groups), the researchers found.

And the bland assumption that all cartoons are childish or trivial is itself, if you will, a cartoon version of "cartoon."

And the bland assumption that all cartoons are childish or trivial is itself, like, a cartoon version of "cartoon."

"We were willing to overlook it, if you will, being a growth company."

"We were willing to, like, overlook it, being a growth company."

I think it's a reason we've done well; part of our mystique, if you will.

I think it's a reason we've done well; part of, like, our mystique.

They are, if you will, this country's governing body.

They are, like, this country's governing body.

There is also a potential source of shenanigans, if you will.

There is also a potential source of, like, shenanigans.

In each case, the first sentence is a quote from The Wall Street Journal. They mostly appear to be quotes from educated and prosperous middle-aged persons — CEOs and so on. The second sentence in each pair is my translation into the style of younger speakers.

When people who think the English language is going to hell in a handcart cite phenomena like this use of like as their evidence, things are going a bit too far. Like functions in younger speakers' English as something perfectly ordinary: a way to signal hedging about vocabulary choice -- a momentary uncertainty about whether the adjacent expression is exactly the right form of words or not. If the English language didn't implode when if you will took on this kind of role among the baby boomers, it will survive having like take on an extremely similar role for their kids. The people who grouse about like are myopic old whiners who haven't looked at their own, like, linguistic foibles, if you will.

Stalinist Linguistics

Mark Liberman's mention of Stalinist linguistics might give rise to the inference that Stalin had a distinctive approach to linguistics associated with his "left-fascist" politics. Actually, the distinctive, indeed bizarre tendency in Soviet linguistics was due to N. Ja. Marr, who was to Soviet linguistics what Lysenko was to Soviet biology. Among Marr's stranger claims is that all the words of all human languages are descended from the four proto-syllables sal, ber, yon, and rosh.

Stalin's paper Marksizm i Voprosy Jazykoznanija [Marxism and problems of linguistics] is a refutation of Marr. Although Stalin cannot be said to have made any new and profound contribution to linguistics, he actually did have some knowledge of linguistics and his views were quite mainstream.

For a detailed account of Marr and his role in Soviet linguistics, see Jan Ivar Bjørnflaten's book Marr og Språkvitenskapen i Sovjetunionen (Oslo: Novus Forlag, 1982).

November 21, 2003

Phineas Gage gets an iron bar right through the PP

On September 14, 1848, the Free Soil Union in Ludlow, Vermont, carried a news item that began:

As Phineas P. Gage, a foreman on the railroad in Cavendish, was yesterday engaged in tamping for a blast, the powder exploded, carrying an iron instrument through his head an inch and a fourth in circumference, and three feet and eight inches in length, which he was using at the time. [from a scan on Malcolm Macmillan's Phineas Gage information page]

I happened to read this item a couple of days ago while preparing a lecture on emotion for Cognitive Science 001. It reminded me of something that I left out of my earlier post on crossing dependencies in discourse structures: within-sentence syntactic relationships also often tangle.

To understand the phrase "carrying an iron instrument through his head an inch and a fourth in circumference" as the writer intended us to, we have to recognize that the inch-and-a-quarter measurement modifies the iron, and not Phineas' head -- which is in the way in this sentence, just as it was on that September day in 1848.

It's fair to consider this an unhappy stylistic choice. On the other hand, folks sometimes write this way, and they talk this way even more often (and often the results are not so likely to be mentally red-penciled by the audience). In some languages, and some registers of English, syntactic tangling like this is normal. In fact, the only thing that's really troublesome in the Gage example is that 'which' struggling to swim upstream to 'instrument' ...

Tangling of surface syntactic relations is certainly not a new discovery. Among recent treebanks, the German TIGER corpus project's "syntax graphs" permit crossing edges, and so does the analytical level of the Prague Dependency Treebank (where crossing relations are called "non-projectivity").
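To make "crossing edges" concrete, here is a minimal Python sketch that lists the pairs of dependency arcs that cross in a sentence. The function name, the chunking of the Gage fragment into four phrases, and the head assignments are all my own rough illustration, not an analysis taken from TIGER or the Prague Dependency Treebank.

```python
from itertools import combinations

def crossing_arcs(heads):
    """Return the pairs of dependency arcs that cross.

    heads[i] is the index of the head of unit i, or None for the root.
    Two arcs cross when exactly one endpoint of one arc lies strictly
    inside the span of the other.
    """
    # Represent each arc by its (left, right) positions in the sentence.
    arcs = [(min(d, h), max(d, h)) for d, h in enumerate(heads) if h is not None]
    return [
        (a, b)
        for a, b in combinations(arcs, 2)
        if a[0] < b[0] < a[1] < b[1] or b[0] < a[0] < b[1] < a[1]
    ]

# Hypothetical, simplified analysis of the Gage fragment, chunked into phrases.
units = [
    "carrying",                               # 0: root of the fragment
    "an iron instrument",                     # 1: object of "carrying"
    "through his head",                       # 2: modifies "carrying"
    "an inch and a fourth in circumference",  # 3: modifies "instrument"
]
heads = [None, 0, 0, 1]

for (a_left, a_right), (b_left, b_right) in crossing_arcs(heads):
    print(f"{units[a_left]!r} ~ {units[a_right]!r}  crosses  "
          f"{units[b_left]!r} ~ {units[b_right]!r}")
```

Under these assumed attachments, the arc from "carrying" to "through his head" crosses the arc from "an iron instrument" to its measure phrase, which is the tangle described above. Attach the measure phrase to "head" instead (heads = [None, 0, 0, 2]) and nothing crosses, though the reading changes.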

Of course, different frameworks of syntactic description, and different theories about how to explain them, offer different stories about what such apparently crossing relations really are, how they arise, how to think about them. This is the source of many of the non-terminological differences among approaches to syntax. Are the issues in tangling discourse-level relations the same, or partly the same, or entirely different?

November 20, 2003

The emperor and the dialect speaker

Two tidbits that I came across in an old file of miscellaneous linguistic stuff today, while I was looking for something else:

1. Sigismund (1368-1437), Emperor of the Holy Roman Empire, gave this answer to a prelate who, at the Council of Constance in 1414, had objected to His Majesty's grammar:

"Ego sum rex romanus, et supra grammaticam."

2. A Croatian dialect saying (rendered in very rough English-based spelling, without the Croatian diacritics, some of which are hard to render on line):

Kuliko jezikou chlovig zna,

Taliko chlovig valja.

Which translates to:

However many languages a person knows,

That's how much that person is worth.

Right-justified fixed-width raw text, no padding

It is possible to construct raw English text that has a justified right margin without employing any of the space padding that is used by old formatting programs like nroff that were designed to fake right justified text using daisy-wheel printers and fixed-width fonts reminiscent of typewriters. To show that it is not a problem to do this, I offer this example (and if your browser doesn't show this as right-justified, you are using an insane font setting -- your fixed-width font default must be a non-fixed-width font or something). As Mark Liberman has pointed out in connection with a message I recently sent him that had this property, a problem in recreational computer science is suggested by the possibility of right-justified raw text: write a program that takes raw text paragraphs as input and produces right-justified versions of them, respecting certain tolerances and line-length preferences, using transformations that preserve rough synonymy, varying optional punctuation and substituting synonymous word sequences as necessary but never adding extra spaces.
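A toy version of that program can be sketched in Python. Everything in it is an assumption made for illustration: the name justify, the idea of supplying the input as a list of slots that each hold interchangeable phrasings, and the brute-force search with failure caching. It ignores optional punctuation and tolerances, and it has no real notion of synonymy beyond the alternatives it is handed.

```python
def justify(slots, width):
    """Lay out text so every line except the last is exactly `width` wide.

    slots is a list of tuples; each tuple holds interchangeable phrasings
    for one position in the text. Words are joined by single spaces only,
    so no padding is ever added. Returns a list of lines, or None if no
    combination of phrasings fits.
    """
    dead = set()  # (slot index, current line length) states known to fail

    def search(i, line):
        if i == len(slots):
            # End of text: the final line is allowed to be short.
            return [line] if line else []
        if (i, len(line)) in dead:
            return None
        for phrase in slots[i]:
            candidate = f"{line} {phrase}" if line else phrase
            if len(candidate) > width:
                continue
            if len(candidate) == width and i + 1 < len(slots):
                # The line is exactly full: break here and start a new one.
                rest = search(i + 1, "")
                if rest is not None:
                    return [candidate] + rest
            else:
                # Still room (or this is the last slot): keep filling the line.
                rest = search(i + 1, candidate)
                if rest is not None:
                    return rest
        dead.add((i, len(line)))
        return None

    return search(0, "")

# Hypothetical input: the second slot offers two phrasings.
slots = [("it is",), ("simple", "easy"), ("to",), ("do",), ("this",)]
print(justify(slots, 10))  # → ['it is easy', 'to do this']
```

Here "it is simple" is too long for a ten-column line, so the search falls back on "easy", which fills the line exactly. A real solution would need a thesaurus of safe substitutions and some way of scoring how natural the result sounds, which is where the recreational part comes in.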

A shitload more brevity

In Geoff Pullum's brief post about (one of) the Gricean maxims, he makes a good point. It's tough to blog briefly. Which makes me, as a new, wet-behind-the-ears apprentice underblogger, wonder just what the rules of Blog really are.

The beauty of Grice's maxims is that they seem a priori obvious. They tell us to be as informative as necessary but not more so, to convey true beliefs justified by adequate evidence, to be relevant, and to be (cutting a longer story short) brief. Just plain old common sense, right? But at least since Keenan (1974) linguists have wondered whether the maxims apply universally, and independently of culture, style and genre.

Think about bloggers, who as Geoff shows us by anti-example, are typically none too brief. In a blog, be relevant seems strangely not and standards of evidence are not in evidence. Besides, just how much of the blogosphere's great outpouring of cyber-information is truly necessary?

Grice justified his maxims as being special cases of one supermaxim - the Cooperative Principle. "Make your conversational contribution such as is required, at the stage at which it occurs, by the accepted purpose or direction of the talk exchange in which you are engaged." Herein lies the root of the problem. In the case of blogs, uncertainty over audience make-up and mores reaches a new high. You could be anybody. In fact, many of you are not bodies at all, but automated web-crawlers. And there simply is no commonly accepted purpose or direction. Bloggers are free to make up purposes and directions as they go, to inform as much as they like about whatever they like in pretty much any way they like. A young underblogger's apprentice does not (intentionally) stray far from standard purposes and directions, and hence conventions. But deeper in blog-space, anything might go. Who is to say whether bloggers follow these rules, or these...

The Maxims of Blog

Maxim of Enlightenment: 1. Bring enlightenment.

Maxim of Controversy: 1. Be controversial. (Occasionally say what you are certain is true. It adds credibility.)

Maxim of Digression: Digress. (Especially (auto)biographically. Note that Gorky was born Aleksey Maksimovich Peshkov, and "Maxim" derives from his father's name, the "-ovich" being a patronymic ending. Thus does one Maxim beget another. Hopefully, more on Russian and other naming conventions in a later log. And perhaps someone more literary or political than I will have something to say about "the father of Soviet literature and the founder of the doctrine of socialist realism," and the reason Nizhny Novgorod was for many years hard to find on a map. The Nizhny Novgorodites are still proud of Gorky as far as I can tell, but not enough to have their city bear his name. (Beaver, Utah is not named for me (or vice versa (note the embedded parenthetical - these are good)), but it is apparently the birthplace of Butch Cassidy, né Robert LeRoy Parker. So why "Butch"? Well, he once worked as a butcher. His most famous partner in crime (aka Harry Longabaugh) was nom de guerred in a reverse Gorky maneuver: as a young horse rustler the Kid spent two years in jail in Sundance, Wyoming. Not much going on in my name, except that Beaver is supposedly a case of very poor translation by English officials helping my ancestors anglicize their Polish family name, "Kaczka", which means "duck". David Duck.))

Maxim of Entropy: 1. Hyperlink obscure expressions.

Keenan, Elinor O. 1974. "The Universality of Conversational Postulates." Studies in Linguistic Variation, ed. Ralph W. Fasold and Roger W. Shuy (Washington, D.C.: Georgetown Univ. Press), pp. 255-68.

Edward Sapir and the "formal completeness of language"

Camille Paglia recently slammed the blogosphere for "dreary meta-commentary," a "blizzard of fussy, detached sections nattering on obscurely about other bloggers," lacking the relevance to "major issues and personalities" of Paglia's own writing. So I warn you that we're about to go meta for a few lines. But when we re-emerge into normal space, the nattering blizzard safely behind us, we'll be within sensor range of some "major issues and personalities." Major issue: the formal completeness of language. Major personality: Edward Sapir.

Language Hat pointed to John McWhorter's piece on Mohawk Philosophy Lessons and my follow-up on Sapir/Whorf. In a comment on Language Hat's post, Jonathan Mayhew wrote:

Doesn't the fact that we can discuss certain differences between languages in a single language indicate that the Whorf-Sapir hypothesis is flawed? That is, I can use English to describe Hopi thought patterns. Thus language would be more malleable, not determining thought, but elastically adapting to changes in thought.

In response, being lazy, or rather pressed for time, I'll cut and paste question 2.2 from the final exam for my intro linguistics course in the fall term of 2000:

The American linguist Edward Sapir wrote in 1924:

The outstanding fact about any language is its formal completeness ... To put this ... in somewhat different words, we may say that a language is so constructed that no matter what any speaker of it may desire to communicate ... the language is prepared to do his work ... The world of linguistic forms, held within the framework of a given language, is a complete system of reference ...

What would it mean for this to be false? What does it mean if it is true? How can you square this quote with the fact that Sapir is also associated with the Sapir-Whorf hypothesis, crudely expressed as the slogan "language determines thought," or more precisely expressed by Sapir as:

We see and hear and otherwise experience very largely as we do because the language habits of our community predispose certain choices of interpretation ...