October 31, 2006

If they do it too much, they should be told not to do it at all

Yes, I know: stated so baldly, this zero-tolerance policy (ZT-1) sounds extreme, not to mention unlikely to be effective unless constantly and severely enforced. Nevertheless, advisers on style sometimes end up following ZT-1, and also the related policy

ZT-1 is one of the factors that leads to the proscription Avoid Passive; student writers use passive clauses more than their teachers think they should, so they're told to avoid them wherever possible. ZT-2 is one of the factors that leads to the proscription Avoid Pronouns; student writers (well, actually, all writers) occasionally produce sentences with unclear or incorrect pronominal reference, so some teachers, remarkably, tell them not to use pronouns.

Recently it occurred to me that ZT-1 might be part of the history of what I have come to call Garner's Rule (after Bryan Garner, who is its most vigorous current exponent), proscribing sentence-initial linking however, as in "You may bring paper to the exam. However, you are allowed to bring only one page."

First, rather a lot of background about Garner's Rule. (And an acknowledgment: what I'm saying here represents joint work by me and Douglas Kenter.)

Garner gives a full statement of the rule in his 1999 book of advice for legal writers, The Winning Brief:

Replacing however by but is what he recommends first (and most often), so from here on I'll use "Garner's Rule" to refer to the stronger form:

Garner's Rule has a long history, going back at least to -- wait for it -- William Strunk Jr.'s 1918 Elements of Style, which says (as Mark Liberman and Geoff Pullum noted on Language Log a while back) sternly and uncompromisingly:

(The proscription is softened some in the later Strunk & White version: "The word serves better when not in first position.")

Garner's The Winning Brief assembles advice from over a hundred years that variously recommends using discourse connectives in general (certainly good advice), proscribes sentence-initial however, explains this proscription, argues that there's nothing wrong with beginning sentences with but (keep this in mind), and recommends a fix for sentence-initial linking however (usually either replacement by but or moving however inside the sentence).

So you're asking: What's wrong with sentence-initial however? Garner tells us, in his 1998 Dictionary of Modern American Usage, that it

The objection is aesthetic, a matter of personal taste and judgment. Garner finds sentence-initial however "ponderous" and "unemphatic", and a number of other writers agree with him -- for instance, Lucile Vaughan Payne, in her 1965 The Lively Art of Writing (cited by Garner in The Winning Brief), who disarmingly admits that it's all rather mysterious:

(Note the reference to student writers. I'll come back to that.)

However, still other writers have different tastes and (rightly) object to having their judgments dismissed by airy assertions about what sounds good, ponderous, or (un)emphatic. (I'm not much of a user of sentence-initial however myself, as I noted in an earlier posting, but I see no reason to impose my personal style on other people.)

Where do these aesthetic judgments come from? Mark and Geoff suggested that Strunk's stylistic preferences came from the writing he was exposed to as a young man. This makes sense; you develop your sense of style from the models around you.

You also develop your sense of style from explicit teaching and advice. Once a proscription against sentence-initial however was articulated, it had a life of its own and could be passed from one generation of writers and teachers, in communities of stylistic practice, to the next. Like other fashions in taste, it diffuses.

Is diffusion a sufficient explanation for the stylistic tastes of Garner and others? Maybe so, but I can think of two other factors that might contribute to a dispreference for sentence-initial however. Before I discuss them -- yes, we're going to get back to ZT-1 -- I want to take up, and dismiss, another argument against sentence-initial linking however that I've seen in net discussions: that it introduces an ambiguity.

The argument goes as follows: when you read a sentence beginning with however, or hear one, you don't know whether this is linking however or concessive however (as in "However you got that dog, you can't keep it" or "However many times you tell me that, I won't believe it"). So the sentence so far is ambiguous. On the other hand, initial but would be unambiguous. [Update: Bruce Rusk writes to point out that even this but is (temporarily) ambiguous, thanks to the fact that there's an exclusionary prepositional idiom but for 'if it weren't for the existence of', as in these contrasting pairs: "Writing well is not easy. But for grammarians it is impossible." (contrastive coordinating but) vs. "Writing well is not easy. But for grammarians, it would be impossible." (exclusionary prepositional but for). Potential ambiguity is everywhere.]

This is an extraordinarily silly argument. To start with, as long as the writer is punctuating properly, sentence-initial linking however is unambiguously signaled by a following comma, and in speech it's usually associated with the prosody that the written comma indicates. There's no ambiguity even at the first word. Then, once you get past the first word, any unsureness on your part as to which however was intended is quickly eliminated by the following material.

I believe that ambiguity-avoidance arguments for particular stylistic choices are always flawed, but this one is particularly lame, since a huge proportion of sentences begin with words whose identity can be determined only when the sentence is continued: "he's" could be "he is" or "he has", "that" could be a complementizer or a demonstrative, "is" could be copular be or the be of the progressive or the be of the passive, "later" could be an adjective or an adverb, and so on, endlessly. If we objected to this sort of local indeterminacy for a "however", we would be objecting to almost everything.

Now to two considerations that actually might contribute to a feeling against initial however.

The first consideration is a kind of "division of labor" argument for initial but over however. Here's how it goes:

2. The linker however can occur in either place.

3. The labor of signaling contrast could then be divided between the two linkers if however was restricted to sentence-internal position: but only sentence-initially, however only sentence-internally.

Ok, class, where have we seen this argument before? Yes, in Fowler's famous suggestion (we've now posted so much on the That Rule, or as I now prefer to call it, Fowler's Rule, that I hardly know which posting to link to, but here's one of my favorites) that the labor of signaling relative clauses might be divided between that and which:

2. The relativizer which can occur in either place.

3. The labor of signaling relatives could then be divided between the two relativizers if which was restricted to nonrestrictive relative clauses: that only in restrictives, which only in nonrestrictives.

Perfectly parallel reasoning in the two cases. If you're the sort of person who likes the division-of-labor argument for Fowler's Rule -- and a great many people do -- then you should also like that argument for Garner's Rule. I've never really understood why anyone would want to trade in variation for complementary distribution, so I don't buy the argument in either of these cases (or any others). But tastes evidently differ.

Finally, we get to ZT-1. Recall Payne's remark that student writers (she means, for the most part, college student writers) "invariably" put however in initial position. Other writing teachers have remarked to me that their students are very fond of however as a discourse connective, in particular as a marker of contrast, and that they almost always put it in initial position, and my own experience teaching accords with these observations. We could, of course, be wrong; possibly no one has studied the matter systematically, just because everyone is pretty sure what the facts are.

In any case, there seems to be a general belief among writing teachers that college students overuse initial however. This would lead them to be prejudiced against it and, in some cases, to advise their students not to use it at all. That's an instance of ZT-1.

I'll leave for a follow-up posting the question of why college students might like the discourse connective however so much -- there's a delicious irony in there -- and why they prefer it in initial position. If you are a college student yourself, you might think about your own practice and the reasons for it. If you have college students handy, you might ask them.

For now, I'll content myself with a few comments on zero tolerance policies, in general and with respect to stylistic choices.

Zero tolerance policies can be found many places: Alcoholics Anonymous and school drug policies, for example. They require enforcement, either informal (AA) or institutionalized (school drug policies), and it's not clear how effective they are in eliminating the targeted behavior. In the case of stylistic choices, the goal should really be not to eliminate one of the choices, but only to reduce its use, either in sheer frequency (in favor of a greater variety of choices) or in potentially problematic situations. ZT-1 and ZT-2 are overkill.

They are also almost surely ineffective, at least if the goal is to get the students writing clear, smooth, interesting prose. One easy response to a prohibition against initial however is to follow the strong form of Garner's Rule: whenever you find yourself tempted to begin a sentence with linking however, replace it with but. The result is that overuse of however becomes overuse of but. To my mind, this is no advance (and I'm a big but-user). Another easy response is to just delete the however, thereby much reducing the number of explicit discourse connectives, certainly not a result we want.

There are techniques that could be effective. A piano student who's inclined to overuse the sustain pedal -- it can cover a lot of finger sins -- might be told to play some pieces without the sustain pedal, either a few times or for some period, after which the sustain pedal is reintroduced. Similarly a sports player who's inclined to favor one particular move very heavily might be told not to use it, either for one practice or for some period, after which the ban is lifted. The aim is to expand a repertoire.

This could easily be done in writing classes: not a lifetime ban on initial however, but a short-term ban, during which alternatives are offered, perhaps even required: "Your essay must have at least two occurrences of sentence-initial but, two of sentence-internal however, and two occurrences of other discourse connectives". Writing teachers already give assignments that make the students follow special rules, requiring or prohibiting particular bits of form or content, either in their own writing or in editing material provided by the teacher. The discourse adverbial however could easily be folded into such assignments. (And probably has been, by teachers not totally under the sway of Garner's Rule.)

zwicky at-sign csli period stanford edu

Political correctness, biology and culture

Yesterday ("Two new reviews of Brizendine", 10/30/2006) I quoted Rebecca Young and Evan Balaban's description of a primal melodrama: "the foil of 'political correctness' against which the author wages a struggle for truth". Independent of the logical content of the debate over the biology and culture of sex differences, there is certainly also a larger ideological struggle, in which several intellectual armies have been campaigning for centuries. I mentioned one skirmish on one of the fronts of this war -- Paul Ekman's fight with Margaret Mead, Gregory Bateson and Ray Birdwhistell -- and promised to tell the story in another post. Here's the first installment: setting the stage for the battle.

In 1998, HarperCollins published a new edition of Charles Darwin's "The Expression of the Emotions in Man and Animals", with an Introduction, Afterword and Commentaries by Paul Ekman. Although Darwin's work is available for free on line (here, here, etc.), this book is worth buying for Ekman's additional contributions.

Ekman's Afterword is subtitled "Universality of Emotional Expression? A Personal History of the Dispute". It starts like this:

There is a story to be told. Not just the scientific story of how Darwin's views on expression were confirmed (or not) by research in the hundred years after his death. There is a story about how the clash of strong personalities, world politics, and the role of friendship and loyalty influenced the judgments of key figures in the scientific community. It is a drama that involves strong feelings and concealment, a drama not entirely over as I write, with the actors struggling over the ownership and interpretation of Charles Darwin's legacy about facial expressions of emotion.

Ekman explains that

I considered limiting myself to the scientific evidence, but if I had, readers might not understand what all the furor is about. The story involves more than Darwin's evidence, or evidence found since then. For readers to understand the controversy and make their own judgments, they need to know what is not in the scientific reports; they need to know the motives, history and social factors which influenced the principal antagonists. It was only a small group of actors. I was one of them, and I knew all the others, and am the only one still alive to tell this story.

The other key actors were Margaret Mead, Gregory Bateson, Ray Birdwhistell and Sylvan Tomkins. Ekman says of himself:

I am the last actor, entering this fray as an unknown scientist, half the age of each of these luminaries when I began my research on expression in 1965.

1965, when I started college, was a time when empiricist epistemology -- the view that what we think and feel is entirely a reflection of our experiences -- was still intellectually dominant. Ekman puts it this way:

Through the first half of this century, the behaviorists in psychology claimed that learning was responsible for all that we do and all that we are, including our attitudes and personalities. Individual differences could be wiped out if everyone had the same environment. THere would be no differences between men and women if they were only brought up in the same say. Parents were held responsible by psychiatrists for the neuroses and psychoses of their children. If they had acted differently, their offspring would be healthy, creative and productive. In education, differences in cognitive skills were attributed solely to poor schooling and impoverished home environments, with no acknowledgment that there might be inborn differences in kinds of intelligence. In anthropology, the cultural relativists triumphantly produced accounts of exotic cultures where people lived, mated and raised their offspring in ways so different from ours. The first half of the twentieth century was a time of optimism about the perfectibility of man. There was no acknowledged limit to how much human nature could be reconstructed by changing the environment. Change the state, educate the parents, modify child-rearing practices and we would have a nation of renaissance men and women. Nothing was innate. Our genes played no role in any of the differences in talent, ability or personality. Everything about our social lives was thought to be created by experience, and experiences could be changed and improved. As Margaret Mead put it in her book Sex and Temperament in Three Primitive Societies (published in 1935), 'We are forced to conclude that human nature is almost unbelievably malleable, responding accurately and contrastingly to contrasting cultural conditions.'

Ekman notes that "This one-sided viewed developed in part as a backlash against Social Darwinism, eugenics and the threat of Nazism", and quotes a passage from Margaret Mead's autobiography, where she explains that "we knew how politically loaded discussions of inborn differences could become ... [and so] ... it seemed clear to us that the further study of inborn differences would have to wait upon less troubled times".

Ekman's reaction to this ideological stance is mixed:

I sympathize with Mead's political concerns, but she had more than postponed the study of inborn differences. She had argued forcefully that biology played no role in human nature ... Her concern that racists would misuse evidence of biologically based individual difference led her to attack any claim for the biological basis of social behavior, even when biology is responsible for what unites us as a species, as in the case of univeral expressions of emotions.

For decades any scientist who emphasized the biological contributions to social behavior, who believed in an innate contribution to individual differences in personality, learning, or intelligence, was suspected of being racist. ... In that political climate the claim that facial expressions are the product of culture was accepted without evidence, but no one looked for evidence. It was obvious, it fitted so well with the reigning dogma.

Mead's student Ray Birdwhistell developed and applied the system of kinesics to describe body language, and he concluded (on the basis of microscopic analysis of very small samples of behavior from various cultures and contexts) that

As research proceeded ... it became clear that this search for universals was culture bound .. there are probably no universal symbols of emotional states.

Ekman observes that Margaret Mead prepared a 1955 edition of Darwin's Expression, in which she "included pictures from a conference on kinesics", and wrote an introduction in which she "did not say anything of Darwin's proposal that expressions are universal, nor did she mention the word 'emotion'."

Ekman concludes this section with a sentence that Geoff Pullum will appreciate:

I wonder how Darwin would have felt had he know that his book was introduced by a cultural relativist who had included in his book pictures of those most opposed to his theory of emotional expressions.

In a Language Log post a few days ago ("Embedded rhetorical questions", 10/29/2006), Geoff asked whether it's possible for "an interrogative content clause that [is] the complement of a verb like wonder [to have] rhetorical force". He proposed an artificial example, and asked readers to "send me good, clear, attested examples if you happen to spot them in texts or hear them viva voce".

I think it's clear that Ekman believes that Darwin would have indignantly repudiated Mead's introduction, and that he assumes that his sympathetic readers will believe the same thing. Thus his "I wonder how Darwin would have felt..." is actually an invitation to contemplate the way that he believes, and believes that we believe, Darwin would have felt.

Some other time , I'll sketch the fascinating scientific and personal story that unfolded on the stage that Ekman has set. For now, let me just observe that my favorite sentence, from what I've quoted so far, is this one -- presented here in a slightly abstracted form:

In that political climate the claim that ____ was accepted without evidence, but no one looked for evidence. It was obvious, it fitted so well with the reigning dogma.

Ironically, this is the same process at work when proponents of "the emerging science of sex differences" present traditional sexual stereotypes amid a flurry of irrelevant references to scientific publications. Political correctness serves no single master.

[The story continues here.]

Merely great, not unconscious

Linda Seebach reports what may be a new usage in the bad=good genre. This is from the Powerline blog ("The St. Louis Cardinals -- A Closer Look", 10/28/2006):

At the end of May, [St. Louis] had the best record in the National League. Pujols was unconscious; his numbers projected to 80 homers and 220 RBIs. He severely strained a muscle in his ribcage at the beginning of June and went on the DL. When he came back, he was merely great, not unconscious.

I've never seen this before -- if it's familiar to you, let me know.

It may be one of those sporadic, spontaneous value-inversions, like the use of "wicked retarded" that came up in an earlier LL post:

Last night the roomies and I went to Katie's for a potluck so good the food was wicked retarded. It was creepy how well everything went together everyone made dishes with fall veggies

[Update -- Jamie Dreier explains:

It's fairly common in sports talk. It's close to or synonymous with "playing over one's head". The idea is that your instincts take over, you aren't consciously controlling your movements.

My sense is that it's used more about basketball players than other athletes. Here's an example:

Not only were the Cavs a step slow, but the Pistons were playing unconscious basketball.

And from some "notes on teaching shooting to others":

You may have heard a player being referred to as "unconscious" while hitting shot after shot in a game. Great shooting has to be done from developed habits that don’t require mental preparation during the act. The game moves too fast. Habits formed for this level of use must be ingrained. This takes time, feedback, and success. A one-on-one situation allows the player to get a reaction from the teacher every time a shot is attempted. This is the fastest way to get results.

I've heard this kind of thing in basketball contexts. But from the Powerline example, it seems that the meaning has been generalized from "playing with instinctive skill" to "playing uncannily well", or something like that.

And Leigh Hunt adds:

I've seen and heard this before. I also found a relevant hit searching for "sports 'played unconsciously'".

The usage seems to reflect the contemporary sporting belief that athletes perform best in "the zone," which is supposed to be, as best I can make out, a mental state in which high-level thought yields to a kind of effortless intuition. That is, it's quite unlike "wicked retarded" in that the meaning of the negative-sounding word hasn't changed all that much--it's more that bad really is good in the given context.

Yes, I agree that this is not a bad=good example after all -- it's a different sort of semantic shift.

And not a recent one, either -- Roger "Unconscious" Shuy takes it back to neolithic times

It's not exactly clear to me what you (and Linda) find odd here. I don't take the meaning of "unconscious" as bad, since high school I've heard this to mean something really good in certain contexts, especially sports. In one school basketball game back then, for some reason every shot I took went in the basket. The coach called me "unconscious Roger" and told me to keep on doing whatever it was I was doing. He meant that I was "in a zone," using today's language for the same or similar things (unfortunately I never managed to be unconsciously good again). To be unconscious seems to mean that you can do everything right without thinking about it. Pujols is a great hitter who, at the beginning of the year, was even better, unconscious that is. Even when merely good, he's great. So I took this contrast about Pujols to be between greater (unconsciously so) and great (less so).

But then Ben Zimmer comes back with this:

I think there might actually be a family resemblance between the sports usage of "unconscious" and your "wicked retarded" example. They could be thought of as members of a larger category of approbative terms having to do with the loss of rational faculties. One could trace this category back to the "hot" jazz era of the '20s-'30s -- think of "mad" or "(stone) crazy" as terms of approbation. The lineage continued through to the hiphop era of the '80s and onwards, which has given us "ill", "sick", "stupid", "retarded", etc. In musical contexts, such terms often relate to an ethos of improvisation, as found in both hot jazz and hiphop freestyling: true creativity can only be achieved by letting go of cautious, studied technique. "Unconsciousness" in basketball or other sports would seem to mirror this abandonment of calculated effort.

]

[ And Darryl writes in with evidence to support Ben:

A possible source for wicked retarded might be related to the partying. In that context "retarded" has a meaning derived from its original meaning: "get retarded" means to dance in an uncontrolled though not swift manner, perhaps remeniscent of a seizure, hence "retarded". It's easy to see how that could be extended to mean that a party was wild ("it was retarded"), then from that it's easy to get "awesome" from it as well.

Black Eyed Peas, "Let's Get Retarded"

We got five minutes for us to disconnect from all

intellect and like the ripple effect

Bout' to lose her inhibition. Follow your intuition.

Free your inner-soul and break away from tradition.

...

Lose control, of body and soul.

Don't move too fast people, just take it slow.

...

Lose control, of body and soul.

Don't move too fast people, just take it slow.

...

Lose your mind this is the time,

Y'all test this drill, Just and bang your spine.

(Just) Bob your head like epilepsy,

up inside your club or in your Bentley.In the same song there's a number of references to not being consciously dancing or being in complete control of your movements, very similar to the use of "unconscious" in sports. BEP makes further analogy, using stupid ("stoopid"), cukoo, and ignorant ("ig'nant"), as well as the unrelated "hectic", to mean the same thing.

Also worth noting is that for a while now hip hop artists, BEP included, as well as Busta Rhymes, have used "break your neck" to mean bobbing your head while dancing, or perhaps dancing in general.

]

October 30, 2006

The Book of Lost Books

It's not all snarking here at Language Log Plaza. Sometimes we want to tell you about admirable things, or just explore data. Today I'm here to appreciate Stuart Kelly's The Book of Lost Books: An Incomplete History of All the Great Books You'll Never Read (Random House, 2005), a wry account of books destroyed, misplaced, never finished, or never even begun. From far ancient times to Sylvia Plath and Georges Perec, books have been wiped out by their authors (or their families), through accident or forgetfulness, and (far too often) in the purifying fire of ideology.

With considerable learning, Kelly covers high culture in the literate world, from Greece and Rome through China, India, the Arabic lands, Japan, later Europe (including Russia), and the U.S. (Nothing from Canada, Latin America, Africa, or Australia.) It's mostly a tale of monstrously ambitious men; of the 79 named authors, only four are women (and though you can be expected to have heard of Sappho, Jane Austen, and Sylvia Plath, you've probably never come across Faltonia Betitia Proba, from whom only one poem survives, and that's a cento, a pastiche of lines from other writers), and the women are not notably over-reaching, while most of the men had over-sized egos and aims, which of course gives something of an edge to the narratives.

Here are seven bits that caught my eye.

1. Ovid and linguistic field work:

2. Milton misplaced;

Our forgetful authors.

3. Gibbon and the great work of his life:

Earlier, visiting Rome, he had entertained the idea of writing about the decline and fall of the city. After the Swiss debacle, he returned to the Roman project, now expanded to the whole empire. Bingo.

I can't read this without a shiver. Not to mention some anxiety about whatever happened to various manuscripts of mine that never found a publishing source.

4. Scott I, the unpersuadable:

"No persuasion could arrest him" is delicious, not the least for being entirely comprehensible while also being well off the idiom of modern English.

There were two works, both still (sort of) extant in manuscript, though John Buchan in his life of Scott prays that "it may be hoped that no literary resurrectionist will ever be guilty of the crime of giving them to the world."

5. Scott II, the punctuation-free:

Omit needless words!

Relying on printers to supply punctuation is a remarkable touch. [Update: As Andrew Gray tells me, not so remarkable at all, but fairly common 18th-century practice. Authors could have a chance at fixing spelling, punctuation, and capitalization once the compositors were done with their work, but probably many did not bother.]

6. Austen begs off a task:

Jane Austen is invited by James Stanier Clarke, the prince regent's librarian, to write (following a plot outline he proposed) The Magnificent Adventures and Intriguing Romances of the House of Saxe Coburg. She demurs:

"Gloriously arch, and daringly candid", Kelly says.

7. Flaubert and taboo avoidance:

The scandale ensured healthy sales. (p. 271)

Well, of course.

[Update: Languagehat supplies a pointer to a remarkable lost book story, not in Kelly's book: Bakhtin smoking his own manuscript -- using (up) its pages as cigarette papers!]

zwicky at-sign csli period stanford period edu

Stupid timewasting insincere voicemail blather

I just called the number of an office whose identity will not be revealed because they definitely should have been open till 12 noon and not gone to lunch early, and I got the familiar slow, time-wasting, prerecorded voice ("You have reached the office of the Division of Humanities here at the University of California, Santa Cruz..."), and I was reminded of something about voicemail systems: every occurrence of "You have reached" that begins a phone conversation is a lie.

John Q. Smith at Allied Enterprises never picks up his phone and says "You have reached the desk of John Q. Smith at Allied Enterprises Incorporated, and I'm available to take your call right now." Whenever you are told over the phone that you have reached someone or something, you have not reached him or her or it.

But hey, you knew that already. You're just as irritated by voicemail as I am. I momentarily forgot that. Sorry. Just venting.

You have reached Language Log here at One Language Log Plaza, http://www.languagelog.com. Have a nice day.

By the way, people tell me I have a habit of lambasting things as

stupid and thus must be a very intolerant person. This charge is

stupi

totally unjust. I have never called anything stupid on Language

Log, except... well, let me see...

Stupid prophylactic public statement blather

Stupid wild over-the-top anti-linguist rant

Stupid time-wasting insincere voicemail blather

Stupid self-defeating warning label nonsense

Stupid machine-generated spiritual blather

Stupid title blather for language articles

Stupid bank transfer scam email

Stupid redundant warning blather

Stupid contentless political blather

Omit stupid grammar teaching

Stupid junk mail envelope blather

Stupid fake pet communication tricks

Well, all right, there have been just a very few exceptions. So who died and left me the job of being Mr Tolerant about everything, huh?

Two new reviews of Brizendine

Two very skeptical reviews of Louann Brizendine's The Female Brain have recently appeared, both under cleverly impolite headlines.

Rebecca M. Young and Evan Balaban (or the editors of Nature) make a pun on "psychoneuroendocrinology", titling their review "Psychoneuroindoctrinology" (Nature 443(7112), p. 634, October 2006). Young and Balaban are brutally critical: they say that the book "fails to meet even the most basic standards of scientific accuracy and balance", "is riddled with scientific errors", and "is misleading about the processes of brain development, the neuroendocrine system, and the nature of sex differences in general". Perhaps their most important single point is this:

Human sex differences are elevated almost to the point of creating different species, yet virtually all differences in brain structure, and most differences in behaviour, are characterized by small average differences and a great deal of male–female overlap at the individual level.

I've made free to put a copy of this review on the Language Log server, since I believe that a much broader audience will be interested in this topic than those who can justify the $199/year that I pay to subscribe.

Liz Lopatto's review in Seed Magazine has been given an even more startlingly impolite headline: "The Female Brain Fart". Lopatto went beyond checking Brizendine's references: she called up some of the cited authors, who said things like "My data don't speak at all to whether or not girls are compelled from an early age to attend to faces" (Erin McClure), and ""There is nothing in my study that seems to warrant this reference" (Ron Stoop).

Lopatto also links to a list of sexual biology posts on Language Log ("David Brooks, Neuroendocrinologist"), and then creates a sort of virtual debate between Dr. Brizendine and me:

Brizendine said, via email, that she inserted citations in order to "provide a resource for those young researchers and students who wanted to go deeper into the field of gender-specific biology," rather than to assert something conclusive.

But Liberman suggests these references actually serve to present her as an authoritative voice. While scientists publishing the results of their research in journals are held to rigorous standards of peer-review, the standards for popular science books are much more lax, Liberman said. He said he finds it disturbing that Brizendine chose to invoke the scholarly form of using citations.

"Brizendine and other authors like Leonard Sax,"—a physician and author of the 2005 book Why Gender Matters—"are interesting in that they have quite an elaborate scholarly apparatus: They have endnotes and extensive bibliographies," he said. "There's a substantial amount of credibility from credentials and employment, and the long list of references and footnotes present a certain impression about the solidity of the assertions that are made: This is not just an opinion and not just the opinion of an expert, but the backed-up opinion of an expert."

To avoid a possible misunderstanding, let me explain that I don't "find it disturbing that Brizendine chose to invoke the scholarly form of using citations". I think it's a Good Thing for works of popular science to cite their sources. What bothered me was the fact that she -- like Leonard Sax and some other writers on this topic -- cites sources that are irrelevant or even contrary to the specific (controversial or false) assertions in the text.

[By the way, I may be too old-fashioned, but I thought that Seed's headline was inappropriate. This is partly because the scatology seems like a cheap shot, but also because none of the meanings of the term "brain fart" really seems to fit this case. Sources on the internet offer glosses such as "A lapse in the thought process; an inability to think or remember something clearly" (Wiktionary); "The actual result of a braino, as opposed to the mental glitch that is the braino itself. E.g., typing dir on a Unix box after a session with DOS" (Jargon File); "An inelegant way of saying, 'I forgot,' it refers to your mind going blank. Someone may say, 'Sorry, I just had a brain fart.' It can also refer to a situation in which someone speaks 'out of turn,' especially to a superior. For example, if you march into your boss's office and speak your mind without first thinking about the possible consequences, you've just had a brain fart." (NetLingo); "Quick-and-dirty creative output. The byproduct of a mind stuffed with food for thought that can therefore produce information without effort." (WordSpy). Only the last of those has any plausible connection with Brizendine's book -- its problems appear not to be due to forgetfulness or lapses in thought, but rather to the elevation of ideology over science, and to something between sloppiness and misrepresentation in the use of references.]

I'll close with the ending of the Young and Balaban review, which expresses the culture-wars aspect of this issue clearly:

Like other popular books on the biology of human nature, The Female Brain has a rigid plot line: the foil of 'political correctness' against which the author wages a struggle for truth. We are told that the media, feminists, pointy-headed intellectuals and a vaguely specified 'culture' dogmatically insist that gender or racial differences in personality and behaviour are entirely cultural, an observation that is hard to reconcile with the volume and tone of media attention to the biology of gender and sexuality. [...]

Ultimately, this book, like others in its genre, is a melodrama. Common beliefs are recast as imperilled and then saved. Stark, predictable protagonists (an initial "cast of neuro-hormone characters" that reads like a guide to astrological signs) interact linearly with foreseeable results. The melodrama obscures how biology matters; neither hormones nor brains are pink or blue. Our attempts to understand the biology of human behaviour cannot move forward until we try to explain things as they are, not as we would like them to be.

That melodramatic plot line is sometimes a true picture of the situation -- certainly there are plenty of people who deny any human sex differences in cognition as a matter of ideological commitment rather than empirical fact -- and so perhaps there is a bit of truth in this aspect of the story told by Brizendine, Sax and others. The clearest example of such a melodrama that I know about was Paul Ekman's struggle, against the likes of Margaret Mead, Gregory Bateson and Ray Birdwhistell, to establish that human facial expressions are not socially constructed. But that's a topic for another post.

October 29, 2006

Embedded rhetorical questions

Ivano Caponigro gave an excellent talk to my university's Department of Linguistics last Friday (abstract available here). The subject was rhetorical questions. Ivano was concerned to explicate the view that the hallmark of a rhetorical question is (roughly) that its answer is not just known (or believed) but mutually known (or believed) by the participants (the utterer and the addressee). The lively discussion that followed developed a case that this needs to be rendered a little more subtly to allow for situations like (for example) a disingenuous speaker among a group of hypocrites uttering "Would any of us here today ever tell a lie?" can be a rhetorical question, inviting the answer "No, of course we wouldn't", even if the speaker knows darned well he told a lie just this morning; what is important is not that utterer and addressee should actually believe (let alone know) that the answer is in the negative, but that the intent of the question is to provoke an admission of general agreement (even if insincere) to the effect that the answer is in the negative.

That sort of stuff was the main drift of the talk and the question period. But one other point that came up briefly was Ivano's claim that you can embed rhetorical questions — an important point for his thesis that rhetorical questions are absolutely not to be understood as "really" expressing statements (a wrong-headed view that some have advanced). There was some demurral at the embedding claim; not everyone seemed to be in full agreement. I think Ivano is exactly right, though. An embedded interrogative clause (an interrogative content clause in the terminology of The Cambridge Grammar) can have the force of a rhetorical question. I mention the point here so that readers who think they can find good attested examples of this can send them to me (mail pullum at gmail.com). In what follows I will show the flavor of what I think is possible by citing a couple of constructed examples.

Imagine someone addressing a city council meeting in Santa Cruz, arguing that the city is being unfair in its enforcement of the ordinance forbidding people to sleep in a motor vehicle (along with other kinds of illicit camping within the city limits: the target is of course homeless people):

I feel I want to ask how many rich people this law has ever been applied to.

The point is that the speaker's aim, pragmatically, might not be in any sense be to ask the question that the underlined part expresses, or to wonder about what the answer to it might be, or to express the feeling of wanting to ask it. The speaker knows full well that the city has never once hauled a millionaire into court for dozing in his Lincoln Town Car while parked on West Cliff Drive after a nice dinner at Casablanca, and they know the speaker knows that, and they know the speaker knows they know, and so on. It's got the characteristics of rhetorical questions in the strongest form. The pragmatic point is to draw attention to the fact that everyone knows what the answer is, and to bring out a comforting chorus of "Hear, hear!" in agreement among the general public in the audience.

During the discussion Ivano admitted that it was hard to imagine an interrogative content clause that was the complement of a verb like wonder having rhetorical force; but I don't think he needed to make that concession. I think we can contextualize that too. Imagine a Republican candidate for Congress making a stump speech and saying this:

I'm wondering what the Democrats think Iraq would be like a month from now if we brought all our troops home today.

Everybody knows what Iraq would be like a month from now if all American troops were home by tonight. It would be the scene of a bitter and massively violent civil war between Shiite and Sunni Muslims, probably also involving the Kurds. And you can imagine the candidate knowing that everyone agrees on that point. The force of the underlined clause can be that of a rhetorical question — the intent being not to raise the question for discussion but to put out on the table the fact of the general agreement concerning what the answer is.

So that's my view: the Caponigro claim that there can be rhetorical embedded interrogative clauses is correct, possibly even more so than he thought on Friday. Do send me good, clear, attested examples if you happen to spot them in texts or hear them viva voce.

Two meta-snowclones

Arnold Zwicky points us to yesterday's Zippy the Pinhead:

And Jennifer Leo writes that

In the September-October 2006 issue of mental_floss, an article on animal learning included the gem that "rats have about as many ways of avoiding poison as Eskimos have of denying that myth about words for 'snow.'"

Finding the truth by compiling a list of falsehoods

I hear rumors that Language Log has been giving linguists a reputation for cynicism. Being myself a positive and even enthusiastic person, I prefer to think of this as the regrettable by-product of a commitment to scientific methods, as discussed in an earlier post "Hungarian speech rate and the tribunal of revolutionary empirical justice" (10/16/2006). But the modern culture of rational inquiry has roots in the humanistic tradition as well. I recently learned something new about this, which I thought I'd share with you.

In 1692, Pierre Bayle announced his intention to "compile the largest possible collection of mistakes that can be found", as a method for finding truth by exclusion:

[S]i par example j'étois venu à bout de recueillir sous le mot Seneque, tout ce qui s'est dit de faux de cet illustre Philosophe, on n'auroit qu'à consulter cet article pour savoir ce que l'on devroit croire, de ce qu'on liroit concernant Seneca dans quelque livre que ce fût: car si c'étoit un fausseté, elle seroit marquée dans le recueil, & dès qu'on ne verroit pas dans ce recueil un fait sur le pied de fausseté, on le pourroit tenir pour veritable.

If for example I had come to the end of collecting, under the word Seneca, everything false that is said about this illustrious philosopher, one would need only to consult this article in order to know what one should believe, of what one might read about Seneca in whatever book it might be: for if it were a falsehood, it would be listed in the collection, but given that one did not see a fact in this collection under the heading of falsehood, one could take it for the truth.

(from Project d'un Dictionaire Critique, 1692)

This struck me at first like a typical example of enlightenment naiveté, but then I thought: snopes.com.

And according to the entry for Pierre Bayle in the Stanford Encyclopedia of Philosophy, "for a century he was one the most widely read philosophers ever. In particular, his Dictionnaire historique et critique was the single most popular work of the eighteenth century." It's not clear to me whether this means "the single most popular work of philosophy", or "the single most popular work, period" -- and in either case, I don't know how to check the assertion, since Pierre Bayle died in 1706, and snopes.com doesn't evaluate legends about 18th-century book sales.

One small piece of positive evidence: Thomas Jefferson's 1771 list of recommended books for starting a library, sent to his brother-in-law Robert Skipwith, does include "Bayle's Dictionary. 5 v. fol. pound 7.10." under the heading History. Antient. (£7.10 was a lot of money in 1771, when a teacher in England made about £16 a year, and a "high-wage" government employee about £100, according to Jeffrey G. Williamson, "The Structure of Pay in Britain, 1710-1911", Research in Economic History, 7 (1982), 1-54. quoted here. Certainly Bayle's Dictionary is the most expensive item in Jefferson's list, outranking "Blackstone's Commentaries. 4 v. 4to. pound 4.4", and " Cuningham's Law dictionary. 2 v. fol. pound 3", and "Voltaire's works. Eng. pound 4.")

All the same, I'll confess that I'd never heard of Bayle, before reading chapter seven of Anthony Grafton's The Footnote: A curious history (1997), which devotes a chapter to Bayle's Dictionaire Critique as one of the origins of the modern footnote. Grafton dates the invention of the modern citation by quoting a mid-18th-century letter from Hume to Walpole, apologizing for "my negligence in not quoting my authorities", since "that practice ... having been once introduc'd, ought to be follow'd by every writer". Grafton comments:

This clue, the most precise we have yet turned up, indicates that we should look for the origins of the historical footnote a generation or two before Hume -- sometime around 1700, or just before. And in fact, as Lionel Gossman and Lawrence Lipking have pointed out, one of the grandest nd most influential works of late seventeenth-century historiography not only has footnotes, but largely consists of footnotes, and even footnotes to footnotes. The vast pages of that unlikely best-seller, Pierre Bayle's Historical and Critical Dictionary, offer the reader only a thin and fragile crust of text on which to cross the deep, dark swamp of commentary.

The description of Bayle's Dictionary in the Stanford Encyclopedia continues:

The content of this huge and strange, yet fascinating work is difficult to describe: history, literary criticism, theology, obscenity, and much more, in addition to philosophical treatments of toleration, the problem of evil, epistemological questions, and much more. His influence on the Enlightenment was, whether intended or not, largely subversive. Said Voltaire: “the greatest master of the art of reasoning that ever wrote, Bayle, great and wise, all systems overthrows.”

The BNF's Gallica project offers scanned copies of several of Bayle's works, including the 1692 Projet et fragmens d'un dictionnaire critique, and a later 16-volume version of the whole work, as expanded by others.

Bayle's 1692 Projet (in the form of a letter to "Mr. du Rondel, Professeur aux Belles Lettres à Maestricht") seems to me to present his personality very vividly. This is someone who would have loved to live in the 21st century, and someone I would have enjoyed knowing. So I typed in a couple of segments -- one from the beginning, and one from the middle -- to give you the flavor.

Here's a quick and careless translation of one characteristic passage::

After having read the critique of a work, we feel disabused of several false facts that we took for true in reading it. We thus change from affirmation to negation; but if we happen to read a good response to that critique, we will certainly return in certain things to our original affirmation, while on the other hand we turn to the negation of other things, which we had believed on the testimony of the critique. We experience a similar change, when we come to read a good reply to the response. Now, isn't this likely to throw most readers into continual mistrust? Who will not be suspected of falsehood, by those who don't have in their hand the key of the sources? If an author puts things forward without citing where he got them from, we can believe that he speaks only from hearsay; if he cites sources, we fear that he may report the passage badly, or that he understood it badly, since we always learn by reading a critique, that there are many similar faults in the book that is criticized. What should we do then, to remove all these reasons for mistrust, since there are so many books that have never been refuted, and so many readers who don't have the books containing the rest of the literary disputes? Shouldn't we wish that there were in the world a Critical Dictionary that we could refer to, in order to ensure that what we find in the other collections, and in every other sort of book, is truthful? This would be the touchstone of other books, and you know a man (a bit precious in his language) who will not miss the chance to call this work The Insurance Company of the Republic of Letters.

In later years, this role was taken up by the encyclopedia and the public library; today, by the internet.

Here's the longer selection that I (carelessly) typed in -- I don't have time to translate the rest:

Monsieur,

Vous serez sans doute surpris de la resolution que je viens de prendre. Je me suis mis en tête de compiler le plus gros recuiel qu'il me sera possible des fautes qui se rencontrent dan le Dictionaires, & de ne me pas renfermer dans les espaces, quelque vastes qu'ils soient, mais de faire aussi des courses sur toutes sortes d'auteurs, quand l'occasion s'en presentera. Quoy, direz-vous, un Tel de qui on attendoit toute autre chose, & beaucoup plûtôt un Ouvrage de raisonnement, qu'un Ouvrage de compilation, va s'engager à une entreprise où il faudra faire plus de depense de corps que d'esprit; c'est une très fausse demarche. Il veut corriger les Dictionaires; c'est tout ce que luy auroient pu prescrire ses plus malicieux ennemis, s'il avoient eu sur sa destinée le même pouvoir qu'avoit Eurythée sur celle d'Hercule; c'est pis qu'aller combatre le monstres; c'est vouloir nettoyer les étables d'Augias; c'est enfin la penitence que l'on devroit imposer à ses brouillions, qui ont abusé de leur loisir & de la credulité des peuples, pour annocer au nom & en l'authorité de l'Apocalypse toutes sourtes de chimeres, jussit quod splendida bilis. Je le plains; que ne laissoit-il cette occupation à ses robustes savans, qui peuvent étudier seize heures par jour sans prejudice de leur santé, infatigables en citations, & en toutes autres fonctions de Copiste, bien plus propres à faire savoir au public les choses de fait, que celles de droit?

[...]

Vous avez vû un reflexion que m'a fournie la lecture de quelques-unes de ces disputes, qui contiennent reponse, replique, duplique &c. en voicy une autre sortie de la même source. Après avoir lue la Critique d'un Ouvrage, on se croit desabusé de plusieurs faits faux, que l'on avoit pris pour vrais en le lisant. On passe donc de l'affirmation à la negation; mais si l'on vient à lire une bonne reponse à cette Critique, on ne manque gueres à l'égard de certaines choses de revenir à sa premiere affirmation, pendant que d'autre côté on passe à la negation de certaines choses, qu'on avoit crues sur la foy de cette Critique. On éprouve une semblable revolution, quand on vient à lire un bonne replique à la reponse. Or cela n'est il pas capable de jetter la plus grande partie des lecteurs dan une defiance continuelle? Qu'y a-t-il qui ne puisse devenir suspect de fausseté, à ceux qui n'ont pas en main la clef des sources? Si un Auteur avance des choses sans citer d'où il les prend, on a lieu de croire qu'il n'en parle que par oui-dire; s'il cite, on craint qu'il ne raporte mal le passage, ou qu'il ne l'entende mal, quis qu'on ne manque gueres d'aprendre par la lecture d'une Critique, qu'il y a beaucoup de pareilles fautes dan le livre critiqué. Que fair donc, Monsieur, pour ôter tous ces sujets de defiance, y ayant un si grand nombre de livres qui n'ont jamais été refutez, & un si grand nombre de lecteurs, qui n'ont pas les livres où est contenue la suitte des disputes literaires? Ne seroit-il pas à souhaitter qu'il y eût au monde un Dictionaire Critique auquel on pût avoir recours, pour être assuré si ce que l'on trouve dan les autres Dictionaires, & dans toute sorte d'autre livres est veritable? Ce seroit la pierre de touche des autres livres, & vous conoissez un homme un peu precieux dan son langage, qui ne manqueroit pas d'apeller l'ouvrage en question, La chambre des assûrances de la Republique de Lettres.

Vous voyez là en gros l'idée de mon project. J'ay dessein de composer un Dictionaire, qui outre les omissions considerables des autres, contiendra un recueil des faussetez qui concernent chaque article. Et vous voyez bien, Monsieur, que si par example j'étois venu à bout de recueillir sous le mot Seneque, tout ce qui s'est dit de faux de cet illustre Philosophe, on n'auroit qu'à consulter cet article pour savoir ce que l'on devroit croire, de ce qu'on liroit concernant Seneca dans quelque livre que ce fût: car si c'étoit un fausseté, elle seroit marquée dans le recueil, & dès qu'on ne verroit pas dans ce recueil un fait sur le pied de fausseté, on le pourroit tenir pour veritable. Cela suffit pour montrer que si ce dessein étoit bien executé, il en resulteroit un Ouvrage très-utile, & très-commode à toutes sortes de lecteurs. Je sens bien, ce me semble, ce qu'il faudroit faire pour executer parfaitement cetter entreiprise, mais je sens encore mieux que je ne suis point coapable de l'executer. C'est pourquoy je me borne à ne produire qu'une Ebauche, laissant aux personnes qui ont la capacité requise le soin de la continuation, en case qu'on juge que ce Projet, rectifié par tout où il sera necessaire, merite d'occuper la plume des habiles gens.

October 28, 2006

Evil

On Thursday, John Quiggin posted something at Crooked Timber about "European Russia". In the very first comment, "marcel" took him to grammatical task:

Reading recent posts, it’s clear nearly everyone here knows more about Eastern Europe than me,

“Than me???” C’mon John, even you know more (grammar) than that.

After another few comments, some of which were actually about the topic of John's post, "christopher m" invoked an old post of mine in John's defense:

Language Log on the “than I”/”than me” contretemps.

Linguist Mark Liberman’s conclusion (based on the most authoritative descriptive grammar of English in existence): “As is often the case with such prescriptions, the underlying grammatical analysis [that would hold ‘than me’ incorrect] is faulty.”

You can read the rest of the discussion yourself, if you want, but there was one bit of it that I found amusing. In comment #13, "dearieme" agreed with my conclusion while attacking my profession:

“than me” is not only legit, but surely massively preferable – who on earth invented the cock-and-bull story about a [do] that’s “understood”? “me and my girlfriend”, on the other hand, is tosh.

And who invented the linguists’ quasi-religious doctrine about its being evil to prescribe? And do they apply it when bringing up their own children?

I'll leave it to Arnold Zwicky to determine which self-appointed authority deserves the blame for first inventing the theory that English than never takes an immediate complement. But dearieme's questions about "the linguists" deserve an answer.

First, let me distance myself from the view -- religious or otherwise -- that it's "evil to prescribe".

- Sometimes, as in the "than me" affair, prescription is based on mistaken analysis, false history or bad logic. This is foolish, but it's not evil.

- In other cases, prescription is based on resistance to innovation. This is usually futile, but it's not evil.

- It's not clear whether discussion about performance errors of various sorts should be considered prescriptive, but it's certainly not evil. And linguists don't recommend performance errors, though we sometimes study them.

- Some prescriptive advice deals with style, tone, or communicative effectiveness. Advice of this sort may be right or wrong, useful or useless, but it's not evil. Here at Language Log,we often have advice of this kind to offer, though we're careful to distinguish linguistic norms from stylistic preferences.

- In our discussions of eggcorns, snowclones, overnegations, linguifications and so forth, it's clear that we're talking about violations of lexical, syntactic, semantic or stylistic norms. We don't recommend such violations, though we often enjoy them.

- Publications often choose a "house style" that prescribes what to do with possessive plurals and the like -- such style books disagree, and linguists (like other people) sometimes disagree with particular choices, but there's no evil here.

As for the role of linguistic prescription in "bringing up [our] own children", I feel that there's a mistaken assumption in dearieme's question. As far as I can tell, the way to help kids master the orthographic, lexical, grammatical and stylistic norms of English is to make sure that they have plenty of good examples to follow, and plenty of practice in following them. My own parents sometimes corrected my spelling and my typographical errors (this role has now been taken over by Geoff Pullum), and I can recall my mother occasionally making fun of a phrase that she thought was pompous or infelicitous, but for the most part, I learned the norms of English from reading and listening to writers and speakers that I saw as models worthy of imitation.

Teaching kids the skills of practical linguistic analysis is also probably a good thing. (And explicit instruction in spelling would surely have done me good.) But that's different from putting explicit "rules" at the center of the process -- I'm skeptical that this is either necessary or effective. And if the "rules" are the standard list of mistaken and incoherent prescriptivist bugbears, then ineffectiveness is the best you can hope for. Still, contemporary linguistic prescriptivism is not evil. Frequently foolish, usually futile, and often hypocritical, yes. Evil, no.

[Note: It's possible that "dearieme" is also a victim of the confusion that Geoff Pullum dissected in his 1/26/2005 post "'Everything is correct' versus 'nothing is relevant'".]

[Update -- Emily Bender writes:

I can think of one case where prescriptivism is evil, or at least is inspired by another evil (namely racism or classism): When speakers of minority dialects are told that their native varieties are illogical etc. because they don't conform to the (prescriptive) norms of the local standard, or worse, told that they themselves must be lacking in intellectual ability to be using such a variety. In such cases, prescriptive grammar becomes the handmaiden of institutionalized racism (or classism). It might not be the root of the evil, but it can be a means through which those in power belittle, demean or otherwise demoralize some segment of the population.

True. Though I'd question the use of the word "minority" here -- in most places and times, speakers of the favored, standard varieties of national languages have been a minority of the population, and usually a rather small one. At the risk of being prescriptive, let me suggest that we shouldn't generalize the recent usage of "minority" to mean "non-white" so that "minority" comes to mean "non-elite, common people", i.e. the majority. (More on the terminological issue here.) ]

October 27, 2006

Terrorists Target the CIIL

The Hindu is reporting that two Pakistani men arrested today as terrorists in Mysore planned to attack the Vikasa Soudha in Bangalore, a replica of the more famous Karnataka State capitol building the Vidhana Soudha that now houses the ministerial offices, and the Central Institute for Indian Languages in Mysore. I guess we should take this as a back-handed compliment - linguistics is important enough to be a target for terrorists.

Here at Language Log Plaza, where we use the grammatical function hierarchy rather than numbers or colors to denote threat levels, we are now at DEFCON "Indirect Object".

Envy, navy, whatever

Consider the lead of a recent story by Celeste Biever, "It's the next best thing to a Babel fish", New Scientist, 10/26/2006 :

Consider the lead of a recent story by Celeste Biever, "It's the next best thing to a Babel fish", New Scientist, 10/26/2006 :

Imagine mouthing a phrase in English, only for the words to come out in Spanish. That is the promise of a device that will make anyone appear bilingual, by translating unvoiced words into synthetic speech in another language.

The device uses electrodes attached to the face and neck to detect and interpret the unique patterns of electrical signals sent to facial muscles and the tongue as the person mouths words. The effect is like the real-life equivalent of watching a television show that has been dubbed into a foreign language, says speech researcher Tanja Schultz of Carnegie Mellon University in Pittsburgh, Pennsylvania.

Existing translation systems based on automatic speech-recognition software require the user to speak the phrase out loud. This makes conversation difficult, as the speaker must speak and then push a button to play the translation. The new system allows for a more natural exchange. "The ultimate goal is to be in a position where you can just have a conversation," says CMU speech researcher Alan Black.

You might not guess from this -- or from the rest of the article -- that (a) the cited research does not make any contribution to automatic translation, but rather simply attempts to accomplish practical speech recognition in a single language from surface EMG signals rather than from a microphone; (b) the (monolingual) recognition error rates from EMG signals are now at least an order of magnitude worse than from microphone input, yielding from 20-40% word errors even on simple tasks with very limited vocabularies (16 to 108 words); (c) there are significant additional problems, including signal instability from variable electrode placement, which would cause performance in real applications to be much worse, and for which no solution is now known.

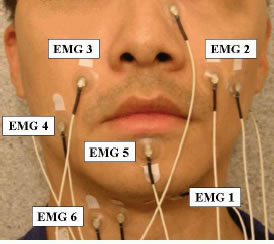

Several other news sources have picked up this story: (BBC news) "'Tower of Babel' translator made"; (BBC Newsround) "Instant translator on its way"; (inthenews.co.uk) "Device promises ability 'to speak in tongues'"; AHN: "Language Translator Being Developed by U.S. Scientists". (Discovery Channetl) "Scientists one step closer to Star Trek's 'universal translator'". As is often the case, the BBC will probably be the vector by which this particular piece of misinformation infects the world's news media. (See this link for an earlier example.) Especially interesting are the staged (or stock) photographs, with no visible wires, which the BBC chose to use to illustrate its stories (compare the picture above, which comes from one of the CMU researchers' papers):

|

|

Now the quoted people from CMU -- Tanja Schultz and Alan Black -- are first-rate speech researchers. I was at CMU 10 days ago, giving a talk in the statistics department, and I spent a fascinating hour learning about some of Alan Black's current work in speech synthesis. (I should really be telling you about that. Why have I let myself be tempting into cutting another head off of the science-journalism hydra? This is the occupational disease of blogging, I guess.) And Tanja and Alan really are involved in a team that has been doing great work on the (very hard) problem of speech-to-speech translation.

But these news stories -- like most science reporting in the popular press -- are basically fiction. This is not as bad as the cow-dialect story, in that there is actually some science and engineering behind it, not just a PR stunt. However, these stories give readers a sense of where the research team would like to get to, but no sense whatever of where the technology is right now, what contributions the recent research makes, and what the remaining problems and prospects are. It doesn't surprise me that the BBC uses the occasion as an inspiration for free-form fantasizing, but it's disappointing that New Scientist couldn't do better.

For those of you who care what the facts of the case are, here's a summary of two recent papers by the CMU group, along with links to the papers themselves.

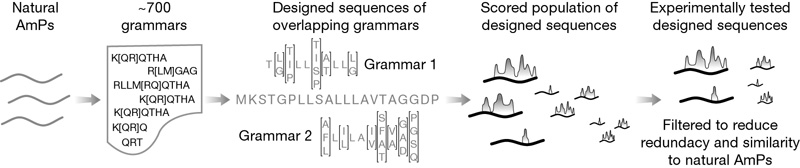

The first one is Szu-Chen Jou, Tanja Schultz, Matthias Walliczek, Florian Kraft, and Alex Waibel, "Towards Continuous Speech Recognition Using Surface Electromyography", International Conference of Spoken Language Processing (ICSLP-2006), Pittsburgh, PA, September 2006.

This paper points out the first big challenge here:

EMG signals vary a lot across speakers, and even across recording sessions of the very same speaker. As a result, the performances across speakers and sessions may be unstable.

The main reason for variability across sessions is that the signals depend on the exact positioning of the electrodes. The CMU researchers didn't tried to solve this problem, but instead avoided it:

To avoid this problem and to keep this research in a more controlled configuration, in this paper we report results of data collected from one male speaker in one recording session, which means the EMG electrode positions were stable and consistent during this whole session.

The goal of the research reported in this paper was to compare the performance in EMG-based speech recognition of different sorts of signal processing. It's worth mentioning that more is required here than just to "simply attach some wires to your neck", as one of the BBC stories puts it:

The six electrode pairs are positioned in order to pick up the signals of corresponding articulatory muscles: the levator angulis oris (EMG2,3), the zygomaticus major (EMG2,3), the platysma (EMG4), the orbicularis oris (EMG5), the anterior belly of the digastric (EMG1), and the tongue (EMG1,6) [3, 6]. Two of these six channels (EMG2,6) are positioned with a classical bipolar configuration, where a 2cm center-to-center inter-electrode spacing is applied. For the other four channels, one of the electrodes is placed directly on the articulatory muscles while the other electrode is used as a reference attaching to either the nose (EMG1) or to both ears (EMG 3,4,5). [...]

Even so, they apparently needed to place eight electrodes to get six usable signals:

...we do not use EMG5 in our final experiments because its signal is unstable, and one redundant electrode channel ... has been removed because it did not provide additional gain on top of the other six.

The recognition task was not a very hard one:

The speaker read 10 turns of a set of 38 phonetically-balanced sentences and 12 sentences from news articles. The 380 phonetically-balanced utterances were used for training and the 120 news article utterances were used for testing. The total duration of the training and test set are 45.9 and 10.6 minutes, respectively. We also recorded ten special silence utterances, each of which is about five seconds long on average. [...]

So the test set was ten repetitions of each of 12 sentences. To make it easier, they limited the decoding vocabulary to the 108 words used in those 12 sentences:

Since the training set is very small, we only trained context-independent acoustic models. Context dependency is beyond the scope of this paper. The trained acoustic model was used together with a trigram BN language model for decoding. Because the problem of large vocabulary continuous speech recognition is still very difficult for the state-of-the-art EMG speech processing, in this study, we restricted the decoding vocabulary to the words appearing in the test set. This approach allows us to better demonstrate the performance differences introduced by different feature extraction methods. To cover all the test sentences, the decoding vocabulary contains 108 words in total. Note that the training vocabulary contains 415 words, 35 of which also exist in the decoding vocabulary.

The baseline system that they adapted to use surface EMG signals as input wa the Janus Recognition Toolkit (JRTk)

The recognizer is HMM-based, and makes use of quintphones with 6000 distributions sharing 2000 codebooks. The baseline performance of this system is 10.2% WER on the official BN test set (Hub4e98 set 1), F0 condition.

That's the published 1998 HUB4 evaluation data set and one of the conditions specified in this evaluation plan. They don't report how well their baseline acoustic recognizer did on the task they posed for the surface-EMG recognizer. Given that the acoustic system that has only a 10.2% Word Error Rate on a task with multiple unknown speakers and unlimited vocabulary, my guess is that in a speaker-trained test on sentences containing 108 words known in advance, its performance should be nearly perfect.

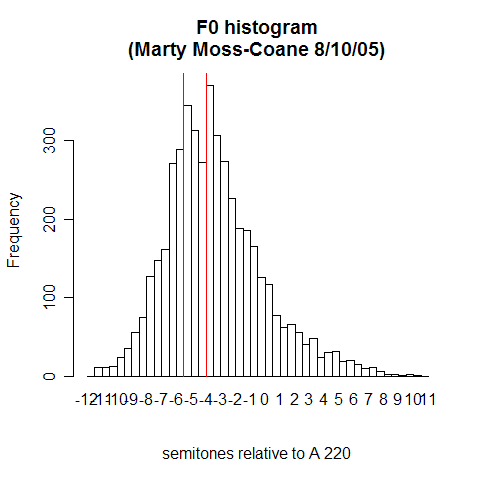

How did the surface-EMG-based recognizer do? It depended on the signal-processing method used, which was the point of the research. Here's the summary graph:

The underlying input in all cases was the set of signals coming from the surface EMG electrodes. The different bars represent the error rates given different kinds of signal processing applied to these signals. The details of the signal-processing alternatives are interesting (read the paper if you like that sort of thing, as I do), but the differences are not relevant here -- the point is that the best method they could find, adapted to the particular electrode placements of this experiment on this speaker, with decoding limited to the exact 108 words in the test set, had a word error rate of a bit over 30%.

What this shows, obviously, is that speech recognition from surface EMG signals is indeed a research problem.

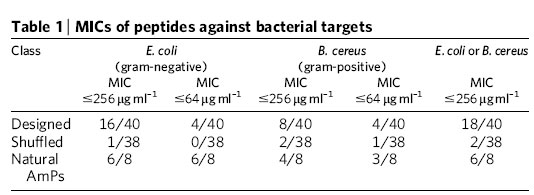

Here's the second paper: Matthias Walliczek, Florian Kraft, Szu-Chen Jou, Tanja Schultz, and Alex Waibel, "Sub-Word Unit based Non-audible Speech Recognition using Surface Electromyography", (ICSLP-2006), Pittsburgh, PA, September 2006.

This paper looks at a variety of alternative unit choices for EMG-based speech recognition: words, syllables, phonemes.

To do this, the researchers

... selected a vocabulary of 32 English expressions consisting of only 21 syllables: all, alright, also, alter, always, center, early, earning, enter, entertaining, entry, envy, euro, gateways, leaning, li, liter, n, navy, right, rotating, row, sensor, sorted, sorting, so, tree, united, v, watergate, water, ways. Each syllable is part of at least two words so that the vocabulary could be split in two sets each consisting of the same set of syllables.

This time they used two subjects, one female and one male, and recorded five sessions for each speaker. Some limitations were imposed to make the task easier (for the algorithms, not for the speakers):

In each recording session, twenty instances of each vocabulary word and twenty instances of silence were recorded nonaudible. ... The order of the words was randomly permuted and presented to the subjects one at a time. A push-to-talk button which was controlled by the subject was used to mark the beginning and the end of each utterance. Subjects were asked to begin speaking approximately 1 sec after pressing the button and to release the button about 1 sec after finishing the utterance. They were also asked to keep their mouth open before the beginning of speech, because otherwise the muscle movement pattern would be much different whether a phoneme occurs at the beginning or the middle of a word.

The first phase of testing compared the performance of the different unit sizes and features:

First the new feature extraction methods were tested. Therefore, all recordings of each word were split into two equal sets, one for training and the other for testing. This means that each word of the word list was trained on half of the recordings and tested on the other half. After testing sets were swapped for a second iteration. All combinations of the new feature extraction methods were tested, a window size of 54 ms and 27 ms, with and without time domain context feature. We tested the different feature sets on a word recognizer, a recognizer based on syllables as well as phonemes.

The results?

In other words, a speaker-dependent isolated-word recognition system, with a 32-word vocabulary, had a word error rate of about 20% using EMG signals as input. Again, the researchers don't tell us what a state-of-the-art acoustic system would do on this task -- my prediction would be an error rate in the very low single digits, roughly an order of magnitude lower than the error rate of the EMG system. Again, a demonstration that EMG-based recognition is a hard research problem -- much further from solution than an acoustically-based speech recognition, which is not an entirely solved problem either, as far as that goes.

The researchers then went ahead and tried a harder problem -- using the subword units to test words not in the training set:

While in the previous tests seen words were recognized, we test in this section on words that have not been seen in the training (unseen words). Therefore, the vocabulary was split into two disjoint sets, one training and one test set. The words in the test set consist of the same syllables as the words in the training set, so that all phonemes and syllables could be trained. For an acoustic speech recognition system training of phonemes allow the recognition of all combinations of these phonemes and so the recognition of all words consisting of these combinations. This test investigates whether EMG speech recognition performs well for context sizes used in ASR or whether the context is much more important and goes beyond triphones. To do so we tested both a phoneme based system and a syllable based system. While the syllable based system covers a larger context, the phoneme based system can obtain more training data per phoneme.

As expected, the results on unseen words were considerably worse -- around 40% word error rate on a 16-word vocabulary:

Here's the confusion matrix:

The researchers comment:

From the mapping between phonemes and muscle movements we derived that the muscle movement pattern for vocalizing the words navy and envy are quite similar (except the movement of the tongue, which is only barely detected using our setup). So the word envy is often falsely recognized as the word navy.

In an unlimited-vocabulary system, this effect will be multiplied many-fold -- leaving out the issues of stable electrode placement and acquisition of adequate training data. It's obvious that more research is needed, to say the least, before this system could be the front end to a communicatively effective conversational translation system.

[As I read the papers, the cited experiments don't test the EMG from subvocalizing -- silently mouthing words, much less thinking about silently mouthing words -- but rather the EMG generating while speaking out loud. I believe that EMG from subvocalizing will be more variable and thus harder to recognize. How much of a problem this might turn out to be is unclear.]

[Update -- Julia Hockenmaier writes, in respect to the New Scientist:

My flatmate in Edinburgh had a subscription, so I used to read this over breakfast. I don't recall ever seeing a computer-science/AI related article that didn't seem like complete fiction...

Oh well, I guess I was fooled by the packaging into thinking that this is a publication that takes science (and engineering) reporting seriously.]

[Update #2 -- Blake Stacey writes:

This may be germane to the recent talk around Language Log Plaza about New Scientist magazine, and in particular to Julia Hockenmaier's comment posted in the update. Recently, New Scientist drew a hefty amount of flak from the physics community for their reportage on the "EmDrive", the latest in a long series of machines which promise easy spaceflight at the slight cost of violating fundamental laws of nature. First to criticize the magazine was the science-fiction writer Greg Egan, whose open letter can be found here:

http://golem.ph.utexas.edu/category/2006/09/a_plea_to_save_new_scientist.html

The events which followed may be of interest to those who study how disputes unfold on the Internet. Since the discussion spread erratically around the blogosphere (touching also upon New Scientist's Wikipedia page), it is difficult to get the whole story in one place. I wrote up my perspective on the incident at David Brin's blog:

http://davidbrin.blogspot.com/2006/10/architechs-terrific-and-other-news.html

(Unfortunately, the Blogspot feature for linking directly to comments appears to be broken.)

]

October 26, 2006

The therapeutic power of rhyme

Two days ago, Scott Adams, author of Dilbert, reported some extraordinary news on his blog. Poetry has cured him. Not just any poetry, either, but rhymed and metered verse. Well, specifically, a nursery rhyme:

Jack be nimble, Jack be quick.

Jack jump over the candlestick.

Fans will be glad to know that those trochees haven't cured Scott's dyspeptic reaction to modern management, office politics, and the eternal cycle of suckers and cynics. Today's strip:

Rather, Adams was cured of an incurable disease: spasmodic dysphonia.

Here's Wikipedia on the syndrome: