March 31, 2008

Subjective tense

William Safire's most recent "On Language" column (NYT Magazine 3/30/08, p. 18) looks at the now-famous quote from Geraldine Ferraro, "If Obama was a white man, he would not be in this position." Then comes a parenthetical digression on grammar:

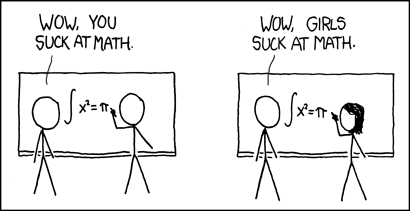

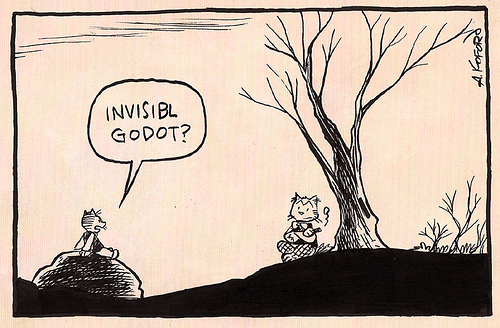

Yes, "subjective tense", in a grammar peeve. Has all our education been for naught?

Mr. Verb was on the case (or, you might say, mood) immediately:

Our most recent expedition into the land of the passive tense was led by Geoff Pullum here, quoting an earlier posting of mine about how tense gets used as an all-purpose label for a grammatical category, pretty much any grammatical category, of verbs (and maybe other parts of speech as well). My guess is that tense is just the first such technical term that people come across in school, so that's the word they use when they want to sound educated and technical. It's a kind of meta-hypercorrection.

Apparently, we haven't noted subjective for subjunctive on Language Log, though some time ago Mark Liberman and I commented on "passive gerund" for "progressive aspect", again from someone who really ought to know better.

While I'm on the subject of subjunctives, let me express amazement, once again, that so many people are so exercised about the use of the ordinary past rather than a special counterfactual form (often called "the subjunctive" or "the past subjunctive") for expressing conditions contrary to fact. The special counterfactual form is incredibly marginal: it's distinct from the ordinary past for only one verb in the language, BE, and then only with 1st and 3rd person singular subjects, so it does hardly any work. And using the ordinary past rather than the special counterfactual form virtually never produces expressions that will be misunderstood in context. Yes, you can construct examples that are potentially ambiguous out of context, but in actual practice there's almost never a problem, as you can see from two facts:

- all conditionals with past tense verb forms in them, for every single verb in the language other than BE, and for BE with 2nd person or plural subjects, are potentially ambiguous out of context, yet in actual practice, there's almost never a problem; and

- the nit-pickers are, in my experience, flawless at determining when a was in a conditional is to be understood counterfactually (and so "should be" replaced by were) -- which means that they understood the speaker's or writer's intentions perfectly.

As a result, appeals to "preserving distinctions" that are "important for communication" and to "avoiding ambiguity" are baseless and indefensible in this case. There's absolutely nothing wrong with using the special counterfactual form — I do so myself — but there's also nothing wrong with using the ordinary past to express counterfactuality. It's a matter of style and personal choice, and no matter which form you use, people will understand what you are trying to say.

But somehow preserving the last vestige of a special counterfactual form has become a crusade for some people. There are surely better causes.

Ask Language Log: Comparing the vocabularies of different languages

Michael Honeycutt writes:

I emailed Steven Pinker with a question and he told me that I should contact you.

I am a college freshman who plans to study Modern Languages and I am fascinated with linguistics. The question that I had for Dr. Pinker was in regards to the active vocabularies of the major modern languages. This may be a novice question and I apologize ahead of time. I am curious if there are any studies on the subject of the percentage of a language's active vocabulary used on a daily basis. I have been looking into this for a couple of weeks in my spare time and the information I am finding is rarely in agreement.

For example, according to Oxford University Press, in the English language a vocabulary of 7000 lemmas would provide nearly 90% understanding of the English language in current use. Of these 7000 lemmas, what percentage will the average speaker or reader experience on a daily or weekly basis? Are there any particular languages or language families that have a significantly higher or lower percentage of words encountered on a day to day basis? Are there any studies on whether, for example, speakers of Latin used more or less vocabulary in their daily lives than speakers of a modern Romance language?

Those are interesting questions. The answers are also interesting -- at least I think so -- but they aren't simple. Let me try to explain why.

The executive summary: Depending on what you count and how you count them, you can get a lot of different vocabulary-size numbers from the same text. And then once you decide on a counting scheme, there's an enormous amount of variation across speakers, writers, and styles. And in comparing languages, it's hard to decide what counts as comparable counting schemes and comparable ways to sample sources.

First, I'm going to dodge part of your question. It's hard enough to count the words that someone speaks, writes, hears, or reads in a given period of time, and to compare these counts across languages. But when you bring in a concept like "understanding" (much less "90% understanding"), you open up another shelf of cans of worms. How deep does understanding need to go? Do I understand what oak means if I only know that it's a kind of tree? Or do I need to be able to recognize the leaves and bark of some kinds of oaks, or of all kinds of oaks? Does it count as "understanding" if I can sort of figure out what a word probably means from its use in context, even if I didn't know it before? Do I "understand" a proper noun like "Emily Dickinson" or "Arcturus" simply by virtue of recognizing its category, or do I need to know something about its referent (if any)?

And if you pose the question as "the percentage of a language's active vocabulary used on a daily basis", you'll also need to define an even more elusive number, the size of "a language's active vocabulary". What are the boundaries of a "language"? What counts as "active"?

So for now, let's put aside the problem of understanding, and the whole notion of "a language's active vocabulary", and just concentrate on counting how many words people speak, write, hear, or read in their daily lives. This problem is hard enough to start with.

Consider the counting problem with respect to the text of your question. Your note uses the strings language, languages, language's. The word-count tool in MS Word will (sensibly enough) count each of these as one "word". But how many different vocabulary items -- word types -- are they? Are these three items, just as written? Or should we count the noun language plus the plural marker -s and the possessive 's? Or should we just count one item language, which happens to occur in three forms?

Your question also includes the strings am, are, be, is, was -- are these five distinct vocabulary items, or five forms of the one verb be? How about the strings weeks, weekly, day, daily? Is weekly the same vocabulary item as an adjective ("on a weekly basis") and an adverb ("published weekly")? If we analyze weekly as week + -ly and significantly as significant + -ly, are those (sometimes or always) the same -ly?

What about the noun use (in "daily use") and the participle used ("used on a daily basis"). Are those different words, or different forms of the same word? Is the participle used the same item, as a whole or in parts, as the preterite used?

Should we unpack 90% as "ninety percent" (two words) or "ninety per cent" (three words)? And is percentage a completely different vocabulary item, or is it percent (or per + cent) + -age?

Depending on the answers to these five easy questions about 17 character strings, we might count as many as 18 vocabulary items or as few as 10. And as we scan more text, this spread will grow, without any obvious bounds.

You imply a certain answer to such questions by using the term lemma, meaning "dictionary entry". But this doesn't entirely settle the matter, even for the simple questions we've been asking so far. For example, the Oxford English Dictionary has two entries for "use", one for the noun and another for the verb; while the American Heritage Dictionary has just one entry, with subentries for the noun and verb forms.

Answers to various kinds of questions about word analysis will have different quantitative impacts on word counts in different languages. For example, like most languages, English has plenty of compounds (newspaper, restroom), idioms (red herring, blue moon), and collocations (high probability vs. good chance) whose meaning and use are not (entirely) compositional. It's not obvious where to stop counting. But our decisions about such combinations will have an even bigger impact on Chinese, where most "words" are compounds, idioms, or collocations, made out of a relatively small inventory of mostly-monosyllabic morphemes (e.g. 天花板 tian hua ban "ceiling" = "sky flower board"), and where the writing system doesn't put in spaces, even ambiguously, to mark the boundaries of vocabulary items.

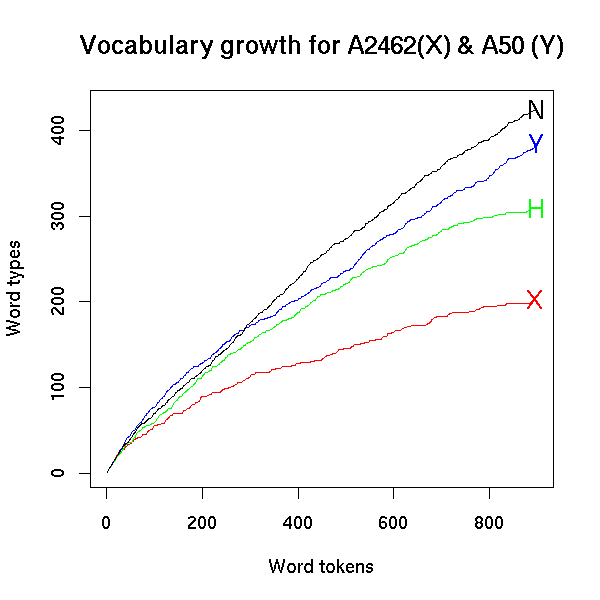

However we choose to count, we're going to get a lot individual variation. I gave some examples in "Vicky Pollard's revenge" (1/2/2007), where I compared the rate of vocabulary display of in 900 words from four different English-language sources:

The x axis tracks the increase in "word tokens", i.e. roughly what MS Word's wordcount tool would count. The y axis shows the corresponding number of "word types", which is a proxy for the number of distinct vocabulary items. Here, it's just what we get by removing case distinctions, splitting at hyphens and apostrophes, and comparing letter strings. If we used some dictionary's notion of "lemma", all the curves would be lower, but they'd still be different, and in the same general way.

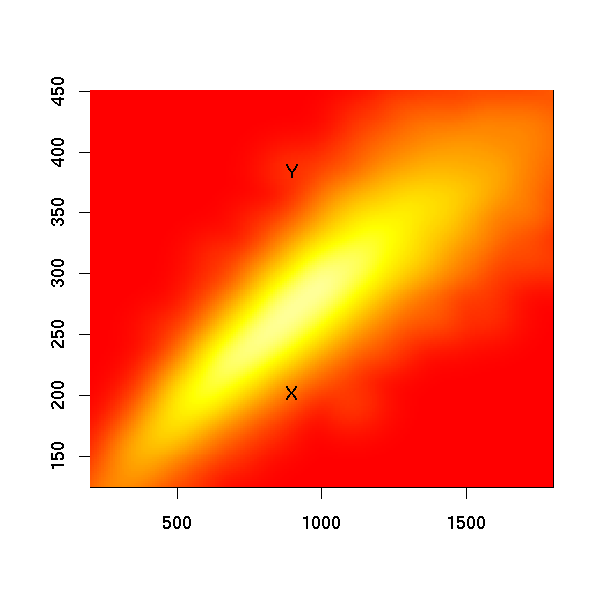

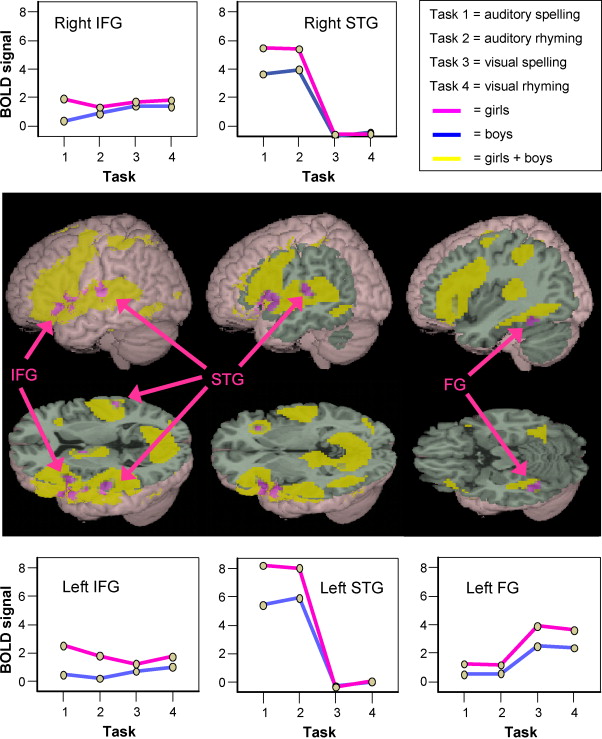

Sources X and Y are conversational transcripts; sources H and N are written texts. You can see that after 900 words, Y has displayed roughly twice as many vocabulary items as X, and that the gap between them is growing. There's a similar relationship between the written texts H and N. Given these individual differences, comparing random individual speakers or writers isn't going to be a very reliable way to characterize differences between languages. We need to look at the distribution of such type-token curves across a sampled population of speakers or writers, like this for English conversational transcripts (taken from the same Vicky Pollard post, with the endpoints of the curves for speakers X and Y superimposed):

But if we had comparable sampled distributions for Spanish or German or Arabic, we could compare the average or the quantiles or something, and answer your question, right?

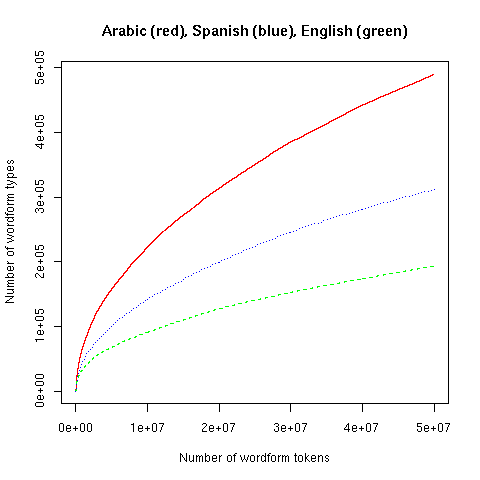

Sort of. Here are similar type-token plots for 50 million words of newswire text in Arabic, Spanish, and English:

Does this indicate that Spanish has a much richer vocabulary than English, and that Arabic is lexically even richer yet? No, it mainly tells us that Spanish has more morphological inflection than English, and Arabic still more inflection yet.

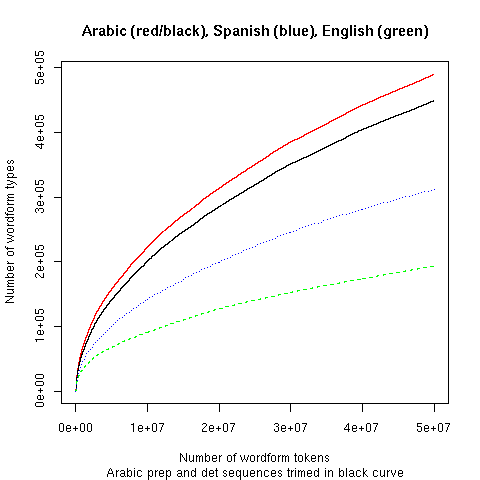

These curves also reflect some arbitrary orthographic conventions. Thus Arabic writes many word sequences "solid" that Spanish and English would separate by spaces. In particular, prepositions and determiners are grouped with following words (thus this might be aphrase ofenglish inthearabic style). Just splitting (obvious) prepositions and articles moves the Arabic curve a noticeable amount downward:

Arabic text has some other orthographic characteristics that raise its type-token curve by at least as much, such as variation in the treatment of hamza. And in large corpora in any language, the rate of typographical errors and variant spellings becomes a very significant contributor to the type-token curve.

But if we harmonized orthographic characteristics, corrected and regularized spelling, and also unpacked inflections and regular derivations, would these three curves come together? I think so, though I haven't tried it in this particular case.

But remember that different sources of speech transcriptions or written text within a given language may display vocabulary at very different rates. To characterize differences between languages, we'd have to compare distributions based on many sources in each language. However, there may be no non-circular way to choose our sources that doesn't conflate linguistic differences with socio-cultural differences.

Let's consider two extreme socio-cultural situations in which the same language is spoken:

(1) High rate of literacy, and a large proportion of "knowledge workers". Many publications aimed at various educational levels.

(2) Low rates of literacy; most of population is subsistence farmers or manual laborers. Publications aim only at intellectuals and technocrats (because they're the only literate people with money).

Given the connections between educational level and vocabulary that we see in American and European contexts, we'd expect a random sample of speakers from situation (1) to have significantly higher rates of vocabulary display than a comparable sample of speakers from situation (2). That's because speakers from (1) tend to have more years of schooling than speakers from (2).

On the other hand, we'd expect a random sample of published texts from situation (1) to have a significantly lower rate of vocabulary display than a comparable sample of texts from situation (2). That's because all the available texts in situation (2) are elite broadsheets rather than proletarian tabloids.

We could go on in this way for a whole semester, or a whole career. But please don't let me discourage you! Whatever the answers to your questions turn out to be, the search will bring up all sorts of interesting stuff. I've only scratched the surface.

If you're interested in taking this further, here are a few inadequate suggestions:

- My lecture notes on morphology from Linguistics 001.

- The Natural Language Toolkit, and the associated book. You might start with Chapter 3, Words.

- My lecture notes (from Cognitive Science 502) on "Statistical estimation for Large Numbers of Rare Events".

- Harald Baayen's book, Word Frequency Distributions.

Motivated punctuational prescriptivism

Further to my remarks about colon rage, Stephen Jones has pointed out a very reasonable structural factor that might influence the use of post-colon capitalization, regardless of the putative dialect split (between a British no-caps policy and an American pro-caps policy): capitalization is strongly motivated, he suggests, when there is more than one sentence following the colon and dependent on what is before it. Jones offers these well-chosen examples to illustrate:

- In order to protect your computer, you should do the following: run a trustworthy anti-virus system such as AVG and keep it updated.

- Computers have become easier to use in various ways since the beginning of the decade: They no longer need periodic reboots almost daily. You can run multiple programs at the same time and never run out of system resources, since that bug disappeared with Win ME. The infrastructure of the telecommunications system is much more robust than before, and dropped connections are a rarity. And finally there has been a consolidation of software vendors, which means that software now is better tested and has more resources behind it.

He comments: "In the first example what comes after the colon remains part of the previous sentence. The punctuation hierarchy of period, colon, semi-colon remains in place. In the second what comes after the colon consists of several sentences, and thus the punctuation hierarchy is broken."

I think this is exactly right. Of course, as Jones notes, one could re-punctuate the second example with semicolons for all periods except the last. But that would create a rather long and cumbersome sentence:

Computers have become easier to use in various ways since the beginning of the decade: they no longer need periodic reboots almost daily; you can run multiple programs at the same time and never run out of system resources, since that bug disappeared with Win ME; the infrastructure of the telecommunications system is much more robust than before, and dropped connections are a rarity; and finally there has been a consolidation of software vendors, which means that software now is better tested and has more resources behind it.

And it would not solve the problem in a case where one or more of the independent clauses involved independently contained a semicolon; in fact the result would be Pretty clearly ungrammatical. This can be illustrated by modifying Jones's second example to introduce independently motivated semicolons inside some of the four post-colon sentences, and then the result of semicolonization is an unpleasant structural chaos:

*Computers have become easier to use in various ways since the beginning of the decade: they no longer need periodic reboots almost daily; you can run multiple programs at the same time and never run out of system resources; that bug disappeared with Win ME; the infrastructure of the telecommunications system is much more robust than before; dropped connections are a rarity; and finally there has been a consolidation of software vendors; software now is better tested and has more resources behind it.

Try to count the separate points made after the colon now, and it is quite unclear whether it should be counted as four, five, six, or seven.

So the point is that clear signalling of structure is best achieved by capitalizing the first letter of each of the four sentences that follow the colon and are dependent on it. The four sentences are items in a list: a list of four ways in which computers have become easier to use, and it is best for them all to be capitalized rather than make the first one an exception.

Jones reminds us that there can be such a thing as an intelligently supported prescriptive recommendation about syntax and punctuation. He offers a motivated critical analysis of how the resources of the written language can best be deployed to signal structure and thus meaning. This is the sort of discussion of language that (as my Language Log colleague Geoff Nunberg has often pointed out) used to be a feature of the discussion of language for general intellectual audiences in the 17th and 18th centuries, but had all but died out by the 20th, to be replaced by shallow ranting and dialect hostility.

I would have to agree (and let me make this observation before you do!) that Language Log has occasionally carried some shallow counter-ranting against the shallow rants of the prescriptivists with whom it has disagreed. This is undeniable (especially by me). Why do we do that? Why do we follow the spirit of the times instead of standing firm against it and offering calm reason?

In my case, some of my over-the-top excoriations of ignorant and intolerant prescriptivism have had purely humorous intent. But there have perhaps been other cases in which I had decided that sometimes fire needs to be fought with fire.

The obvious objection to fighting fire with fire is that professional firefighters who are called to a house or apartment or car on fire do not do this. Flamethrowers and napalm are not standardly stowed on the fire engines; the techniques used, besides rescue equipment, are such things as high-power hoses and flame-retardant chemical foams.

However, in major forest fires, creation of firebreaks by controlled burning of specific areas is sometimes risked. I think that I (like some others at Language Log Plaza) may have felt that the 20th-century outbreak of under-informed fury and resentment that has replaced educated critical judgment about grammatical matters is more like a forest fire than a car fire.

Language Log writers do also, on many occasions, provide some calm analysis of relevant facts. That is our analog of water from hydrants and controlled spraying of chemical foam.

Ultimately, I think linguists would in general favor a return to cautious, revisable, and evidence-based criticism of prose structure. It is wildly wrong to think (as so many in the vulgar prescriptivist tradition seem to think) that descriptive linguists favor anarchy in usage and abandonment of grammatical standards. Theoretical syntax stands or falls on the distinction between what is grammatically well formed and what is not (as I have argued in detail in a recent academic paper). Without that distinction, we have no subject matter that really distinguishes us from mammalian ethologists. If everything is grammatical, it is the same as if nothing is.

I work in a department with a distinguished history of research on the earlier stages of English, where scribal error is a common source of evidence concerning the grammar and pronunciation of long-dead dialects of the Middle English or Old English periods. If everything is grammatical, then scribal error does not exist and cannot exist. What could have been seen as an interesting scribal error in a manuscript would have to be described instead as merely a piece of behavior by a hairless bipedal primate that made a sequence of marks of such-and-such shape being made in ink 900 years ago, apparently for communicative purposes.

Linguists almost always take some sequences of marks (or words, or speech sounds) to be "grammatical", i.e., clearly in conformity with a tacitly known system of principles that also defines unboundedly many other sequences of marks as being in conformity. And they take other sequences to be clearly "ungrammatical" (not in conformity). Still other sequences are of uncertain status, and provide material for debates about what exactly the principles are, and how the particular case should be judged.

The system of principles for punctuation (see The Cambridge Grammar of the English Language, Chapter 20, primarily written by Geoffrey Nunberg, Ted Briscoe, and Rodney Huddleston) is much more fixed and conventionally agreed than most aspects of spoken language, but even with punctuation there are subtleties and divergences, and debate between excellent writers, editors, and publishers concerning what the correct set of principles should actually be said to be.

Stephen Jones shows above that one of the factors that is and should be relevant to whether the first letter after the colon should be capitalized is whether the text following a colon consists of a list of two or more sentences semantically dependent on the material before the colon. He doesn't call anyone appalling or dismiss anyone as uncouth. He gives reasons for a prescriptive proposal. Good ones.

March 30, 2008

Closure

In my last posting on open vs. closed, I looked at the question of why signs on shops and the like oppose these two words, and not opened vs. closed, or open vs. close (both of which would be morphologically parallel in a way that open vs. closed is not). I assumed, but did not say explicitly, that what we want for the signs is two ADJECTIVES with appropriate meanings, and then explained that opened wouldn't do because it was pre-empted by open, and noted

And people wrote to dispute, or at least query, my claim about close. I will now try to fend off these criticisms.

Since my claim was made in the context of selecting adjectives to put on signs, I didn't go on to stipulate that what we wanted was an adjective of current English, in general use, with the appropriate meaning, and usable on its own, solo, on a sign -- that is, something people reading the signs would understand easily. To exclude the adjective close that's a homograph of the verb close, but is not in current general use in an appropriate meaning, I added a stipulation about pronunciation. Unfortunately, that wasn't enough stipulation, and a fair number of readers read the "absence" claim above out of context.

Now, into some messiness. I'll need to distinguish various lexemes spelled CLOSE by pronunciation (/klos/ vs. /kloz/), part of speech (adjective A, verb V, or noun N), and meaning. The historical story includes the following actors:

2: an A /klos/ 'closed, shut' (not at all its modern meaning), in the OED from ca. 1325;

3: a N /klos/ 'an enclosed place' (still in use, especially in British English), in the OED from 1297;

4: a N /kloz/ 'act of closing, conclusion', a N derived from the V /kloz/ (still in use), in the OED from 1399.

Item #2 has a very complex semantic history, with a variety of meanings branching off in various directions over the centuries: 'confined, narrow' (close streets), 'concealed, hidden' (close secrecy), 'private, secluded' (a close parlour), 'stifling' (close weather), [of vowels] 'pronounced with partial closing of the lips', 'stingy' (close with his money), and more; not all of these are still in use, and many of them are used only in very restricted contexts. As for the original #2, the OED's most recent cites are from 1867 (Trollope: a close carriage 'a closed carriage') and 1873 (close hatches 'closed hatches'). These have attributive (rather than predicative) uses, as do the cites for #2 going way back. But what we need for signs is a predicative adjective, and in any case the attributive uses are no longer available to modern speakers.

What we DO have in current English, in general use, and usable both predicatively and attributively, is the A /klos/ in the meaning 'near' and related senses. This is a distant descendant of #2, and it pretty much holds the field these days: ordinary dictionaries (not ones organized on historical principles, like the OED) treat it as the primary sense for the A /klos/, with other senses treated as specialized uses.

One survivor (pointed out to me by grixit on 3/28) appears in the noun close stool / close-stool (the OED's preference) / closestool, which the OED defines decorously as "a chamber utensil enclosed in a stool or box". The OED's most recent cite is from 1869, and I had thought that the noun was now archaic -- the hospitals and care facilities I've dealt with all use commode for the object in question -- but I see from some googling that it's still in use. But the A in it is pronounced /klos/, it's not usable predicatively, and it's not even clear that it has the meaning 'closed'; close-stool is an opaque idiom, not relevant to the original question about signs.

Possibly more relevant is the noun close season (pointed out to me by Cameron Majidi on 3/28). This item was new to me, but it's in the OED, in the two senses Majidi noted in his mail to me:

2. Brit. In professional sport: the period of the year when a particular sport is not played. [cites from 1890 to 2004]

The OED gives /klos/ and /kloz/ as alternative pronunciations for both British and American English. This gets us (oh dear) closer, in both pronunciation and meaning, to what we're looking for. But in both senses, close season is a fixed expression, and I assume that the A in it can't be used predicatively: *The season is close [with either pronunciation]. So there are As /klos/ and /kloz/ hanging around in the corners of modern English, but they aren't available for use on signs.

One more nomination in my mailbox: from Andrew Clegg on 3/29: close /kloz/ circuit and close /kloz/ minded, to which I can add close /kloz/ caption(ed). (From Google searches, Clegg finds the first to be primarily U.K. usage and the second to be more widespread; I believe that the third is primarily North American usage, if only because closed-caption(ed) itself is, according to the OED, originally and chiefly North American.) In each of these cases, close is a variant of standard closed in a fixed expression. As Clegg notes, the variation surely began in speech, where as Mark Liberman said a little while ago:

(and there's a huge literature on English "final t/d-deletion" in general). These spoken variants are eventually recognized in spelling (though dictionaries are slow to record the "reduced" variants), and some speakers seem to have reanalyzed some of the expressions -- so that for some people, ice tea is now understood as having the N ice as its first element. I don't know if some speakers have come to see the close /kloz/ of close circuit etc. as a new adjective. But even if they do, it appears only in certain fixed expressions and then only attributively. So, once again, it's not available for use on signs. (Well, not at the moment, so far as I can tell; who knows what might happen in the decades or centuries to come. After all, the A /klos/ 'closed' ended up with the primary sense 'near' in about 700 years.)

Let this bring this topic to a (sigh) close for now.

Well, maybe not the *first*, actually

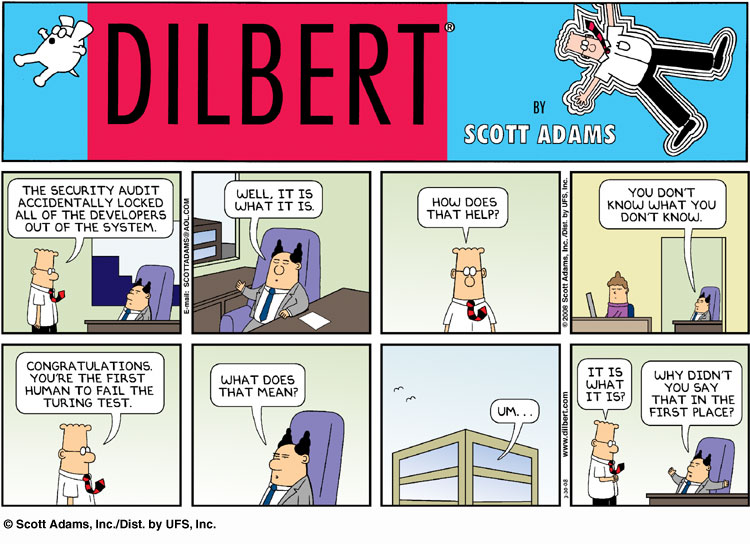

Today's Dilbert explores the hidden weakness of the Turing Test.

[John Lawler writes:

Certainly not the first, funny as it is.

Don't forget Barry and Julia, whose antics have been linked on the Chomskybot FAQ for some time.

]

[Pekka Karjalainen recommends Mark Rosenfelder's Crib Notes for the Turing Test.]

Fourniret mailbag

A few days ago, I wrote about Michel Fourniret, the "Ogre of Ardennes", an accused serial killer known for what John Lichfield in the Independent called "complex, verbose but inaccurate French, with unnecessary subjunctive verbs and sub-clauses" ("Il fallut que j'accusasse: the morphology of serial murder", 3/27/2008).

Searching the web, I was able to find only one specific example of Fourniret's linguistic style, the phrase "Il fallut bien que je l'enterrasse" ("it was indeed needful that I should bury her"). The article in Le Monde remarked on the imperfect subjunctive, but called his language "suranné et ampoulé" ("outdated and turgid"), not inaccurate. So I wondered whether Fourniret is really given to hypercorrections and other mistakes in attempting to use a register above his station, or whether he's just obnoxiously pretentious and fussy.

This brought in quite a bit of mail. As usual, I stuck the first few on the end of the post as updates. But a few days have gone by, so here's some more commentary on the same topic.

At the end of the earlier post, Alex Price suggested that the imperfect subjunctive "sounds funny" to modern French speakers because the ending -asse "recalls the -asse ending of many informal, often pejorative nouns". Coby Lubliner sent in a joke that

Your post about Michel Fourniret and his language habits reminded me of the film Panique (1947), in which Monsieur Hire, played by Michel Simon, raises the police inspector's suspicions by saying "sans que je le susse..." to which the flic responds, mockingly, "sans que vous le sussiez" (which sounds like "suciez"). I don't remember if the 1989 remake (Monsieur Hire) has this exchange.

Having to translate a joke is even more of a thankless task than having to provide a monolingual explanation for one. But here goes anyhow:

"sans que je le susse" = "without me knowing it", literally "without that I knew it", "susse" being the 1st-person singular imperfect subjunctive of savoir "know";

"sans que je le suce" = "without me sucking it", "suce" being the 1st-person singular (indicative or subjunctive) of sucer "suck";

"sans que vous le sussiez" = "without you knowing it", "sussiez" being the 2nd-person plural (or formal) imperfect subjunctive of savoir;

"sans que vous le suciez" = "without you sucking it", literally "without that you sucked it", "suciez" being the 2nd-person plural (or formal) imperfect indicative of sucer "suck".

Some readers speculated that Mr. Lichfield might have thought that the term imparfait ("imperfect") in "l'imparfait du subjonctif" was referring to correctness rather than aspect -- or tense, or whatever morphological category French imperfects really belong to these days. (A discussion of the relevant areas of current usage is here.)

But Andrew Brown wrote:

You might want to write to [John Lichfield] directly. I've known him for twenty years, and he is one of the best, most lucid and scrupulous journalists I've worked with -- in this context a survival from when the Independent was a high-minded broadsheet. I wouldn't believe much that I read in that paper today without corroboration, but Lichfield doesn't write stuff without evidence and he does speak very good French and knows the country well.

So I'll send him a note, inviting comment, if I can find an email address.

Meanwhile, Fabio Montermini sent in a pointer to some new evidence, as well as a discussion of French journalists' reaction:

If you didn't see it yet, today's Le Figaro quotes larger extracts from the letter M.F. wrote to his judges, and the journalist also provides a sort of explication de texte: ["La cour tente de briser le silence de Fourniret", 3/28/2008]. The article also provides a reproduction of Fourniret's handwritten text, so you can read a bit more of his letter. I won't make an explication de texte myself. I am not a native speaker of French, but according to my competence I don't find any inaccuracy in Fourniret's prose. Among the characteristics the journalist points out, there is the fact that M.F. uses "well known proverbs" ("proverbes rebattus") and a sometimes familiar vocabulary: "cinoche" (argot for "cinéma"), "putain", "péter". In the reproduction of the handwritten part, there are in fact a lot of fixed expressions which are almost clichés, especially from the legal language: "ratissages au peigne fin", "tous azimuths", "affaires non élucidées", etc.

French journalists' comments seem to me also very interesting. In general, the French adore high prose and beautiful style (sometimes they claim it is a reflection of the "génie" of the French language). But look at the expressions used to qualify M.F.'s prose in the article from Le Figaro: "qui tourne vite à la logorrhée", "formulation alambiquée", "style, que l'auteur veut soigné, voire ampoulé". This last phrase is significant: the journalist judges that in any case M.F.'s style cannot be genuinely "soigné", such a mean person cannot actually put "génie" in his style. It seems to me a good example of an ideological reading of language.

[Daniel Ezra Johnson sent a really bad joke:

The imperfect subjunctive isn't so uncommon, you'll see it in any bar in Canada:

BIÈRE EN FÛT

I've already explained one questionable joke in this post, so you folks are on your own with this one. Well, OK, I'll observe that in Canadian French, en fût means "on tap" -- in France, I think it would mean "in tree trunk" or something of the sort -- and I'll give you a link to the verb conjugator at wordreference.com, set up for etre. ]

Hoping to be haunted by legitimacy

According to Perry Bacon Jr. and Anne E. Kornblut, "Clinton Vows To Stay in Race To Convention", Washington Post, 3/30/2008:

"We cannot go forward until Florida and Michigan are taken care of, otherwise the eventual nominee will not have the legitimacy that I think will haunt us," said the senator from New York.

I hate to go all Kilpatrick on this, but wouldn't it be a lack of legitimacy, or perhaps a failure to achieve legitimacy, that would haunt them? As quoted, the sentence seems to me to indicate that Senator Clinton hopes to be haunted by legitimacy, and for that reason plans to stay in the race until the nominating convention in August.

For comparison, here are a few other sentences exhibiting the pattern "... will not have the X that will Y <someone>", as found on the web. In all cases, it's the X that will do the Y-ing, not the failure to have the X.

The upside is they will not have the sort of firepower that will cause the US military a lot of problems ...

The absinthe you get in the US will not have the wormwood in it that will get you "high".

... you will not always have the answers to situations that will confront you in your career/life

... people will not have had the insurance that will allow them to recover.

Josh Marshall calls this interview "pretty astonishing" ("Clinton: All The Way to Denver", TPM, 3/30/2008), although it's the politics rather than the syntax that surprises him. However, he does note an interesting point of usage:

The key quote from the interview is this one: "I know there are some people who want to shut this down and I think they are wrong. I have no intention of stopping until we finish what we started and until we see what happens in the next 10 contests and until we resolve Florida and Michigan. And if we don't resolve it, we'll resolve it at the convention -- that's what credentials committees are for."

So she's promising to remain in the race at least until June 3rd when the final contests are held in Montana and South Dakota and until Florida and Michigan are 'resolved'. Now, that can have no other meaning than resolved on terms the Clinton campaign finds acceptable. It can't mean anything else since, of course, at least officially, for the Democratic National Committee, it is resolved. The penalty was the resolution.

As we've often noted in defense of others, speaking extemporaneously in public is a hard thing to do, and occasional awkwardnesses, infelicities and downright flubs are to be expected. On the other hand, Senator Clinton's quotes in this case were apparently from remarks prepared in advance, on a topic central to her campaign. According to the WaPo article,

The Clinton campaign requested the interview Saturday to talk about how she could win and to emphasize her focus on Michigan and Florida.

So let me note that if linguistic awkardness were part of the journalistic meta-narrative about Hillary Clinton, and someone were keeping score the way Slate's Jacob Weisberg has been toting up Bushisms, this interview would certainly go into the file of Hillarities.

In fact, I've always had the impression that Senator Clinton is a skillful and well controlled speaker. Could this series of rather awkward statements be a sign of an unusual level of stress?

[Andre Mayer wondered:

Or did she say:

"We cannot go forward until Florida and Michigan are taken care of, otherwise the eventual nominee will not have the legitimacy -- that I think will haunt us," said the senator from New York."

I wondered about that myself, and looked for a video or audio clip of the interview to check, but couldn't find any. That construal would still be pretty awkward for a prepared statement, though, unless the Post's reporters mangled the quote more thoroughly than just by ignoring a clause boundary.]

Occupational eponymy

Gerry Mulhern of the Queen's University Belfast wrote a letter to Times Higher Education (2/28/08) after he looked at the list of the vice-chancellors of the Russell Group of the top (and hence most prosperous) UK research universities. He had noticed that there were two named Grant, and several other money-related names like Sterling (the honorific adjective used for the British pound), Thrift (the virtue of good budgeting), and Brink (the cash transport trucking company). He said it reminded him of the name of a director of human resources he once knew (back when Human Resources was still called Labor Relations, I expect), named Strike. The vice-chancellor of the University of Portsmouth later sent in letter (3/13/08) saying simply that he had "never had the guts to study onomastics." His surname, the signature revealed, is Craven. There is a childish joy to these odd coincidences that have given us people apparently named for their jobs (or people who obediently selected the jobs their names foretold). Eric Bakovic nearly choked up his oatmeal last December when he noticed an item about a food company executive with a name suggestive of hurling. I noticed with delight and amazement today that the name of the public relations man cited on this Arts and Humanities Research Council page is Spinner. Honestly. I swear this is not one of my little deadpan jokes. Spinner really is working as a spinner.

By the way, let me not forget to note that the current US Secretary for Education is named Spellings.

Names suited to the occupations of their owners in this way are sometimes known as aptonyms. There is a huge list of them at this site (thanks to Andrew Leventis for this). Some (like New Scientist magazine) refer to the phenomenon under the heading "nominative determinism". The New Scientist got into the business of supplying aptonyms in its Feedback column after noticing an article about incontinence in a urology journal with a truly astounding by-line that I really don't think I want to reveal to you.

Oh, all right. It was Splatt and Weedon. You have John Cowan to blame for me mentioning this (thanks, John; don't send any more).

March 29, 2008

More WTF coordinate questions

Today's find in the world of WTF coordinate questions is

We've been here before, though with a slightly less complicated example:

[A couple more examples from real life: from Paul Kay on 9/13/06, a television commercial for the over-the-counter sleeping medicine Lunesta, noted 9/11/06: Do you wake up in the night and can't go back to sleep? And from Elizabeth Daingerfield Zwicky on 2/14/08, a notice at Kepler's book store in Menlo Park CA: Do you like to knit but are looking for a meaningful project?]

Here's the problem: leaving out many important details, examples like (2) and (3) appear to have something like the structure

Instead, as I observed back in 2005, things like (2) and (3) seem to be simply the yes-no question counterparts to declaratives like

(3') Someone here has been raped and speaks English.

In a later posting I returned briefly to these WTF coordinate questions and alluded briefly to three alternative analyses for them, all of which treat them as involving a coordination of some constituent with an ELLIPTICAL subpart, rather than as being "reductions" of coordinations of full clauses. (I owe these ideas to Language Log and ADS-L readers who wrote me about my first posting and to colleagues at Stanford who commented on a presentation I gave in August 2005.) All would treat simpler coordinations like saw Kim and Sandy as [saw Kim] and [Ø Sandy] (or, better, [ saw Kim] [and [Ø Sandy]], but I'll put aside the question of where conjunctions fit into these structures), but via different formal mechanisms:

Idea 2: the Ø is an instance of "functional control", as in Kim wants Ø to leave.

Idea 3: the Ø is part of an Initially Reduced Question, as in (1) vs. (3) (cf: Ø Sandy gone yet?).

There are knotty technical questions here. I bring these three ideas up only to demonstrate that there are alternatives to the reduction idea. A little more on this below. But first, a note that standard assumptions about constituency might also be called into question. The usual assumption about (3') is that it has a coordinate VP:

A final remark on "deletion", "omission", "reduction" and similar turns of phrase. These terms, which strongly suggest an analysis in which one construction is secondary and is in some way derived from another, primary, construction via various formal operations, can be deeply misleading. (The terms are useful, maybe necessary, but in the end they're just terms, not claims to truth or any kind of analysis.) Even when the historical sequence seems clear, my current position is that once the variants are out there, they are just variants; they will share elements of their structures, true, but they'll have their own details and uses and lexical idiosyncrasies and the like. Each should be described on its own. We should take seriously the idea that "reduced coordinations" are not just full coordinations with some pieces left out, but might be constructions in their own right.

Modesty, hod-carrying, everything but relevance

Interesting to see my friends Mark Liberman and Stephen Jones arguing about whether James Kilpatrick's recent article makes good points. I was already planning to comment on my own reaction to the article: I was astounded by its sheer rambling emptiness; it was far worse than I was expecting.

Kilpatrick had a very clear mandate: he had been asked Why do we study grammar? by a first-year high school student in Oregon named Kathryn. Her question does need an answer. Kilpatrick was apparently intending to provide one. But instead he just sort of staggers about for six hundred words and then falls over and stops. Neither Mark nor Stephen has given you a proper sense of how bad the article is.

Kilpatrick's first point is that using proper grammar is like not driving into downtown Portland wearing a polka-dot bikini. (I swear I am not making this up.) The girl who wore the itsy-bitsy teeny-weeny yellow polka dot bikini in the song (it was a hit back in 1960; Kilpatrick was apparently 40 by then, and should not have even been listening to such songs) was embarrassed at having to come out of the water. Grammar is modesty, Kathryn. Cover your midriff.

He then moves to some condescending comments about the working-class speech of an imagined "hod carrier" who "don't speak no good English" but "pays the rent and, you know, it's like he treats his wife real good". He concedes that the hod carrier might do a good job of work, but... I don't know. I cannot see what that paragraph is supposed to be driving at. It goes nowhere as far as the topic of motivating grammatical study is concerned.

Next he says that the point of grammar is "to avoid being misunderstood", and drifts from there into what seems a glaringly irrelevant remark about vocabulary size ("a hundred thousand words for everyday use and half a million more for special occasions"), and tries to make it relevant by declaring that "we can put these riches to work" with grammar. Otherwise will be unable to write precise laws, persuasive sermons, or clear doll's house assembly instructions. (By the way, everything I've assembled recently has instructions that are entirely pictorial. So much for grammar.) This is the misguided view that Mark convincingly calls "transparent nonsense". It's about getting a message across effectively, and not about studying grammar.

Struggling to get back to his theme, Kilpatrick declares (getting somewhat desperate) that one reason for studying grammar is that "it is surely more fun than algebra." Apparently "once you've done one quadratic equation, you've done them all" (!). But drift sets in again, leading him to remark that "there are few ironclad 'rules' of English composition" — which apparently means there isn't much to study, undercutting his whole point.

His remaining statements are these: First, that he is not a snob, he is merely practical (this is about himself rather than grammatical study).

Second, that English grammar "has its awkward patches" but nonetheless "is a language of remarkably good order" (I do not see what these impressionistic value judgments have to do with his topic).

Third, that "irregular verbs have a pattern of irregularity" and this is exemplified by comparing Kathryn has and Kathryn had (they provide "a perfect, or at least a past perfect example", he says, bafflingly).

And fourth, in a concluding explosion of anglophone triumphalism, that "English is the greatest language ever devised for communicating thought" — the remark that Mark commented on originally, which has nothing to do with why we might or should study grammar.

And there, having hit the 600-word point without having made a single sensible remark about why we study grammar, he simply stops.

Steve says the article "is actually rather good", and even Mark says "Kilpatrick writes beautifully"; but I demur. I think Kilpatrick's little piece may be the worst piece of writing about language that I've ever seen. And the question it starts with — why we study grammar — remains to be addressed. I may have to tackle the question myself one day, because James Kilpatrick clearly has nothing to say about it.

Mongers

We have a real cheesemonger near where we live in Edinburgh: a small shop entirely devoted to cheese, with great wheels of the stuff in the window and a huge array of cheesy comestibles on offer and a genuine cheese expert in a white coat in charge and long lines of prosperous Stockbridge residents waiting outside to get in and receive their cheese advice.

We also have a genuine fishmonger a little further down into Stockbridge village, with huge ugly monkfish looking vacantly out into the street amid fantastic piles of ice, mussels, oysters, prawns, lobsters, herring, and more other slimy denizens of the deep than I could name. And it had been my intention for a while to write a witty Language Log post about the strange fact that in contemporary English (ignoring all the obsolete formations the OED includes) the combining form -monger can only be used to form words in which the first part is one of three basic household needs (cheese, fish, and iron) or one of a longer list of unsavory and frightening abstract entities (fear, gossip, hate, rumor, scandal, war, etc.). Nothing much more. (The word whoremonger, denoting the sort of person Eliot Spitzer would contact before a trip out of town, isn't really in use any more; pimp and madam have replaced it.) The form -monger isn't productively usable any more for deriving new words: you simply can't refer to a timber store as a *woodmonger, or use *meatmonger for a butcher.

But then The Onion just stole the idea for this theme out of my head and published today a highly witty news brief about a war- and fear-mongering conference. Probably better than what I could have done. Damn The Onion. Damn them.

Now what will I do? It's Saturday morning and you're all browsing Language Log to see if I'll have some little funny piece of nonsense for you, and I don't! Maybe Mark Liberman will come up with some more graphs showing that women's brains are (thank goodness) not wired all that differently from men's, or Heidi Harley will spot something really important while watching The Simpsons, or Melvyn Quince will surface with some more of whatever the hell it is that he does... We can but hope.

The values of "correct grammar"

In response to yesterday's post "James Kilpatrick, linguistic socialist", Stephen Jones writes:

I hate to have to come to Kilpatrick's defense again but his article is actually rather good. He makes two excellent points; that 'correct grammar' allows communication between people who speak different dialects, and that there must be some kind of agreed set of grammatical rules if we are to be able to interpret written laws and regulations.

Many people believe that stipulation of shared linguistic norms is essential to communication, or at least improves the efficiency and accuracy of communication. But on examination, this idea is transparent nonsense. Let me illustrate.

I'm one of the judges in the 2008 Tournament of Books at The Morning News, and The Brief Wondrous Life of Oscar Wao, by Junot Díaz, has made it to the final round. Chapter One ("GhettoNerd at the End of the World, 1974-1987") starts like this:

Our hero was not one of those Dominican cats everybody's always going on about -- he wasn't no home-run hitter or a fly bachatero, not a playboy with a million hots on his jock.

This sentence contains an instance of negative concord, a non-standard grammatical feature that isn't part of my dialect of English. But this doesn't cause me any trouble -- it wouldn't have been any easier for me to understand the sentence if Díaz had chosen to write "wasn't a home-run hitter" instead of "wasn't no home-run hitter".

The same sentence also includes several non-standard words or phrases. Cats is an antique piece of hipster slang; fly is slightly more recent; bachatero I didn't know, but it seems to mean a singer of bachatas, a kind of Dominican popular music; hots on his jock I can more or less guess. I wouldn't use any of these, and didn't even know some of them, but Díaz got his idea across, and the non-standard lexical choices are part of what he communicates.

Oscar Wao is a terrific book, but of course I could have chosen Huckleberry Finn, an even better book that's even denser with "incorrect grammar" and non-standard word usage:

You don't know about me without you have read a book by the name of The Adventures of Tom Sawyer; but that ain't no matter.

Here's a final example, of a very different kind. Seth Roberts is visiting Penn to give a talk, and on Thursday I had him over for dinner with 15 or 20 students in Ware College House, where I'm faculty master. After dinner we traded favorite-books recommendations for a while, and I suggested Gibbon's Decline and Fall. As a result, I took it off the shelf and read myself to sleep with Chapter XXVI (365-395 A.D.), "Manners of the Pastoral Nations", which reminded me of how much I like Gibbon.

But it also reminded me of the changes in English style and usage since 1776. Consider the following sentence, discussing the Roman world's reaction to the great earthquake and tidal wave "in the second year of the reign of Valentinian and Valens":

They recollected the preceding earthquakes, which had subverted the cities of Palestine and Bithynia; they considered these alarming strokes as the prelude only of still more dreadful calamities, and their fearful vanity was disposed to confound the symptoms of a declining empire and a sinking world.

No one writes like Gibbon now. This may be our loss, but it's also our reality. Along with the rhetorical differences, there are some changes in grammar and usage. We no longer use subvert in the OED's sense 1, "to overthrow, raze to the ground (a town or city, a structure, edifice)". We no longer put only after the word it limits ("the prelude only of still more dreadful calamities"), except in fixed phrases like "by appointment only".

But these differences don't get between Gibbon and me to any significant extent. I wouldn't enjoy him more, or understand him better, if someone modernized his language.

Obviously, reader and writer must share linguistic norms to some extent. I can't read the Kalevala, much as I might like to, because I don't know enough Finnish. But it's just plain silly to insist that writing must conform to James Kilpatrick's grammatical stipulations in order for Anglophone readers to be able to understand it properly.

What about Stephen's second point, that "there must be some kind of agreed set of grammatical rules if we are to be able to interpret written laws and regulations"? The trick here, as discussed in the post that Stephen is responding to, is what "agreed" means. Kilpatrick believes, or at least asserts, that the route to linguistic clarity is grammatical stipulation by self-appointed experts. I think this is naive and empirically false. Linguistic norms are examples of what Hayek called "spontaneous order", arguing against the "highly influential schools of thought which have wholly succumbed to the belief that all rules or laws must have been invented or explicitly agreed upon by somebody".

Stephen continues:

Also his point that we have 'many different vocabularies' and that the most important thing is to consider the target audience ('Know thy reader') is excellent advice.

I agree.

As I said before, Kilpatrick's problem is that he picked up his theory at a garage sale run by dysfunctional schizophrenics. What he actually recommends in practice is usually spot on.

I disagree with both points. Kilpatrick picked up his theory from the proud and dominant intellectual tradition of rationalist constructivism. It may be dysfunctional, especially as applied to language, but it ain't no garage sale.

And Kilpatrick writes beautifully, but his practical recommendations are a capricious and unpredictable mixture of sensible advice and idiosyncratic peeves.

March 28, 2008

Open and closed

In an earlier posting, I asked when closing begins and when stopping starts. There was, of course, mail on the topic. I'll comment on three responses, in three separate postings, beginning with the morphological asymmetry between the opposites open and closed. Fernando Colina asked on 19 March:

Well, a language is a system of practices, not a designed system, so some things are as they are just because of the way they developed over time; there are plenty of anomalies and irregularities in every language. On the other hand, a language is a SYSTEM of practices, including many regularities. It turns out that almost everything about open and closed is a matter of regularities; the special facts are the presence of an adjective open in the language and the absence of an adjective close (pronounced /kloz/; there is an adjective close /klos/, the opposite of far, as in "Don't Stand So Close to Me", but it's not relevant here).

I'll start with closed, which is, morphologically, the past participle (PSP) form of the verb CLOSE (also the past tense form, but it's the past participle that we're interested in here). In fact, there are two possibly relevant verbs CLOSE here:

transitive CLOSE, denoting a causing (by some agent, usually but not always human) of this change of state (I closed the gate at dusk); such verbs are sometimes called "causative-inchoative" verbs, or more often just "causative" verbs.

This pairing of homophonous verbs -- inchoative intransitive and causative transitive -- is very general in English, extending even to new formations (Palo Alto will rapidly Manhattanize 'become like Manhattan', They are rapidly Manhattanizing Palo Alto 'causing it to become like Manhattan').

Now, the PSP of a state-change verb can be used as an adjective that denotes the property of being in that state, without any implication of change. In particular, closed can be used as a "pure stative" adjective: The window is closed at the moment doesn't require that the window was ever open (it might have been built in a closed state), and The flower is closed doesn't require that the flower was ever open (it might not yet have opened, and maybe never will), and someone with a closed mind might never have had an open one (and might never have one).

Since there's no adjective close /kloz/ in English, the stative adjective closed gets to fill its slot in the pattern, serving as the opposite of the (morphologically simple) adjective open.

In addition, the PSP of a transitive verb (whether causative or not) is also used in the passive construction, as in The gate was closed by the guard at dusk. This use denotes an event, not a state.

Put those last two things together, and you get the possibility of ambiguity, between a pure state reading for a PSP and a passive reading for it: The gate was closed at dusk 'The gate was in a closed state at dusk' (stative adjective) or 'Someone closed the gate at dusk' (passive). The stative adjective use is historically older, with the passive use developed from it, but the two uses have coexisted for centuries. The ambiguity is long-standing and widespread.

A further complexity is that the PSP of a transitive verb (whether causative or not) can also be used as an adjective with the semantics of the passive. The point is subtle, but it's fairly easy to see for non-causatives (and it will become important in a little while, so I can't just disregard it). Consider The point is disputed. This could be understood as a passive, but its most natural interpretation is as asserting that the point has the property of having been (or being) disputed by some people (a sense that allows an affixal negative in un-: The point is undisputed 'No one disputes the point'). For causatives, this sort of interpretation is usually a special case of the pure stative reading, so that it's hard to appreciate that it's there.

On to open. We start with the adjective lexeme OPEN, which is a pure stative; The window is open doesn't require that it was ever closed (it might have been built that way), and The restaurant is open doesn't require that it was ever closed (it could be one of those restaurants that are always open). The adjective can serve as the base for deriving two verb lexemes, the inchoative OPEN 'become open' and the causative OPEN 'cause to become open'. The story of the PSP opened then goes much as for the PSP closed, but with an important difference. The PSP opened has a passive use, as in The gate was opened by the guard at dawn. But the stative adjective use is hard to get: The gate is opened at the moment is decidedly odd. Why?

Because English already has a way to express this meaning (and a way that's shorter and less complex than the PSP opened): the adjective open. The PSP opened in this use is PRE-EMPTED (or, if you will, PREEMPTED) by the simple adjective open. (Pre-emption is a perennial topic in morphology and lexical semantics. A textbook example: English has no causative DIE alongside inchoative DIE because it's pre-empted by causative KILL; in a sense, KILL got there first, so there's no point in creating causative DIE.)

But... in special circumstances, the PSP opened could be used as an adjective -- with the semantics of the passive, as for disputed above. In particular, The envelope is opened could be used if the envelope was not merely open (rather than closed or sealed), but gave evidences of having been opened, say by slitting with a letter opener. This is a case where open might not be specific enough, so it doesn't automatically pre-empt opened.

We end up with an opposition between the stative adjectives open and closed (the former a simple adjective, the latter a PSP). We don't use opened for the first because of pre-emption, and we don't use close /kloz/ for the second because there is no such adjective in English.

Bureaucrats

It's tax season here in America and that usually leads to lots of mumbling under the breath about those "damn bureaucrats in Washington" who make up those unreadable tax forms. Several words in the English language rise to the level of making us mad and bureaucrat seems to be one of them. When our tax filing gets challenged, we blame those nasty bureaucrats at IRS. When we're bogged down with pages of needless forms to fill out, it's the fault of those anonymous servants of the government who are the problem. When a statute is incomprehensible, it's the bureaucrat's fault, even though we might better place the blame on the legislators who wrote it in the first place.

I rise today to defend those bureaucrats. Please stop hissing and booing. Let me explain why.

I suppose I rise to defend bureaucrats because I lived in Washington DC for almost half of my life, surrounded by lots of friends and neighbors who toiled somewhere in the bowels of the federal government. Most of them were really nice folks, just like the rest of us. Sure, they made errors sometimes (just like the rest of us) and occasionally they followed those arcane regulations to the point of seeming unreasonable. But hey, that was their job. They had to. The poor souls at the Social Security Administration (SSA), Medicaid, or Health and Human Services send out notices on which they aren't even allowed to even sign their own names. These are the anonymous sloggers who dutifully work at the job they were hired to do, often without the proper tools to it well. But they do the best they can anyway.

And, as I finally get around to the subject of language (after all, this is Language Log), I have to agree that bureaucrats often write perfectly dreadful prose. But rather than grousing about this or going into another of those language rants that we are so famous for at Language Log Plaza, consider this novel idea: why not try to help with this problem?

Over the years I've worked with a number of bureaucracies, trying to help them make their documents understandable to the general public. One of my favorite cases was one brought by the National Senior Citizens Law Center (NSCLC) against the U.S. Department of Health and Human Services (HHS) over two decades ago. NSCLC charged that the notices being sent out by SSA to Medicare recipients were unclear, unhelpful, and not even readable. The case focused on one notice that was intended to inform all SSA recipients that they also might be entitled to an additional SSA benefit, Supplementary Security Income (SSI). The case wended its way through the court system and finally ended up at the U.S. Supreme Court, which ruled for the plaintiff. Legal resolutions don't often guarantee immediate action, however, and it took quite a while for SSA to get around to sending out this notice to all Social Security recipients. That notice was so badly written and incomprehensible that NSCLC threatened HHS with still another lawsuit.

It was at that point that NSCLC asked me to rewrite the offending notice so that it could be understood by recipients. They liked my revision (which was actually a totally new attempt) and they submitted it to SSA, where the director liked enough to agree to send it out, thereby fending off still another round in federal court.

The really interesting thing, however, is that the Director of SSA, recognizing a serious internal problem in her bureau, then invited me to come to the SSA home office in Baltimore to train her notice writers to produce clear and informative notices like the one I produced about SSI. I agreed, and over the following two years (1984-1986) I trained about a hundred SSA bureaucrats in six, six-week sessions (each containing 15 or more notice writers working in their main and regional offices). I can't give you the details of this training program here (if you're interested, you can read about it in my 1998 book, Bureaucratic Language in Government and Business but I can say that these bureaucrats greatly benefited from my assigned fieldwork (linguists do this a lot), finding old people to test their revisions on. When their subjects understood what they wrote, the notice writers knew they were on to something. These bureaucrats also learned about topic analysis and topic sequencing and they even became rather competent in recognizing and using speech acts in their prose. In addition they were given some rudimentary principles of semantics, pragmatics, syntax, and usage--all based on the documents they were preparing to send out to the public.

These bureaucrats were good people and good bureaucrats. But they had been caught up in the contagious rigidity of the bureaucratic prose fostered by the system. Like most of us who learn to use the language of our fields (doctors and policemen come to mind), they had no background in writing clear and effective prose and, of course, no knowledge of linguistics. But even the small dose they got in this training program seems to have brought about an important change in that bureaucracy.

I was concerned, however, about whether this training would endure. I found my answer a few years later, when I retired and started to receive Social Security benefits myself. The notices I began to get were clear and informative. Something must have worked. One nice thing about bureacracies is that it's hard to change things once they get established. This experience shows, I hope, that it's more useful to try to help with a problem than simply to throw stones at it. What these bureaucrats needed was adequate information about how they could use language effectively to do their daily jobs.

So that's why "bureaucrat" isn't such a bad word for me.

James Kilpatrick, linguistic socialist

Wikipedia describes James J. Kilpatrick as "a conservative columnist". There's good evidence for this. His syndicated column was called "A conservative view"; he was, according to Wikipedia, "a fervent segregationist" during the civil rights movement; for many years he was the conservative side of the Point-Cointerpoint segment on 60 Minutes.

And yet, in his second career as "grammarian" -- by which he means "arbiter of English usage" -- Mr. Kilpatrick promotes the linguistic equivalent of a planned economy. Linguistic rules are to be invented by experts like him, on the basis of rational considerations of optimal communication, and imposed on the rest of us. For our own good, of course.

His most recent column ("Why do we study grammar?", 3/23/2008) offers a small but telling indication of this:

In speech or in writing, English is the greatest language ever devised for communicating thought.

Linguistic chauvinism aside, let's focus on the word "devised". Compare Hayek, Law, Legislation and Liberty, Volumes 1: Rules and Order, p. 10-11:

[Constructivist rationalism] produced a renewed propensity to ascribe the origin of all institutions of culture to invention or design. Morals, religion and law, language and writing, money and the market, were thought of as having been deliberately constructed by somebody, or at least as owing whatever prefection they possessed to such design. ...

Yet ... [m]any of the institutions of society which are indisensible conditions for the successful pursuit of our conscious aims are in fact the result of customs, habits or practices which have been neither invented nor are observed with any such purpose in view. ...

Man ... is successful not because he knows why he ought to observe the rules which he does observe, or is even capable of stating all these rules in words, but because his thinking and acting are governed by rules which have by a process of selection been evolved in the society in which he lives, and which are thus the product of the experience of generations.

In contrast, most academic linguists that I know are political liberals, who would not agree with Hayek about many issues in economic and social policy. There's an apparent paradox here, perhaps related to the curious connection between less government regulation of the economy and more government regulation of morals.

(For more detailed discussion, see "Authoritarian rationalism is not conservatism", 12/11/2007; "The non-existence of Kilpatrick's Rule", 12/14/2007.)

[Andre Mayer writes:

"Grammarian" is of course James J. Kilpatrick's third career. He was a newspaper editor for many years before becoming a columnist, which may explain his prescriptive views. (I think he once endorsed Eugene McCarthy for President, which -- like his views on language -- is actually compatible with a certain kind of conservatism.)

Well, at least he co-authored a book with McCarthy, "A Political Bestiary" (sample entry here). And a piece that Kilpatrick wrote for the National Review in 1968, "An Impolitic Politician", was republished in 2005 on the occasion of McCarthy's death. From this article, I gather that Kilpatrick admired McCarthy's style, and liked him as a person. It's less clear, at least from this evidence, that he endorsed any of McCarthy's political views. An affectionate obituary ("Remembering Gene McCarthy") from The Conservative Voice supports the same conclusion.]

Furth

The University of Glasgow's Faculty of Arts promulgated in 2002 a policy (see it here) that apparently relates to transfer of credit from foreign universities. But what it says, even in the main header to the page (and I thank Judith Blair for bringing this to my attention), is that it concerns "Grades received furth of Glasgow". What the hell is furth?

The answer is that it is yet another English preposition that I had never previously encountered in my entire life.

So I am still not done with learning the prepositions of my native language, for heaven's sake, despite being (i) a current resident of Scotland (and in fact Scottish born); (ii) a native and lifelong speaker of English; (iii) well acquainted after long experience with English in the UK, the USA, and Australia; (iv) a voracious reader since the age of three; (v) a Professor of General Linguistics in the very distinguished Linguistics & English Language department at the University of Edinburgh; (vi) first author of the chapter on prepositions in The Cambridge Grammar of the English Language, and most important of all, (vii) a Senior Contributing Editor for Language Log.

Both Mark Liberman and I were surprised to come upon any English preposition that we didn't know (neither of us had run into outwith until quite recently). But another one? This is more than just interesting. This is positively embarassing. Where have these regional prepositions being lurking during all the earlier part of my life?

What furth of Glasgow means "away from or outside of Glasgow": the policy involves grades assigned by students spending time away, typically at foreign universities during a year abroad. So furth takes an of-phrase, in the way that out usually does, and outside optionally does.

Middle English expert Meg Laing points out to me that furth has the same etymology as the intransitive preposition forth. (Yes, I know, the dictionaries all call it an adverb. All published dictionaries are wrong about where to draw the line between prepositions and adverbs. See Chapter 7 of The Cambridge Grammar of the English Language.)

Though far from moribund in contemporary Standard English, forth is not common, and occurs largely in fixed phrases. More than a third of the 589 occurrences in the Wall Street Journal corpus (199 occurrences) involve the fixed phrase back and forth. Another 81 are in instances of the idiomatic and so forth, synonymous with "and so on". The others occur as complement of verb lexemes (as usual I will indicate lexemes by citing plain forms in bold italics), and they have a very uneven frequency distribution: there are 103 occurrences of set forth, 63 of put forth, and 36 of bring forth, and the others occur at much lower rates.

We find hold forth (an idiom meaning "offer opinions"), come forth, and call forth about a dozen times each, and then a large number of other verb lexemes occurring with forth rather more rarely than that, between one and eight times each. (For the record, the other verb lexemes with forth as complement are blare, blossom, body (which was a new one to me, but it occurs twice), break, bubble, burst, conjure, drive, go, gush, hiss, hold, issue, jerk, offer, pour, sally (a verb that now only occurs with forth, and yes, the comic strip Sally Forth is named after this idiom), send, set, spring, stand, step, summon, throw, thrust, thrust, tumble, and venture.)

What is relevant in the present context is that there is not a single occurrence of forth of NP meaning "away from NP". That is the development, apparently now limited to Scotland, that led to furth of Glasgow. It was once paralleled in other dialects: the Oxford English Dictionary cites examples from 1500, such as Whan your mayster is forth of towne ("when your master is out of town") where forth is spelled with an o but takes the of phrase. But it describes forth of as "Now only poet. or rhetorical, and only in lit. sense expressive of motion from within a place." The only sign of furth is as an early alternate spelling, and never with of. (Note, though, that as Jim Smith points out to me, all modern English dialects have preserved the comparative and superlative forms further and furthest.)

So that was my latest preposition-learning episode. I wonder when I will next encounter an English preposition that I have never seen before.

[Update: The mail server at Language Log Plaza is fighting a losing battle against the tide of incoming mail offering variations on the phrase "furth of the Firth of Forth". If people would like to stop mailing these in now, that would be nice. Thank you.]

March 27, 2008

Il fallut que j'accusasse: the morphology of serial murder

According to John Lichfield ("Ogre of Ardennes' stands trial for girls' murders", The Independent, 3/26/2008), Michel Fourniret, who "is accused of seven murders of girls and young women and seven sexual assaults in a 16-year reign of terror in France and Belgium between 1987 and 2003",

is a man who likes to play mind games with investigators and appear more cultured than he really is. He is a keen chess player, who talks, and writes, in complex, verbose but inaccurate French, with unnecessary subjunctive verbs and sub-clauses.

Lichfield is not the first to accuse Fourniret of linguistic peculiarities. In fact, this seems to have become part of the standard journalistic narrative. However, I haven't been able to find other evidence that the accused killer's usage is "inaccurate" as opposed to old-fashioned and excessively formal. Thus we learn from "Michel Fourniret : 'l'Ogre des Ardennes'", Le Monde, 3/11/208 that

En prison, il a beau écouter Mozart, relire André Dhôtel, citer Rilke et parler en utilisant subjonctif et plus-que-parfait, il a beau mettre un point d'honneur à corriger méticuleusement ses procès-verbaux, son sadisme au petit pied fait de lui le coupable idéal d'une kyrielle d'autres meurtres non élucidés.

Although in prison he listened to Mozart, re-read André Dhôtel, cited Rilke and spoke using the subjunctive and the pluperfect; although he made it a point of honor to meticulously edit his statements; his small-time sadism made him the ideal suspect for a litany of other unsolved murders.

And back on 7/4/2004, Le Monde ran an article by Ariane Chemin under the headline "Michel Fourniret, récits criminels à l'imparfait du subjonctif" ("Michel Fourniret, crime stories in the imperfect subjunctive"), which amplifies the generalization a bit, and gives an actual example:

Michel Fourniret (…) adore en effet les mots. Ou plus exactement la langue française. Il l’écrit sans aucune faute d’orthographe. Il utilise un français, suranné et ampoulé, plein de circonvolutions, de subjonctifs et de plus-que-parfaits.

Indeed Michel Fourniret (...) loves words. Or more exactly, the French language. He writes it without any spelling mistakes. He uses a French that is outdated and turgid, full of circumlocutions, of subjunctives and pluperfects.

Le moins que l’on puisse écrire, c'est que le Français a une haute opinion de lui-même. Il a des lettres, ce monsieur. Et il aime les faire valoir, tout particulièrement aux yeux des enquêteurs belges. Il trousse le récit de ses viols et de ses étranglements dans des imparfaits du subjonctif: « Il fallut bien que je l’enterrasse. »

The least that one can say is that this Frenchman has a high opinion of himself. He's well educated, this fellow. And he loves to emphasize it, especially in front of the Belgian investigators. He frames the tale of his rapes and his stranglings in imperfect subjunctives: "It was indeed needful that I should bury her."